This repository has been archived by the owner on Apr 26, 2024. It is now read-only.

-

-

Notifications

You must be signed in to change notification settings - Fork 2.1k

Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

Fix historical messages backfilling in random order on remote homeser…

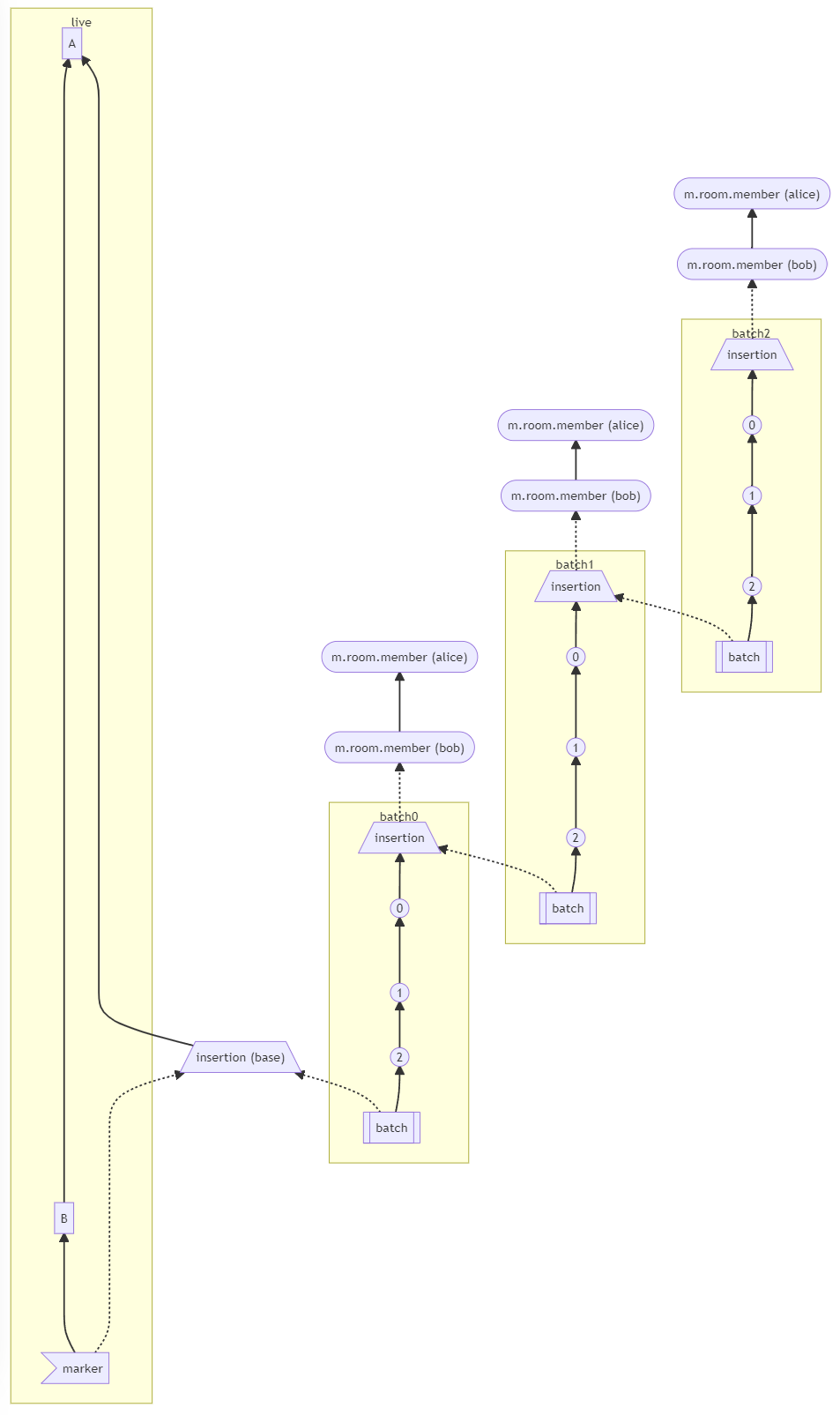

…vers (MSC2716) (#11114) Fix #11091 Fix #10764 (side-stepping the issue because we no longer have to deal with `fake_prev_event_id`) 1. Made the `/backfill` response return messages in `(depth, stream_ordering)` order (previously only sorted by `depth`) - Technically, it shouldn't really matter how `/backfill` returns things but I'm just trying to make the `stream_ordering` a little more consistent from the origin to the remote homeservers in order to get the order of messages from `/messages` consistent ([sorted by `(topological_ordering, stream_ordering)`](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)). - Even now that we return backfilled messages in order, it still doesn't guarantee the same `stream_ordering` (and more importantly the [`/messages` order](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)) on the other server. For example, if a room has a bunch of history imported and someone visits a permalink to a historical message back in time, their homeserver will skip over the historical messages in between and insert the permalink as the next message in the `stream_order` and totally throw off the sort. - This will be even more the case when we add the [MSC3030 jump to date API endpoint](matrix-org/matrix-spec-proposals#3030) so the static archives can navigate and jump to a certain date. - We're solving this in the future by switching to [online topological ordering](matrix-org/gomatrixserverlib#187) and [chunking](#3785) which by its nature will apply retroactively to fix any inconsistencies introduced by people permalinking 2. As we're navigating `prev_events` to return in `/backfill`, we order by `depth` first (newest -> oldest) and now also tie-break based on the `stream_ordering` (newest -> oldest). This is technically important because MSC2716 inserts a bunch of historical messages at the same `depth` so it's best to be prescriptive about which ones we should process first. In reality, I think the code already looped over the historical messages as expected because the database is already in order. 3. Making the historical state chain and historical event chain float on their own by having no `prev_events` instead of a fake `prev_event` which caused backfill to get clogged with an unresolvable event. Fixes #11091 and #10764 4. We no longer find connected insertion events by finding a potential `prev_event` connection to the current event we're iterating over. We now solely rely on marker events which when processed, add the insertion event as an extremity and the federating homeserver can ask about it when time calls. - Related discussion, #11114 (comment) Before | After --- | ---  |  #### Why aren't we sorting topologically when receiving backfill events? > The main reason we're going to opt to not sort topologically when receiving backfill events is because it's probably best to do whatever is easiest to make it just work. People will probably have opinions once they look at [MSC2716](matrix-org/matrix-spec-proposals#2716) which could change whatever implementation anyway. > > As mentioned, ideally we would do this but code necessary to make the fake edges but it gets confusing and gives an impression of “just whyyyy” (feels icky). This problem also dissolves with online topological ordering. > > -- #11114 (comment) See #11114 (comment) for the technical difficulties

- Loading branch information

1 parent

cf06783

commit fef2e79

Showing

9 changed files

with

342 additions

and

149 deletions.

There are no files selected for viewing

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1 @@ | ||

| Fix [MSC2716](https://github.com/matrix-org/matrix-doc/pull/2716) historical messages backfilling in random order on remote homeservers. |

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

Oops, something went wrong.