
tmrl is a fully-fledged distributed RL framework for robotics, designed to help you train Deep Reinforcement Learning AIs in real-time applications.

tmrl comes with an example self-driving pipeline for the TrackMania 2020 video game.

TL;DR:

- 🚗 AI and TM enthusiasts: tmrl enables you to train AIs in TrackMania with minimal effort. Tutorial for you guys here, video of a pre-trained AI here, and beginner introduction to the SAC algorithm here.

- 🚀 ML developers / roboticists: tmrl is a python library designed to facilitate the implementation of ad-hoc RL pipelines for industrial applications, and most notably real-time control. Minimal example here, full tutorial here and documentation here.

- 👌 ML developers who are TM enthusiasts with no interest in learning this huge thing: tmrl provides a Gymnasium environment for TrackMania that is easy to use. Fast-track for you guys here.

- 🌎 Everyone: tmrl hosts the TrackMania Roborace League, a vision-based AI competition where participants design real-time self-racing AIs in the TrackMania 2020 video game.

tmrl is a python framework designed to help you train Artificial Intelligences (AIs) through deep Reinforcement Learning (RL) in real-time applications (robots, video-games, high-frequency control...).

As a fun and safe robot proxy for vision-based autonomous driving, tmrl features a readily-implemented example pipeline for the TrackMania 2020 racing video game.

Note: In the context of RL, an AI is called a policy.

- Training algorithms: tmrl comes with a readily implemented example pipeline that lets you easily train policies in TrackMania 2020 with state-of-the-art Deep Reinforcement Learning algorithms such as Soft Actor-Critic (SAC) and Randomized Ensembled Double Q-Learning (REDQ). These algorithms store collected samples in a large dataset, called a replay memory. In parallel, these samples are used to train an artificial neural network (policy) that maps observations (images, speed...) to relevant actions (gas, brake, steering angle...).

- Analog control from screenshots: The tmrl example pipeline trains policies that are able to drive from raw screenshots captured in real-time. For beginners, we also provide simpler rangefinder ("LIDAR") observations, which are less potent but easier to learn from. The example pipeline controls the game via a virtual gamepad, which enables analog actions.

- Models: To process LIDAR measurements, the example tmrl pipeline uses a Multi-Layer Perceptron (MLP). To process raw camera images (snapshots), it uses a Convolutional Neural Network (CNN). These models learn the physics of the game from histories of observations equally spaced in time.

- Python library: tmrl is a complete framework designed to help you successfully implement ad-hoc RL pipelines for real-world applications. It features secure remote training, fine-grained customizability, and it is fully compatible with real-time environments (e.g., robots...). It is based on a single-server / multiple-clients architecture, which enables collecting samples locally from one to arbitrarily many workers, and training remotely on a High Performance Computing cluster. A complete tutorial toward doing this for your specific application is provided here.

- TrackMania Gymnasium environment: tmrl comes with a Gymnasium environment for TrackMania 2020, based on rtgym. Once the library is installed, it is easy to use this environment in your own training framework. More information here.

- External libraries: tmrl gave birth to some sub-projects of more general interest, that were cut out and packaged as standalone python libraries. In particular, rtgym enables implementing Gymnasium environments in real-time applications, vgamepad enables emulating virtual game controllers, and tlspyo enables transferring python objects over the Internet in a secure fashion.

- In the French show Underscore_ (2022-06-08), we used a vision-based (LIDAR) policy to play against the TrackMania world champions. Spoiler: our policy lost by far (expectedly 😄); the superhuman target was set to about 32s on the tmrl-test track, while the trained policy had a mean performance of about 45.5s. The Gymnasium environment that we used for the show is available here.

- In 2023, we were invited to Ubisoft Montreal to give a talk describing how video games could serve as visual simulators for vision-based autonomous driving in the near future.

Detailed instructions for installation are provided at this link.

Full guidance toward setting up an environment in TrackMania 2020, testing pre-trained weights, as well as a beginner-friendly tutorial to train, test, and fine-tune your own models, are provided at this link.

An advanced tutorial toward implementing your own ad-hoc optimized training pipelines for your own real-time tasks is provided here.

Read this section if you plan to use tmrl on a public network.

Security-wise, tmrl is based on tlspyo.

By default, tmrl transfers objects via non-encrypted TCP in order to work out-of-the-box.

This is fine as long as you use tmrl on your own private network.

HOWEVER, THIS IS A SECURITY BREACH IF YOU START USING tmrl ON A PUBLIC NETWORK.

To securely use tmrl on a public network (for instance, on the Internet), enable Transport Layer Security (TLS).

To do so, follow these instructions on all your machines:

- Open config.json;

- Set the "TLS" entry to true;

- Replace the "PASSWORD" entry with a strong password of your own (the same on all your machines);

- On the machine hosting your Server, generate a TLS key and certificate (follow the tlspyo instructions);

- Copy your generated certificate on all other machines (either in the default tlspyo credentials directory or in a directory of your choice);

- If you used your own directory in the previous step, replace the "TLS_CREDENTIALS_DIRECTORY" entry with its path.
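The config.json edits from the steps above can also be scripted. The following is a minimal sketch, not part of tmrl: `enable_tls` and `update_config_file` are hypothetical helpers, and the sketch assumes that "TLS", "PASSWORD" and "TLS_CREDENTIALS_DIRECTORY" are top-level entries of config.json. Generating and copying the certificate still has to be done separately, following the tlspyo instructions.

```python
import json
from pathlib import Path
from typing import Optional


def enable_tls(config: dict, password: str, credentials_dir: Optional[str] = None) -> dict:
    """Return a copy of a config dict with TLS switched on (hypothetical helper)."""
    if not password:
        raise ValueError("a non-empty password is required")
    updated = dict(config)  # shallow copy: only top-level entries are changed
    updated["TLS"] = True
    updated["PASSWORD"] = password
    if credentials_dir is not None:
        # Only needed when the certificate is not in the default tlspyo directory.
        updated["TLS_CREDENTIALS_DIRECTORY"] = credentials_dir
    return updated


def update_config_file(path: Path, password: str, credentials_dir: Optional[str] = None) -> None:
    """Rewrite a config.json file in place with TLS enabled (hypothetical helper)."""
    config = json.loads(path.read_text(encoding="utf-8"))
    updated = enable_tls(config, password, credentials_dir)
    path.write_text(json.dumps(updated, indent=2), encoding="utf-8")
```

Run the same update on every machine so that the password matches everywhere.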

If for any reason you do not wish to use TLS (not recommended), you should still at least use a custom password in config.json when training over a public network.

HOWEVER, DO NOT USE A PASSWORD THAT YOU USE FOR OTHER APPLICATIONS.

This is because, without TLS encryption, this password will be readable in the packets sent by your machines over the network and can be intercepted.

We host the TrackMania Roborace League, a fun way of benchmarking self-racing approaches in the TrackMania 2020 video game. Follow the link for information about the competition, including the current leaderboard and instructions to participate.

Regardless of whether they want to compete or not, ML developers will find the competition tutorial script handy for creating advanced training pipelines in TrackMania.

In case you only wish to use the tmrl Real-Time Gym environment for TrackMania in your own training framework, this is made possible by the get_environment() method:

(NB: the game needs to be set up as described in the getting started instructions)

    from tmrl import get_environment
    from time import sleep
    import numpy as np

    # LIDAR observations are of shape: ((1,), (4, 19), (3,), (3,))
    # representing: (speed, 4 last LIDARs, 2 previous actions)
    # actions are [gas, brake, steer], analog between -1.0 and +1.0
    def model(obs):
        """
        simplistic policy for LIDAR observations
        """
        deviation = obs[1].mean(0)
        deviation /= (deviation.sum() + 0.001)
        steer = 0
        for i in range(19):
            steer += (i - 9) * deviation[i]
        steer = - np.tanh(steer * 4)
        steer = min(max(steer, -1.0), 1.0)
        return np.array([1.0, 0.0, steer])

    # Let us retrieve the TMRL Gymnasium environment.
    # The environment you get from get_environment() depends on the content of config.json
    env = get_environment()

    sleep(1.0)  # just so we have time to focus the TM20 window after starting the script

    obs, info = env.reset()  # reset environment
    for _ in range(200):  # rtgym ensures this runs at 20Hz by default
        act = model(obs)  # compute action
        obs, rew, terminated, truncated, info = env.step(act)  # step (rtgym ensures healthy time-steps)
        if terminated or truncated:
            break
    env.wait()  # rtgym-specific method to artificially 'pause' the environment when needed

The environment flavor can be chosen and customized by changing the content of the ENV entry in TmrlData\config\config.json:

(NB: do not copy-paste the examples, comments are not supported in vanilla .json files)
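If you nevertheless want to start from an annotated example, the `//` comments have to be removed before the file is valid JSON. The following is a minimal sketch of one way to do this; `strip_line_comments` is a hypothetical helper, not a tmrl function, and it only handles `//` line comments (not `/* ... */` blocks).

```python
import json


def strip_line_comments(text: str) -> str:
    """Remove // comments that appear outside of JSON string literals."""
    cleaned = []
    for line in text.splitlines():
        in_string = False
        escaped = False
        cut = len(line)
        for i, ch in enumerate(line):
            if escaped:
                escaped = False  # this character is escaped, skip it
            elif ch == "\\":
                escaped = in_string  # backslashes only matter inside strings
            elif ch == '"':
                in_string = not in_string
            elif ch == "/" and not in_string and line[i:i + 2] == "//":
                cut = i  # the comment starts here
                break
        cleaned.append(line[:cut].rstrip())
    return "\n".join(cleaned)


annotated = """
{
  "ENV": {
    "RTGYM_INTERFACE": "TM20LIDAR", // TrackMania 2020 with LIDAR observations
    "IMG_HIST_LEN": 4 // length of the history of LIDAR measurements
  }
}
"""
config = json.loads(strip_line_comments(annotated))
```

Tracking whether the scanner is inside a string keeps values that contain `//` (for instance URLs) intact.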

This version of the environment features full screenshots to be processed with, e.g., a CNN. In addition, this version features the speed, gear and RPM. This works on any track, using any (sensible) camera configuration.

    {
      "ENV": {
        "RTGYM_INTERFACE": "TM20FULL",  // TrackMania 2020 with full screenshots
        "WINDOW_WIDTH": 256,  // width of the game window (min: 256)
        "WINDOW_HEIGHT": 128,  // height of the game window (min: 128)
        "SLEEP_TIME_AT_RESET": 1.5,  // the environment sleeps for this amount of time after each reset
        "IMG_HIST_LEN": 4,  // length of the history of images in observations (set to 1 for RNNs)
        "IMG_WIDTH": 64,  // actual (resized) width of the images in observations
        "IMG_HEIGHT": 64,  // actual (resized) height of the images in observations
        "IMG_GRAYSCALE": true,  // true for grayscale images, false for color images
        "RTGYM_CONFIG": {
          "time_step_duration": 0.05,  // duration of a time step
          "start_obs_capture": 0.04,  // duration before an observation is captured
          "time_step_timeout_factor": 1.0,  // maximum elasticity of a time step
          "act_buf_len": 2,  // length of the history of actions in observations (set to 1 for RNNs)
          "benchmark": false,  // enables benchmarking your environment when true
          "wait_on_done": true,  // true
          "ep_max_length": 1000  // episodes are truncated after this number of time steps
        },
        "REWARD_CONFIG": {
          "END_OF_TRACK": 100.0,  // reward for reaching the finish line
          "CONSTANT_PENALTY": 0.0,  // constant reward at every time-step
          "CHECK_FORWARD": 500,  // maximum computed cut from last point
          "CHECK_BACKWARD": 10,  // maximum computed backtracking from last point
          "FAILURE_COUNTDOWN": 10,  // early termination after this number of time steps
          "MIN_STEPS": 70,  // number of time steps before early termination kicks in
          "MAX_STRAY": 100.0  // early termination if further away from the demo trajectory
        }
      }
    }

Note that human players can see or hear the features provided by this environment: we provide no "cheat" that would render the approach non-transferable to the real world. In case you do wish to cheat, though, you can easily take inspiration from our rtgym interfaces to build your own custom environment for TrackMania.

The Full environment is used in the official TMRL competition, and custom environments are featured in the "off" competition 😉

In this version of the environment, screenshots are reduced to 19-beam LIDARs to be processed with, e.g., an MLP. In addition, this version features the speed (that human players can see). This works only on plain road with black borders, using the front camera with car hidden.

    {
      "ENV": {
        "RTGYM_INTERFACE": "TM20LIDAR",  // TrackMania 2020 with LIDAR observations
        "WINDOW_WIDTH": 958,  // width of the game window (min: 256)
        "WINDOW_HEIGHT": 488,  // height of the game window (min: 128)
        "SLEEP_TIME_AT_RESET": 1.5,  // the environment sleeps for this amount of time after each reset
        "IMG_HIST_LEN": 4,  // length of the history of LIDAR measurements in observations (set to 1 for RNNs)
        "RTGYM_CONFIG": {
          "time_step_duration": 0.05,  // duration of a time step
          "start_obs_capture": 0.04,  // duration before an observation is captured
          "time_step_timeout_factor": 1.0,  // maximum elasticity of a time step
          "act_buf_len": 2,  // length of the history of actions in observations (set to 1 for RNNs)
          "benchmark": false,  // enables benchmarking your environment when true
          "wait_on_done": true,  // true
          "ep_max_length": 1000  // episodes are truncated after this number of time steps
        },
        "REWARD_CONFIG": {
          "END_OF_TRACK": 100.0,  // reward for reaching the finish line
          "CONSTANT_PENALTY": 0.0,  // constant reward at every time-step
          "CHECK_FORWARD": 500,  // maximum computed cut from last point
          "CHECK_BACKWARD": 10,  // maximum computed backtracking from last point
          "FAILURE_COUNTDOWN": 10,  // early termination after this number of time steps
          "MIN_STEPS": 70,  // number of time steps before early termination kicks in
          "MAX_STRAY": 100.0  // early termination if further away from the demo trajectory
        }
      }
    }

If you have watched the 2022-06-08 episode of the Underscore_ talk show (French), note that the policy you saw was trained in a slightly augmented version of the LIDAR environment: on top of the LIDAR and speed values, we added a value representing the percentage of completion of the track, so that the model can know the turns in advance, similarly to humans practicing a given track.

This environment will not be accepted in the competition, as it is de facto less generalizable.

However, if you wish to use this environment, e.g., to beat our results, you can use the following config.json:

    {
      "ENV": {
        "RTGYM_INTERFACE": "TM20LIDARPROGRESS",  // TrackMania 2020 with LIDAR and percentage of completion
        "WINDOW_WIDTH": 958,  // width of the game window (min: 256)
        "WINDOW_HEIGHT": 488,  // height of the game window (min: 128)
        "SLEEP_TIME_AT_RESET": 1.5,  // the environment sleeps for this amount of time after each reset
        "IMG_HIST_LEN": 4,  // length of the history of LIDAR measurements in observations (set to 1 for RNNs)
        "RTGYM_CONFIG": {
          "time_step_duration": 0.05,  // duration of a time step
          "start_obs_capture": 0.04,  // duration before an observation is captured
          "time_step_timeout_factor": 1.0,  // maximum elasticity of a time step
          "act_buf_len": 2,  // length of the history of actions in observations (set to 1 for RNNs)
          "benchmark": false,  // enables benchmarking your environment when true
          "wait_on_done": true,  // true
          "ep_max_length": 1000  // episodes are truncated after this number of time steps
        },
        "REWARD_CONFIG": {
          "END_OF_TRACK": 100.0,  // reward for reaching the finish line
          "CONSTANT_PENALTY": 0.0,  // constant reward at every time-step
          "CHECK_FORWARD": 500,  // maximum computed cut from last point
          "CHECK_BACKWARD": 10,  // maximum computed backtracking from last point
          "FAILURE_COUNTDOWN": 10,  // early termination after this number of time steps
          "MIN_STEPS": 70,  // number of time steps before early termination kicks in
          "MAX_STRAY": 100.0  // early termination if further away from the demo trajectory
        }
      }
    }

In the example tmrl pipeline, an AI (policy) that knows absolutely nothing about driving or even about what a road is, is set at the starting point of a track.

Its goal is to learn how to complete the track as fast as possible by exploring its own capabilities and environment.

The car feeds observations such as images to an artificial neural network, which must output the best possible controls from these observations. This implies that the AI must understand its environment in some way. To achieve this understanding, it explores the world for a few hours (up to a few days), slowly gaining an understanding of how to act efficiently. This is accomplished through Deep Reinforcement Learning (RL).

Most RL algorithms are based on a mathematical description of the environment called Markov Decision Process (MDP). A policy trained via RL interacts with an MDP as follows:

In this illustration, the policy is represented as the stickman, and time is represented as time-steps of fixed duration. At each time-step, the policy applies an action (float values for gas, brake, and steering) computed from an observation. The action is applied to the environment, which yields a new observation at the end of the transition.

For the purpose of training this policy, the environment also provides another signal, called the "reward". RL is inspired by behaviorism, which relies on the fundamental idea that intelligence is the result of a history of positive and negative stimuli. The reward received by the AI at each time-step is a measure of how well it performs.

In order to learn how to drive, the AI tries random actions in response to incoming observations, gets rewarded positively or negatively, and optimizes its policy so that its long-term reward is maximized.

Soft Actor-Critic (SAC) is an algorithm that enables learning continuous stochastic controllers.

More specifically, SAC does this using two separate Artificial Neural Networks (NNs):

- The first one, called the "policy network" (or, in the literature, the "actor"), is the NN the user is ultimately interested in: the controller of the car. It takes observations as input and outputs actions.

- The second one, called the "value network" (or, in the literature, the "critic"), is used to train the policy network. It takes an observation x and an action a as input, and outputs a value. This value is an estimate of the expected sum of future rewards if the AI observes x, selects a, and then uses the policy network forever (there is also a discount factor so that this sum is not infinite).

Both networks are trained in parallel using each other. The reward signal is used to train the value network, and the value network is used to train the policy network.
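To make the quantity estimated by the value network more concrete, here is a minimal sketch of a discounted sum of rewards over one finite recorded episode. The critic estimates the expectation of this kind of sum over an unbounded future; `discounted_return` is a hypothetical helper for illustration, not a tmrl function.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Sum of rewards where the reward received k steps ahead is weighted by gamma**k."""
    total = 0.0
    # Iterating backwards applies one extra factor of gamma per step of delay.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Three steps with reward 1.0 and a discount factor of 0.5: 1 + 0.5 + 0.25
assert discounted_return([1.0, 1.0, 1.0], 0.5) == 1.75
```

With a discount factor strictly below 1 and bounded rewards, the sum stays finite even for arbitrarily long episodes.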

Advantages of SAC over other existing methods are the following:

- It is able to store transitions in a huge circular buffer called the "replay memory" and reuse these transitions several times during training. This is an important property for applications such as TrackMania where only a relatively small number of transitions can be collected due to the Real-Time nature of the setting.

- It is able to output analog controls. We use this property with a virtual gamepad.

- It maximizes the entropy of the learned policy. This means that the policy will be as random as possible while maximizing the reward. This property helps explore the environment and is known to produce policies that are robust to external perturbations, which is of central importance in real-world self-driving scenarios.
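The replay memory mentioned in the first point can be pictured as a fixed-capacity circular buffer with random sampling. The following is a minimal sketch under that assumption. It is not the tmrl memory implementation, and the `ReplayMemory` class below is hypothetical.

```python
import random
from collections import deque
from typing import Any, List, Optional


class ReplayMemory:
    """Fixed-capacity buffer: once full, the oldest transitions are overwritten."""

    def __init__(self, capacity: int, seed: Optional[int] = None) -> None:
        self._buffer = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def __len__(self) -> int:
        return len(self._buffer)

    def append(self, transition: Any) -> None:
        self._buffer.append(transition)

    def sample(self, batch_size: int) -> List[Any]:
        # Sampling with replacement lets the same transition be reused many times.
        return self._rng.choices(list(self._buffer), k=batch_size)


memory = ReplayMemory(capacity=3, seed=0)
for step in range(5):
    memory.append(step)  # a real transition would hold (obs, action, reward, next_obs, ...)
batch = memory.sample(batch_size=8)
```

Only the three most recent transitions remain in the buffer here, and each training batch can draw any of them repeatedly.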

REDQ is a more recent methodology that improves the performance of value-based algorithms like SAC.

The improvement introduced by REDQ consists essentially of training an ensemble of parallel value networks from which a subset is randomly sampled to evaluate target values during training. The authors show that this enables low-bias updates and a sample efficiency comparable to model-based algorithms, at a much lower computational cost.
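As an illustration of the subset sampling described above, here is a minimal sketch of how a REDQ-style target value could be computed for one transition when combined with SAC. It works on plain floats rather than neural networks. The function and its arguments are hypothetical and do not mirror the tmrl code.

```python
import random
from typing import Optional, Sequence


def redq_target(
    reward: float,
    next_q_values: Sequence[float],  # one estimate per target critic (N values)
    next_log_prob: float,  # log-probability of the next action under the policy
    gamma: float,
    alpha: float,  # entropy coefficient
    m: int,  # size of the random subset (M <= N)
    done: bool = False,
    rng: Optional[random.Random] = None,
) -> float:
    if rng is None:
        rng = random.Random()
    subset = rng.sample(list(next_q_values), m)  # M critics drawn without replacement
    soft_value = min(subset) - alpha * next_log_prob  # entropy-regularized value
    return reward + (0.0 if done else gamma * soft_value)


target = redq_target(
    reward=1.0, next_q_values=[5.0, 3.0, 4.0], next_log_prob=-2.0,
    gamma=0.9, alpha=0.5, m=2, rng=random.Random(0),
)
```

Taking the minimum over a small random subset, rather than over all critics, is what keeps the estimate from being either too optimistic or too pessimistic.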

By default, tmrl trains policies with vanilla SAC.

To use REDQ-SAC, edit TmrlData\config\config.json on the machine used for training, and replace the "SAC" value with "REDQSAC" in the "ALGORITHM" entry.

You also need values for the "REDQ_N", "REDQ_M" and "REDQ_Q_UPDATES_PER_POLICY_UPDATE" entries, where "REDQ_N" is the number of parallel critics, "REDQ_M" is the size of the subset, and "REDQ_Q_UPDATES_PER_POLICY_UPDATE" is the number of critic updates happening between each actor update.

For instance, a valid "ALG" entry using REDQ-SAC is:

    "ALG": {
      "ALGORITHM": "REDQSAC",
      "LEARN_ENTROPY_COEF": false,
      "LR_ACTOR": 0.0003,
      "LR_CRITIC": 0.00005,
      "LR_ENTROPY": 0.0003,
      "GAMMA": 0.995,
      "POLYAK": 0.995,
      "TARGET_ENTROPY": -7.0,
      "ALPHA": 0.37,
      "REDQ_N": 10,
      "REDQ_M": 2,
      "REDQ_Q_UPDATES_PER_POLICY_UPDATE": 20
    },

As mentioned before, a reward function is needed to evaluate how well the policy performs.

There are multiple reward functions that could be used. For instance, one could directly use the raw speed of the car as a reward. This makes some sense because the car slows down when it crashes and goes fast when it is performing well.

This approach would be naive, though. The actual goal of racing is not to move as fast as possible. Rather, one wants to complete the largest portion of the track in the smallest possible amount of time. This is not equivalent as one should consider the optimal trajectory, which may imply slowing down on sharp turns in order to take the apex of each curve.

In TrackMania 2020, we use a more advanced and conceptually more interesting reward function:

For a given track, we record one single demonstration trajectory. This does not have to be a good demonstration, but only to follow the track. Once the demonstration trajectory is recorded, it is automatically divided into equally spaced points.

During training, at each time-step, the reward is then the number of such points that the car has passed since the previous time-step. In a nutshell, whereas the previous reward function was measuring how fast the car was, this new reward function measures how good it is at covering a big portion of the track in a given amount of time.
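The idea of counting the demonstration points passed since the previous time-step can be sketched as follows. This is a simplified illustration, not the tmrl reward function: `progress_reward` is hypothetical, and using `check_forward` and `check_backward` as the bounds of a search window around the last reached point is an assumption based on the descriptions of the "CHECK_FORWARD" and "CHECK_BACKWARD" entries.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, ...]


def progress_reward(
    trajectory: Sequence[Point],  # equally spaced points of the demonstration
    position: Point,  # current position of the car
    current_index: int,  # index of the point reached at the previous time-step
    check_forward: int = 500,
    check_backward: int = 10,
) -> Tuple[float, int]:
    """Return (reward, new_index), the reward being the number of points passed."""
    start = max(0, current_index - check_backward)
    stop = min(len(trajectory), current_index + check_forward + 1)
    # The car is considered to have reached the closest point within the window.
    new_index = min(range(start, stop), key=lambda i: math.dist(trajectory[i], position))
    return float(new_index - current_index), new_index


demo = [(float(i), 0.0) for i in range(11)]  # straight demonstration along the x axis
reward, index = progress_reward(demo, position=(3.2, 0.5), current_index=1)
```

In this example the car moves from point 1 to point 3 of the demonstration, so the reward is 2.0. Driving backwards yields a negative reward.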

In tmrl, the car can be controlled in two different ways:

- The policy can control the car with analog inputs by emulating an XBox360 controller thanks to the vgamepad library.

- The policy can output simple (binary) arrow presses.

Different observation spaces are available in the TrackMania pipeline:

- A history of raw screenshots (typically 4).

- A history of LIDAR measurements computed from raw screenshots in tracks with black borders.

In addition, the pipeline provides the norm of the velocity as part of the observation space.

Example of tmrl environment in TrackMania Nations Forever with a single LIDAR measurement:

In TrackMania Nations Forever, we used to compute the raw speed from screenshots thanks to the 1-NN algorithm.

In TrackMania 2020, we now use the OpenPlanet API to retrieve the raw speed directly.

In the following experiment, on top of the raw speed, the blue car is using a single LIDAR measurement whereas the red car is using a history of 4 LIDAR measurements. The positions of both cars are captured at constant time intervals in this animation:

The blue car learned to drive at a constant speed, as it is the best it can do from its naive observation space. Conversely, the red car is able to infer higher-order dynamics from the history of 4 LIDARs and successfully learned to brake, take the apex of the curve, and accelerate again after this sharp turn, which is slightly better in this situation.

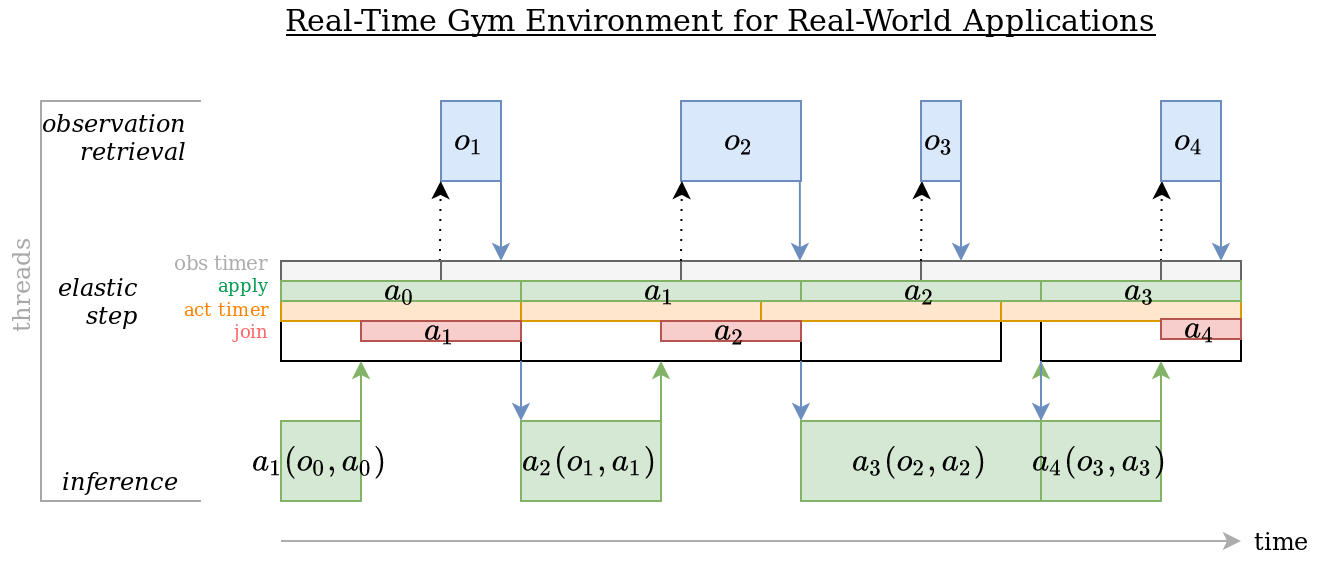

This project uses Real-Time Gym (rtgym), a simple python framework that enables efficient real-time implementations of Delayed Markov Decision Processes in real-world applications.

rtgym constrains the times at which actions are sent and observations are retrieved as follows:

Time-steps are elastically constrained to their nominal duration. When this elastic constraint cannot be satisfied, the previous time-step times out and the new time-step starts from the current timestamp.
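The elastic constraint can be sketched as a small scheduling rule. This is an illustration of the behavior described above, not a copy of the rtgym code: `next_time_step_start` is hypothetical, and its parameters borrow the names of the "time_step_duration" and "time_step_timeout_factor" configuration entries.

```python
def next_time_step_start(
    previous_end: float,  # nominal end of the previous time-step (seconds)
    now: float,  # current timestamp (seconds)
    time_step_duration: float,
    time_step_timeout_factor: float,
) -> float:
    """Return the timestamp at which the new time-step is considered to start."""
    timeout = time_step_duration * time_step_timeout_factor
    if now < previous_end + timeout:
        # Within the allowed elasticity: stay aligned on the nominal schedule.
        return previous_end
    # Too late: the previous time-step timed out, restart from the current time.
    return now


on_time = next_time_step_start(1.00, 1.02, 0.05, 1.0)
timed_out = next_time_step_start(1.00, 1.20, 0.05, 1.0)
```

In the first call the step is only 20 ms late, so the schedule is preserved and the new step starts at 1.00. In the second call the delay exceeds the tolerated 50 ms, so the new step starts at 1.20.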

Custom rtgym interfaces for Trackmania used by tmrl are implemented in custom_gym_interfaces.py.

tmrl is built with tlspyo.

Its client-server architecture is similar to Ray RLlib.

tmrl is not meant to compete with Ray, but it is much simpler to adapt in order to implement ad-hoc pipelines, and works on both Windows and Linux.

tmrl collects training samples from several rollout workers (typically several computers and/or robots).

Each rollout worker stores its collected samples in a local buffer, and periodically sends this replay buffer to the central server.

Periodically, each rollout worker also receives new policy weights from the central server and updates its policy network.

The central server is located either on the localhost of one of the rollout worker computers, on another computer on the local network, or on another computer on the Internet. It collects samples from all the connected rollout workers and stores these in a local buffer. This buffer is then sent to the trainer interface. The central server receives updated policy weights from the trainer interface and broadcasts these to all connected rollout workers.

The trainer interface is typically located on a non-rollout worker computer of the local network, or on another machine on the Internet (e.g., a GPU cluster). Of course, it is also possible to locate everything on localhost when needed. The trainer interface periodically receives the samples gathered by the central server and appends these to a replay memory. Periodically, it sends the new policy weights to the central server.
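The data flow between these three components can be mimicked in a single process. The following is a toy sketch with no networking at all (in tmrl, the transfers go through tlspyo). Every class and function name below is hypothetical.

```python
from typing import Any, List


class RolloutWorker:
    def __init__(self) -> None:
        self.buffer: List[Any] = []
        self.weights_version = 0  # stands in for the policy weights

    def collect(self, sample: Any) -> None:
        self.buffer.append(sample)

    def flush(self) -> List[Any]:
        samples, self.buffer = self.buffer, []
        return samples


class Server:
    def __init__(self) -> None:
        self.buffer: List[Any] = []

    def flush(self) -> List[Any]:
        samples, self.buffer = self.buffer, []
        return samples


class Trainer:
    def __init__(self) -> None:
        self.replay_memory: List[Any] = []
        self.weights_version = 0

    def train(self) -> int:
        self.weights_version += 1  # placeholder for actual gradient updates
        return self.weights_version


def synchronize(workers: List[RolloutWorker], server: Server, trainer: Trainer) -> None:
    for worker in workers:  # workers -> server
        server.buffer.extend(worker.flush())
    trainer.replay_memory.extend(server.flush())  # server -> trainer
    new_version = trainer.train()  # trainer -> server
    for worker in workers:  # server -> workers (broadcast)
        worker.weights_version = new_version


workers = [RolloutWorker(), RolloutWorker()]
server, trainer = Server(), Trainer()
workers[0].collect("sample_a")
workers[1].collect("sample_b")
synchronize(workers, server, trainer)
```

After one synchronization round, both samples sit in the trainer's replay memory and both workers hold the same new weights version.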

These mechanics are summarized as follows:

Contributions to tmrl are welcome.

Please consider the following:

- Further profiling and code optimization,

- Find the cleanest way to support sequences in Memory for RNN training.

You can discuss contribution projects in the discussions section.

When contributing, please submit a PR with your name in the contributors list with a short caption.

- Yann Bouteiller

- Edouard Geze

- Simon Ramstedt - initial code base

- AndrejGobeX - optimization of screen capture (TrackMania)

- Pius - Linux support (TrackMania)

MIT, Bouteiller and Geze.

Many thanks to our sponsors for their support!