
2.1 User-facing server features

2.2 Client features


5.1. Nomenclature

5.2. entities

5.3. actor_msg_resources

This project is meant to be a demonstration of how to design, architect and implement a full-stack application using an actor system. It was created for the purpose of exercising software engineering through a concrete product idea. The requirements are intentionally shallow so that focus is not diverted to domain-specific details which are not relevant to the aforementioned idea of showcasing the implementation.

Further, the implementation does not aim to be "production-ready" (see the Missing for production section).

This project is named "Library" because it implements a basic system for a library (as in a "book library") where users will be able to borrow books and return them later. This theme is simple and restricted enough that it allows us to focus on the software engineering aspects without having to bother with menial domain-specific details, which are not the purpose of this project.

Following traditional REST API principles, the routes are grouped by Resources, with semantic hierarchical nesting expressed through the interplay of slashes and parameters, e.g. /book/{id}/borrow.
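As a rough illustration of how such nested routes can be assembled, here is a minimal sketch. The `book_route!` macro body and the `book_action_route` helper are assumptions made for this example, not the project's actual definitions; the macro expands to a string literal so that it can serve as a `format!` template.

```rust
// Hypothetical stand-in for a per-resource route macro. It expands to a
// string literal, which is what `format!` requires as its template.
macro_rules! book_route {
    () => {
        "/book/{}"
    };
}

/// Builds a nested route under the Book resource, e.g. `/book/:id/borrow`.
/// (Helper name is made up for this sketch.)
fn book_action_route(param: &str, action: &str) -> String {
    format!("{}/{}", format!(book_route!(), param), action)
}

fn main() {
    // Tide-style named parameters start with a colon.
    let by_title = format!(book_route!(), ":title");
    let borrow = book_action_route(":id", "borrow");
    println!("{}", by_title);
    println!("{}", borrow);
}
```

Keeping the resource prefix in one place means every nested route under Book shares the same base path.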

Allows for user creation with custom access levels.

- User is the regular (non-special) access level which is able to borrow a book

- Librarian is a User, plus it can create new books and cancel existing borrows

- Admin is a Librarian, plus it has unlimited access to all functionality

Librarian and above can only be created by the Admin type.
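The hierarchy above (each level includes the previous one) can be modeled as an ordered enum. The following is a minimal sketch, not the project's actual types: the names `AccessLevel`, `is_allowed` and `can_create_user` are assumptions, and so is the rule that plain User accounts can be created by anyone, which the text does not state.

```rust
/// Access levels ordered from least to most privileged. Deriving
/// `PartialOrd` on a field-less enum compares variants by declaration order.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
enum AccessLevel {
    User,
    Librarian,
    Admin,
}

/// A route is reachable when the caller's level is at least the required one.
fn is_allowed(caller: AccessLevel, required: AccessLevel) -> bool {
    caller >= required
}

/// Librarian and above can only be created by an Admin.
/// Assumption of this sketch: anyone (even unauthenticated) may create a User.
fn can_create_user(creator: Option<AccessLevel>, new_level: AccessLevel) -> bool {
    if new_level >= AccessLevel::Librarian {
        creator == Some(AccessLevel::Admin)
    } else {
        true
    }
}

fn main() {
    println!("{}", is_allowed(AccessLevel::Librarian, AccessLevel::User));
    println!("{}", can_create_user(Some(AccessLevel::Librarian), AccessLevel::Librarian));
}
```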

Generates an access token with given credentials. The authentication strategy is primitive and crude (see Missing for production).

Where {query} matches by the book's title.

Requires at least Librarian access level

Lends a book to a given user for a given length of time.

Finishes the borrow for a given user.

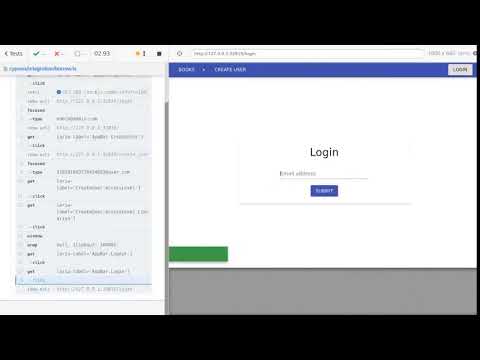

A Cypress recording of all the client-side features is available at https://www.youtube.com/watch?v=tR9ohvZu6Qo. Descriptions of each individual step executed in the recording can be found in the integration test file.

- Route protection gated by specific access levels per route.

- PgSQL database is run in a container, which allows for spawning multiple environments for parallel integration test runs.

- Logging for all message-passing and errors in actors.

- Integration testing for all public APIs with logging of the message history to snapshots.

- Execution is driven by a Bash script rather than a Makefile; the script is powerful and flexible enough to be used for starting everything.

- Rust is used for the server's application code.

- JavaScript is used for the UI.

- TypeScript for the integration test suite with Cypress.

- Bash is used everywhere else as the "glue" language.

The server consists of a REST API run inside a Bastion supervisor and served through Tide.

Actors - Bastion

The Bastion Prelude compounds the functionality of several other crates for a runtime primed for reliability and recovery. Effectively, the supervisor will spawn each actor's thread in a dedicated thread pool, thus isolating crashes between actors. The execution is wrapped through LightProc so that, once there is a crash, the system will react to the error by sending a message to the parent supervisor, which freshly restarts the actor's closure in a new thread. Advantages and robustness aside, adopting this strategy streamlines the communication pattern for the system as a whole, which I'll come back to in the How it works section.
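To make the restart behavior concrete, here is a deliberately simplified sketch of the idea using only `std::thread`. It is not how Bastion or LightProc are implemented; the `supervise` function and its restart limit are assumptions of this example.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::thread;

/// Runs `actor` on its own thread and restarts it whenever it panics, up to
/// `max_restarts` times. Returns how many restarts were needed, or `None`
/// if the limit was exhausted.
fn supervise<F>(actor: F, max_restarts: usize) -> Option<usize>
where
    F: Fn() + Send + Sync + 'static,
{
    let actor = Arc::new(actor);
    for restarts in 0..=max_restarts {
        let run = Arc::clone(&actor);
        // `join` returns Err when the thread panicked: the crash stays
        // isolated in that thread and is merely reported to the supervisor.
        if thread::spawn(move || (*run)()).join().is_ok() {
            return Some(restarts);
        }
    }
    None
}

fn main() {
    let attempts = Arc::new(AtomicUsize::new(0));
    let counter = Arc::clone(&attempts);
    // A flaky actor: crashes on its first two runs, then finishes normally.
    let restarts = supervise(
        move || {
            if counter.fetch_add(1, Ordering::SeqCst) < 2 {
                panic!("simulated crash");
            }
        },
        5,
    );
    println!("restarts needed: {:?}", restarts);
}
```

The panic messages still show up on stderr, but the process itself keeps running, which is the property the supervisor is there to provide.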

Messages - crossbeam-channel

Since Bastion spawns each actor in a separate thread, the communication between them is made possible by sending one-shot crossbeam channels (bounded, with a capacity of one) through messages, which will eventually reach the target actor's inbox and be replied to; more details in the How it works section.
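The request-reply pattern can be sketched as follows. To keep the example dependency-free it uses `std::sync::mpsc::sync_channel(1)` as a stand-in for a crossbeam channel bounded to one message; the `Echo` message, `spawn_actor` and `ask` are hypothetical names for this illustration.

```rust
use std::sync::mpsc::{channel, sync_channel, Sender, SyncSender};
use std::thread;

/// A message carries its payload plus a one-shot channel for the reply.
struct Echo {
    payload: String,
    reply: SyncSender<String>,
}

/// Spawns a trivial actor thread that answers every message in its inbox.
fn spawn_actor() -> Sender<Echo> {
    let (inbox_tx, inbox_rx) = channel::<Echo>();
    thread::spawn(move || {
        for msg in inbox_rx {
            // With a capacity of one, this single send never blocks.
            let _ = msg.reply.send(msg.payload.to_uppercase());
        }
    });
    inbox_tx
}

/// Sends a request and blocks until the actor replies.
/// Returns `None` if the actor is gone or dropped the reply channel.
fn ask(actor: &Sender<Echo>, payload: &str) -> Option<String> {
    let (reply_tx, reply_rx) = sync_channel(1);
    let msg = Echo { payload: payload.to_string(), reply: reply_tx };
    actor.send(msg).ok()?;
    reply_rx.recv().ok()
}

fn main() {
    let actor = spawn_actor();
    println!("{:?}", ask(&actor, "dune"));
}
```

Because the reply channel travels inside the message, the actor does not need to know anything about who is asking.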

Serving requests - Tide

When I started this project in late 2020, Tide had the most straightforward and user-friendly API among the available options. I simply wanted a "facade" for turning HTTP requests into messages for the actors, which Tide is able to do well enough (see main.rs); notably, it does so without much cognitive burden or ceremony: the API seemed simple enough to use, unlike other options I found at the time.

I also considered warp but its filter chain + rejections model seemed like unneeded ceremony for what I had in mind. Some other issues regarding the public API (1, 2) further discouraged my interest in it.

A sqlx + refinery setup lets me work with the SQL dialect directly. I much prefer this kind of approach to any ORM API.

refinery handles migrations while sqlx is used for queries. I find this setup to be much more productive than trying to mentally translate SQL into ORM APIs.

Server test snapshotting - insta + flexi_logger

Testing is done by collecting the logs through flexi_logger, then snapshotting them with insta (see the snapshots directory).

The UI is implemented in React + Redux because that's what I am most comfortable with, as corroborated by my other projects and professional experience.

I had looked into other Rust-based frontend solutions such as Seed but I judged it wouldn't be as productive to use them since

- I have experience as a front-end developer using JS

- Their APIs were not mature at the time

Web UI tests - Cypress

Integration testing for the UI is done through Cypress.

While the server integration tests ensure the APIs are working, Cypress verifies that the API is being used properly through the front-end features.

If PostgreSQL is already running at localhost:5432 and the $USER role is already set up, then Docker is not needed; in that case it'll just use your existing service instance.

Otherwise, the Docker setup requires docker-compose and can be run with run db.

Requires the database to be up.

Start it with run server.

Run them with run integration_tests.

Because the testing infrastructure leverages lots of Unix-specific programs, the setup, as is, is assumed to work only on Linux. The required executables should already be available in your distribution:

bash

pstree

awk

head

tail

kill

pkill

flock

ss

Additionally, docker-compose should be installed for spawning test instances and coordinating resources between them. The purpose of these tools is explained in How testing is done.

Needs every dependency mentioned in Server integration tests, plus Node.js (see package.json). The tests can be run with node main.js (see main.js).

Entity refers to an entity in the traditional sense (ERM). For this implementation, entities map 1-to-1 with actors, i.e. the Book actor handles all messages related to the Book entity.

A Resource is simply the entity (which is the data representation) acting as a "controller" (which offers API functionality for a certain entity). This concept was popularized by the Rails framework.

A crate which primarily hosts the data-centric aspects of the application, hence the name "entities". The structures' (source) names follow a pattern dictated by the macros, as will be shown in the following sections.

The actor_msg_resources macro (source and usage) has the following roles:

- Wrap messages in a default structure which carries useful values along with the message's content (for instance, a reference to the database pool).

- Generate enum wrappers for all the message types.

- Define a OnceCell named after the actor's name in uppercase (e.g. BOOK for the Book actor). This cell will be initialized to an empty RwLock when the app is set up (source) and later replaced with a channel to the actors when they're spawned (source).
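The last role (a global cell that starts empty and is later filled with the actor's channel) might look roughly like the sketch below. It uses `std::sync::OnceLock` and `std::sync::mpsc` as stand-ins for the crates the project relies on; `BookMsg::Ping`, `init_registry`, `register_book_actor` and `book_channel` are hypothetical names, and the inbox capacity of 16 is arbitrary.

```rust
use std::sync::mpsc::{sync_channel, Receiver, SyncSender};
use std::sync::{OnceLock, RwLock};

/// Hypothetical message type for the Book actor.
#[derive(Debug, PartialEq)]
enum BookMsg {
    Ping,
}

/// Global cell named after the actor, holding an initially empty lock.
static BOOK: OnceLock<RwLock<Option<SyncSender<BookMsg>>>> = OnceLock::new();

/// App setup: initialize the cell with an empty slot.
fn init_registry() {
    BOOK.get_or_init(|| RwLock::new(None));
}

/// Called whenever the actor (re)starts: publish a fresh channel and
/// return the receiving end, which becomes the actor's inbox.
fn register_book_actor() -> Receiver<BookMsg> {
    let (tx, rx) = sync_channel(16);
    let slot = BOOK.get_or_init(|| RwLock::new(None));
    *slot.write().unwrap() = Some(tx);
    rx
}

/// Looks up the actor's current channel, if it has been registered.
fn book_channel() -> Option<SyncSender<BookMsg>> {
    BOOK.get()?.read().ok()?.clone()
}

fn main() {
    init_registry();
    let inbox = register_book_actor();
    book_channel().unwrap().send(BookMsg::Ping).unwrap();
    println!("{:?}", inbox.recv().unwrap());
}
```

Re-registering after a restart simply overwrites the slot, so senders that look the channel up again reach the new instance of the actor.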

By virtue of code generation, this macro enforces a predictable naming convention for messages. For instance, the following:

actor_msg_resources::generate!(User, [(Login, User)])

Specified as

actor_msg_resources::generate!(#ACTOR, [(#MESSAGE_VARIANT, #REPLY)])

Expands to

pub struct UserLoginMsg {

pub reply: crossbeam_channel::Sender<Option<crate::resources::ResponseData<User>>>,

pub payload: entities::UserLoginPayload,

pub db_pool: &'static sqlx::PgPool,

}

pub enum UserMsg {

Login(UserLoginMsg),

}

Clearly there's a trend in naming there: a UserMsg enum, with a Login variant, which wraps a UserLoginMsg. The payload field references UserLoginPayload, which is yet another conventionally-named struct from the entities crate.

Now that the messages' structures have been taken care of, the HTTP endpoints can be built.

The actor_request_handler macro (source) expands to a function which handles

- Parsing a request into a message

- Sending the message to its designated actor

- Waiting for the reply message

- Mounting the response body and returning it
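Written out by hand, those four steps could look roughly like the sketch below. This is not the macro's actual expansion: it uses plain `std` channels instead of Tide and crossbeam, the request body format (`email=<address>`) is invented, and the status codes returned by `login` are assumptions of this example.

```rust
use std::sync::mpsc::{channel, sync_channel, Sender, SyncSender};
use std::thread;

struct UserLoginPayload {
    email: String,
}

struct UserLoginMsg {
    payload: UserLoginPayload,
    reply: SyncSender<Option<String>>,
}

/// Step 1: parse the raw request (hypothetical body format).
fn extract_login(body: &str) -> Result<UserLoginPayload, String> {
    match body.strip_prefix("email=") {
        Some(email) if !email.is_empty() => Ok(UserLoginPayload { email: email.to_string() }),
        _ => Err("expected `email=<address>`".to_string()),
    }
}

/// Steps 2 to 4: send the message, wait for the reply, mount the response.
fn login(body: &str, actor: &Sender<UserLoginMsg>) -> (u16, String) {
    let payload = match extract_login(body) {
        Ok(payload) => payload,
        Err(reason) => return (400, reason),
    };
    let (reply_tx, reply_rx) = sync_channel(1);
    if actor.send(UserLoginMsg { payload, reply: reply_tx }).is_err() {
        return (500, "actor unavailable".to_string());
    }
    match reply_rx.recv() {
        Ok(Some(token)) => (200, token),
        Ok(None) => (404, "unknown user".to_string()),
        Err(_) => (500, "actor crashed before replying".to_string()),
    }
}

/// Stand-in actor which knows a single user.
fn spawn_user_actor() -> Sender<UserLoginMsg> {
    let (tx, rx) = channel::<UserLoginMsg>();
    thread::spawn(move || {
        for msg in rx {
            let known = msg.payload.email == "admin@example.com";
            let _ = msg.reply.send(known.then(|| "token-123".to_string()));
        }
    });
    tx
}

fn main() {
    let actor = spawn_user_actor();
    println!("{:?}", login("email=admin@example.com", &actor));
}
```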

The parser function's name is dictated by convention. Take the following macro invocation:

actor_request_handler::generate!({

name: login,

actor: User,

response_type: User,

tag: Login

});

Since it's named login, there should be an accompanying function extract_login to parse the HTTP request (example).

Parser errors, if any, are easily handled through Tide.

The function generated by this macro will be later used as an HTTP endpoint. Now that there's a way to extract the messages, an actor can be created for receiving and replying to them.

Given that Bastion's API for spawning actors is

children

.with_exec(move |ctx: BastionContext| async move {

// actor code

})

the first step for any given actor will be registering its own channel of communication. As mentioned in the previous section, its channel will be globally reachable through a OnceCell (note: reliance on such a mechanism means that redundancy cannot be achieved using this approach as it is currently implemented, although it is technically possible). Availability through the OnceCell makes this actor's channel discoverable, always, whenever the actor comes up (it might crash at some point and that's fine).

endpoint_actor expands to the repetitive code one would normally have to write by hand, which is unwrapping the enums and forwarding the payload (source) to the function which will process it.

As an example:

endpoint_actor::generate!({ actor: User }, {

Login: create_session,

});

Specified as

endpoint_actor::generate!({ actor: #ACTOR }, {

#MESSAGE_VARIANT: #MESSAGE_HANDLER,

...

});

The expansion defines an actor which will handle the HTTP requests parsed in request handlers. Now we should have everything needed for serving a response.
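For intuition, the kind of repetitive code being generated is roughly a loop over the actor's inbox with a `match` on the message enum. The sketch below is an approximation under stated assumptions, not the real expansion: it uses `std` channels, drops the `db_pool` and `payload` fields, and `create_session` is given a made-up body.

```rust
use std::sync::mpsc::{channel, sync_channel, Receiver, SyncSender};
use std::thread;

struct UserLoginMsg {
    email: String,
    reply: SyncSender<Option<String>>,
}

enum UserMsg {
    Login(UserLoginMsg),
}

/// Message handler. The real one would presumably query the database;
/// this placeholder only checks that the email looks like one.
fn create_session(msg: &UserLoginMsg) -> Option<String> {
    msg.email.contains('@').then(|| format!("session-for-{}", msg.email))
}

/// Actor body: unwrap each enum variant, forward the inner message to its
/// handler and send the result back through the message's reply channel.
fn run_user_actor(inbox: Receiver<UserMsg>) {
    for msg in inbox {
        match msg {
            UserMsg::Login(login) => {
                let reply = create_session(&login);
                let _ = login.reply.send(reply);
            }
        }
    }
}

fn main() {
    let (tx, rx) = channel();
    thread::spawn(move || run_user_actor(rx));
    let (reply_tx, reply_rx) = sync_channel(1);
    let msg = UserLoginMsg { email: "reader@example.com".to_string(), reply: reply_tx };
    if tx.send(UserMsg::Login(msg)).is_ok() {
        println!("{:?}", reply_rx.recv());
    }
}
```

Adding a message then amounts to one more enum variant and one more `match` arm, which is the part the `Variant: handler` pairs in the macro invocation stand for.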

To expand the API with a new endpoint, the following steps would be taken:

- Edit the messages module, where you'll either add another (#MESSAGE_VARIANT, #REPLY) tuple to one of the existing actor definitions or create a new one.

actor_msg_resources::generate!(

Book,

[

+ (Create, Book),

]

);

- Define new structs in the entities crate following the naming conventions explained before

+ pub struct BookCreatePayload {

+ pub title: String,

+ pub access_token: String,

+ }

- Create or use an existing resource for handling the new message.

- Define a new request handler with actor_request_handler

+ async fn extract_post(req: &mut Request<ServerState>) -> tide::Result<BookCreatePayload> {

+ // extract the payload

+ }

+ actor_request_handler::generate!(Config {

+ name: post,

+ actor: Book,

+ response_type: Book,

+ tag: Create

+ });

Notice the convention between name: post and extract_post.

- Define a new message handler in the body of endpoint_actor

+ pub async fn create(msg: &BookCreateMsg) -> Result<ResponseData<Book>, sqlx::Error> {

+ // create the book

+ }

endpoint_actor::generate!({ actor: Book }, {

GetByTitle: get_by_title,

LeaseByTitle: lease_by_id,

EndLoanByTitle: end_loan_by_title,

+ Create: create,

});

- Create the route

+ server

+ .at(format!(book_route!(), ":title").as_str())

+ .post(resources::book::post);

- Connect to the database

- Run migrations

- Define the web servers' routes

- Initialize the supervision tree

There are many resources describing the advantages of actor models (e.g. the Akka guide goes in-depth on the whole topic), but I hadn't heard of the following highlights before working on this project:

- Message-first: the actor model encourages one to model around simple plain old structures which can be sent easily across threads, as opposed to massive objects which host a lot of context and might hold dependencies to non-thread-safe elements.

- Logging: since execution is driven through messages, it's extremely easy to capture the flow of execution at the message handler instead of remembering to add custom log directives at arbitrary points in the code.

- Inspectable: even if a component suddenly breaks before it's replied to, the lingering message will still likely offer some insight given that execution is driven by the data within them, instead of being arbitrarily spread through the execution of the main thread, which is liable to failure.
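The logging point above can be illustrated with a single choke point that records every incoming message and outgoing reply. This is a simplified sketch: the project logs through flexi_logger, whereas here the "log" is just a `Vec<String>`, and the `BookMsg` fields and handler results are made up for the example.

```rust
/// Hypothetical messages; deriving `Debug` is what makes them loggable.
#[derive(Debug)]
enum BookMsg {
    GetByTitle { title: String },
    Create { title: String },
}

/// Single choke point: every message and every reply is logged here, so the
/// individual handlers need no logging statements of their own.
fn handle_logged(msg: &BookMsg, log: &mut Vec<String>) -> Result<String, String> {
    log.push(format!("received: {:?}", msg));
    let result = match msg {
        BookMsg::GetByTitle { title } if title.is_empty() => Err("empty title".to_string()),
        BookMsg::GetByTitle { title } => Ok(format!("found {}", title)),
        BookMsg::Create { title } => Ok(format!("created {}", title)),
    };
    log.push(format!("replied: {:?}", result));
    result
}

fn main() {
    let mut log = Vec::new();
    let _ = handle_logged(&BookMsg::Create { title: "Dune".to_string() }, &mut log);
    let _ = handle_logged(&BookMsg::GetByTitle { title: String::new() }, &mut log);
    for line in &log {
        println!("{}", line);
    }
}
```

The collected lines form a complete message history, which is the kind of output that lends itself to snapshot testing.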

Resilience is the main selling point for wanting to model an application with actors and supervisors. Having a fault-tolerant runtime motivates the use of unwrap in places where you are expecting some invariant to hold, because doing so will not crash your whole app: only the actor will crash, and it will then be recreated by the supervisor. This allows for avoiding "defensive programming" against errors: instead, let it crash and recover itself; spend development time on testing instead of trying to defensively architect around unexpected failures at runtime.

The Server integration tests section had a list of all the Linux utilities needed. How does it all come together?

It starts from the Bash script, run. A command may have dependencies (example); for instance, when logging is enabled, the logging folder has to be created before the program is run. The Bash script therefore serves as a wrapper and a general way to configure and set up all the programs it can run.

Integration tests need both a clean database and a fresh server instance in order to run; accordingly, both processes need open ports to bind to, which is where ss comes in handy for figuring out which ports are currently in use.

For the database, a dockerized PostgreSQL instance is spawned specifically for tests with run test_db. It's useful to have this dedicated container in order to avoid accumulating test databases in the actual work instance, plus it also means that the volume can be completely discarded when the container is finished. The port being used will be written to $TEST_DB_PORT_FILE, a file which will be automatically read when the tests are run. The databases used for integration tests will, therefore, all be created in this specific container.

To ensure exclusive acquisition of system-level resources, flock is used as a synchronization tool so that two different test instances do not try to bind to the same port. Ensuring exclusive port acquisition means that tests can be run independently in parallel. As mentioned in the advantages, it's pretty easy to know exactly what's being executed by snapshotting the logs (see the snapshot directory).
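The idea of exclusive port acquisition can be illustrated in Rust as well. Note that this sketch swaps in a different technique from the one the project uses: instead of flock and ss, it claims a port by atomically creating a marker file (`create_new` fails if the file already exists). The function names, the marker file naming and the candidate ports are all assumptions of this example.

```rust
use std::fs::{self, OpenOptions};
use std::io::{self, ErrorKind};
use std::path::{Path, PathBuf};

/// Path of the marker file which represents a claim on `port`.
fn marker(dir: &Path, port: u16) -> PathBuf {
    dir.join(format!("port-{}.lock", port))
}

/// Claims the first candidate port nobody else has claimed yet.
/// `create_new` is atomic: exactly one caller can create a given marker.
fn claim_first_free(dir: &Path, candidates: &[u16]) -> io::Result<Option<u16>> {
    for &port in candidates {
        match OpenOptions::new().write(true).create_new(true).open(marker(dir, port)) {
            Ok(_) => return Ok(Some(port)),
            Err(e) if e.kind() == ErrorKind::AlreadyExists => continue,
            Err(e) => return Err(e),
        }
    }
    Ok(None)
}

/// Releases a previously claimed port (teardown).
fn release(dir: &Path, port: u16) -> io::Result<()> {
    fs::remove_file(marker(dir, port))
}

fn main() -> io::Result<()> {
    let dir = std::env::temp_dir().join(format!("port-claims-demo-{}", std::process::id()));
    fs::create_dir_all(&dir)?;
    let first = claim_first_free(&dir, &[8001, 8002])?;
    let second = claim_first_free(&dir, &[8001, 8002])?;
    println!("{:?} {:?}", first, second);
    for port in first.into_iter().chain(second) {
        release(&dir, port)?;
    }
    fs::remove_dir_all(&dir)
}
```

Unlike flock, a marker file is not released automatically if the owning process dies, so this variant depends on teardown running.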

Finally, the remaining utilities come in during the teardown phase. Because execution is driven by the Bash script, simply killing the process would only kill the "wrapper", but not all the processes spawned indirectly. pstree is used for finding the server's PID underneath the wrapper, which is then terminated with kill.

This project purposefully does not aim to become a production-ready application, but if it did, we'd be interested in implementing the features from this section.

- Authentication does not involve a password, which would not be acceptable in production.

- Title is used as primary key for books, but of course this wouldn't be acceptable normally.

- Books can only be lent for a full week, but the timeline could be customizable.

- Books are automatically considered available when their lease time expires, thus the current system doesn't account for lateness.

- See a user's history of borrowing.

- See a book's history of borrowing.

- Plotted metrics (% of books late, % probability of it being late, etc) in some sort of Admin dashboard.

- Searching and filtering books in the UI.
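The current lease rule mentioned in the list above (a fixed one-week lease, after which the book counts as available again) can be expressed in a few lines. This is a minimal sketch with hypothetical names; in particular, treating the exact expiry instant as "available" is an assumption.

```rust
use std::time::{Duration, SystemTime, UNIX_EPOCH};

/// Books can only be lent for a full week.
const LEASE_LENGTH: Duration = Duration::from_secs(7 * 24 * 60 * 60);

/// A book is available if it was never lent, or if its lease has expired.
/// Lateness is not modeled: expiry alone makes the book available again.
fn is_available(lease_started_at: Option<SystemTime>, now: SystemTime) -> bool {
    match lease_started_at {
        None => true,
        Some(start) => now >= start + LEASE_LENGTH,
    }
}

fn main() {
    let start = UNIX_EPOCH + Duration::from_secs(1_000);
    let six_days_later = start + Duration::from_secs(6 * 24 * 60 * 60);
    println!("{}", is_available(Some(start), six_days_later));
    println!("{}", is_available(Some(start), start + LEASE_LENGTH));
}
```

Making the lease length customizable would mostly mean replacing the constant with a per-lease value.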

Currently tokens are issued once and never expire.

Currently we have the single access_token field in the User entity, which wouldn't scale well with multiple devices.

Currently errors are logged to the file system, but not reported in any manner to some provider in the cloud.

The following are self-explanatory:

- PostgreSQL setup has only been proven to work without a password.

- Lacking CI Setup.

- No API specification (e.g. OpenAPI).

- No verification of the user profile's payload as received from the backend.

- Cached profile information in the front-end is never invalidated or degraded.