Repository created for GSoC work on grasping and pose estimation.

Project Title: DNNs for precise manipulation of household objects.

- Clone the repository recursively:

      git clone --recurse-submodules https://github.com/robocomp/grasping.git

- Follow READMEs in each sub-folder for installation and usage instructions.

- `components`: contains all RoboComp interfaces and components.
- `data-collector`: contains the code for custom data collection using CoppeliaSim and PyRep.
- `rgb-based-pose-estimation`: contains the code for the Segmentation-driven 6D Object Pose Estimation neural network.
- `rgbd-based-pose-estimation`: contains the code for the PVN3D neural network as a git submodule.

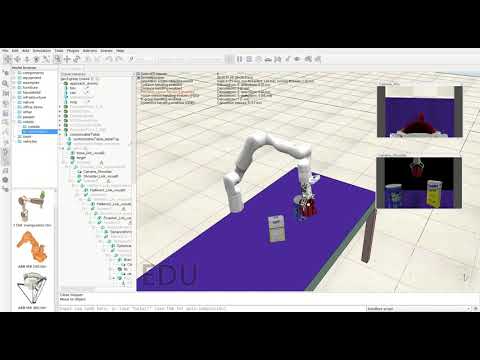

As shown in the figure, the component workflow is as follows:

- The `viriatoPyrep` component streams the RGBD signal from the CoppeliaSim simulator using the PyRep API and publishes it to the shared graph through the `viriatoDSR` component.
- The `graspDSR` component reads the RGBD signal from the shared graph and passes it to the `objectPoseEstimation` component.
- The `objectPoseEstimation` component then performs pose estimation using a DNN and returns the estimated poses.
- The `graspDSR` component injects the estimated poses into the shared graph and progressively plans dummy targets for the arm to reach the target object.
- The `viriatoDSR` component then reads the dummy target poses from the shared graph and passes them to the `viriatoPyrep` component.
- Finally, the `viriatoPyrep` component uses the poses generated by `graspDSR` to progressively plan a successful grasp on the object.
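
The progressive planning of dummy targets described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that the dummy targets are evenly spaced positions on a straight line between the arm tip and the estimated object position. The function name `plan_dummy_targets` and the `max_step` parameter are hypothetical and are not taken from `graspDSR`.

```python
import math
from typing import List, Sequence, Tuple

Position = Tuple[float, ...]


def plan_dummy_targets(
    tip: Sequence[float], goal: Sequence[float], max_step: float = 0.05
) -> List[Position]:
    """Return intermediate positions that lead from the arm tip to the goal.

    Hypothetical helper: consecutive waypoints are spaced evenly along the
    straight line from `tip` to `goal`, no more than `max_step` apart (up to
    floating-point rounding), and the last waypoint is the goal itself.
    """
    if max_step <= 0:
        raise ValueError("max_step must be positive")

    # Straight-line distance between the current tip and the object position.
    distance = math.dist(tip, goal)

    # Number of segments needed so that no segment exceeds max_step.
    steps = max(1, math.ceil(distance / max_step))

    # Intermediate waypoints by linear interpolation (goal excluded here).
    targets = [
        tuple(t + (g - t) * i / steps for t, g in zip(tip, goal))
        for i in range(1, steps)
    ]

    # Append the goal explicitly so the final target is exact.
    targets.append(tuple(float(g) for g in goal))
    return targets


if __name__ == "__main__":
    for target in plan_dummy_targets((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), max_step=0.25):
        print(target)
```

In this sketch each returned position would be written to the shared graph as the next dummy target. Orientation handling and collision checks are left out.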

For more information on DSR system integration and usage, refer to DSR-INTEGRATION.md.

Our system uses the PyRep API to call Lua scripts embedded in the arm for fast and precise grasping. The poses passed to these scripts are estimated by DNNs from RGB(D) data collected by the shoulder camera.
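
As a rough illustration of calling an embedded Lua script, the sketch below packs a pose into a flat float list and forwards it through a PyRep-style `script_call`. It assumes that the `pr` object exposes `script_call(function_name_at_script_name, script_handle_or_type, ints, floats, strings, bytes)`, as PyRep does to our knowledge; check this against the installed version. The Lua function name `grasp@arm`, the pose layout and both helper names are hypothetical and are not taken from this repository.

```python
from typing import Any, List, Sequence


def pose_to_floats(position: Sequence[float], quaternion: Sequence[float]) -> List[float]:
    """Flatten a position (x, y, z) and a quaternion (qx, qy, qz, qw) into 7 floats."""
    if len(position) != 3 or len(quaternion) != 4:
        raise ValueError("expected a 3D position and a 4D quaternion")
    return [float(v) for v in (*position, *quaternion)]


def send_grasp_pose(
    pr: Any,
    script_type: int,
    position: Sequence[float],
    quaternion: Sequence[float],
    function: str = "grasp@arm",  # hypothetical "<function>@<object>" name
) -> Any:
    """Forward a pose to a Lua function embedded in the scene.

    `pr` is assumed to be a running PyRep instance (or any object with a
    compatible `script_call` method). `script_type` is the CoppeliaSim
    script type or handle of the script that owns the Lua function.
    """
    return pr.script_call(
        function,
        script_type,
        ints=(),
        floats=pose_to_floats(position, quaternion),
        strings=(),
        bytes="",
    )
```

With a running simulation, `pr` would be the `PyRep` instance that launched the scene, and `script_type` would typically be the child-script constant exposed by PyRep's `sim` backend module. Passing `pr` in as an argument keeps the helper usable with a stub object when no simulator is available.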

This demo verifies the arm's path planning using DNN-estimated poses.

This demo shows the arm grasping and manipulating a specific object among several objects in the scene, using DNN-estimated poses.