-

Notifications

You must be signed in to change notification settings - Fork 477

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Stroke caps #32

Stroke caps #32

Conversation

* Pulling in stroke-cap data from skishore/makemeahanzi#32

|

I updated Hanzi Writer to use this data. You can see all the stroke-capped characters here: https://chanind.github.io/hanzi-writer-data/ |

|

This is a really nice approach. Awesome work. Sorry that I haven't taken a look at it until now! I have a tendency to get overly detailed with code reviews at work which I don't want to bring here, so let me just make a few high-level comments. You tell me how feasible these things are - if they will take too much work, then I am happy to merge these commits without the changes.

Overall, my inclination is, let's merge this now and I can follow up with some of these changes if they are actually needed. What do you think? |

|

Ah I didn't know about the tools branch! Should I reopen this PR against that branch? Using shrink wrap and removing babel and the other requirements makes sense. Getting rid of the commander stuff should be no problem too. Ah yeah looking for sets of points in 2 strokes instead of looking for "L" should work too! Whichever is easiest. Yeah, the Nan stuff is to handle parallel lines. I'll add a comment about that! If it's easier for you to merge and make these changes yourself go for it! |

|

Let's merge this now. The way you've set it up (in a separate directory so the only dependency is the format of the data) will make refactoring possible at any point. Do you mind rebasing onto master? |

|

It looks like this is up to date with master, so it should be good to go |

|

I rebase rather than merging to keep a linear history of commits, so I did that offline. Merged! About 1% of characters actually change when I re-run the script. Any idea why it would be non-deterministic in that way? Getting this into the tool branch is well-worth it. There are two huge advantages of doing it that way:

It will take a little work to integrate this code into the tool branch properly, but here's a very quick-and-dirty first integration: the tool server can simply write a fixed "graphics.txt" temp file for the given character, run the script, and read it back in. I'll get that working over the weekend so we can have really nice SVG outputs, then update the README! |

|

Thanks for the feedback, cleanup, and merging!

I suspect it's from the rounding done in fixStrokes here. This might mean that after the script first runs, there's a few bridges that just barely didn't meet the thresholds for correction before but do now. I'll double-check to see if this is what's going on. Integrating into the tools branch sounds like the right thing to do. Let me know if there's more I can do to help! |

|

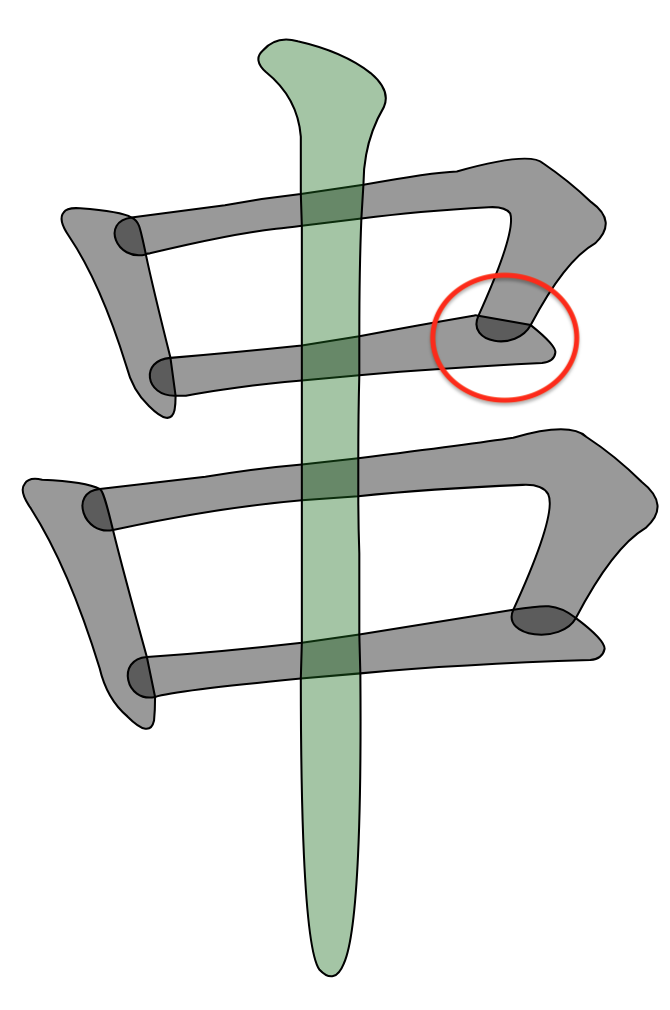

I looked into the non-deterministic running issues in more depth. It looks like the reason isn't due to rounding, but is due to the way the shape of the strokes is estimated by using 1000 points along the outline of the path. After the first run of this script, the shape of strokes change slightly due to the stroke caps being added, and as a result these estimation points also shift slightly. These points are used to calculate distances and cosine similarity, so these calculations change value slightly. The bridges that are modified are just barely above the cosine similarity threshold on the first run, and on the second run are just barely below it, just due to the noise of where the estimation points happen to be. It looks like the strokes being modified should in fact have been modified in the first run. An example of one of these strokes is shown below: This could be fixed by using more estimation points (maybe 2000 - 5000 or so?), and by increasing |

This PR is an attempt at addressing #28. This PR adds stroke caps by the following algorithm:

# # L # #strings in the stroke are possible clipping pointsd = 1.4 x <dist to middle clipping point>to make sure the bezier curve will extend far enough to cover up the whole stroke.This PR includes the updated graphics.txt and a

stroke_capsfolder with the code to update thegraphics.txtfile. You can run the update script withcd stroke_caps; npm install; node updateGraphicsTxt.js -v --input ../graphics.txt. The code is a bit of a mess - I can clean it up some more if needed.9346 / 9510 characters were modified. Below are some before / after examples: