
Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

Merge branch 'latest' into 3618-docs-rfc-unite-some-get-started-pages-into-one

Showing 86 changed files with 2,677 additions and 1,592 deletions.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,72 @@ | ||

| --- | ||

| title: Integrate <third-party tool name> with Timescale Cloud | ||

| excerpt: SEO-friendly explanation of why the user will do it | ||

| keywords: [<third-party tool name>] | ||

| --- | ||

|

|

||

| import IntegrationPrereqs from "versionContent/_partials/_integration-prereqs.mdx"; | ||

|

|

||

| # Integrate <third-party tool name> with $CLOUD_LONG | ||

|

|

||

| // Explain what the third-party tool is and what it does in their own words, link to product docs. | ||

|

|

||

| // Provide context for the integration steps, for example, if an additional connector is used. | ||

|

|

||

| // See https://docs.timescale.com/use-timescale/latest/integrations/grafana/ for an example. | ||

|

|

||

| ## Prerequisites | ||

|

|

||

| <IntegrationPrereqs /> | ||

|

|

||

| - Install <third-party tool name> // Mention both server and cloud versions, if present. Link to installation pages. | ||

|

|

||

| ## Connect your $SERVICE_LONG | ||

|

|

||

| To connect to $CLOUD_LONG: | ||

|

|

||

| <Procedure> | ||

|

|

||

| 1. **Log in to <third-party tool name>** | ||

|

|

||

| // Sub-steps, code, screenshots if necessary. | ||

|

|

||

| 1. **Configure the connection** | ||

|

|

||

| // Sub-steps, code, screenshots if necessary. Link to [Find your connection details][connection-info]. | ||

|

|

||

| ... | ||

|

|

||

| 1. **Test the connection** | ||

|

|

||

| // Sub-steps, code, screenshots if necessary. | ||

|

|

||

| </Procedure> | ||

|

|

||

| ## Test the integration with $CLOUD_LONG | ||

|

|

||

| // Add only if there is a simple way to illustrate how the two solutions work together. | ||

|

|

||

| Take the following steps to <whatever the tool must do in conjunction with Timescale Cloud>: | ||

|

|

||

| <Procedure> | ||

|

|

||

| // Steps to test out the integration using a defined dataset. | ||

|

|

||

| </Procedure> | ||

|

|

||

| You have successfully integrated <third-party tool name> with $CLOUD_LONG. | ||

|

|

||

| [connection-info]: /use-timescale/:currentVersion:/integrations/find-connection-details/ | ||

|

|

||

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,20 @@ | ||

| name: Deploy redirects to lambda | ||

| on: | ||

| push: | ||

| paths: | ||

| - lambda/** | ||

|

|

||

| permissions: {} | ||

|

|

||

| jobs: | ||

| trigger: | ||

| name: Update changed lambda redirects | ||

| runs-on: ubuntu-latest | ||

| steps: | ||

| - name: Repository Dispatch | ||

| uses: peter-evans/repository-dispatch@26b39ed245ab8f31526069329e112ab2fb224588 | ||

| with: | ||

| token: ${{ secrets.ORG_AUTOMATION_TOKEN }} | ||

| repository: timescale/web-documentation | ||

| event-type: build-lambda | ||

| client-payload: '{ "branch": "${{ github.ref_name }}" }' |
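The workflow above delegates to the `peter-evans/repository-dispatch` action, which triggers a `repository_dispatch` event in the target repository. As a rough sketch of the equivalent call, the following Python builds (but does not send) a request to GitHub's `POST /repos/{owner}/{repo}/dispatches` REST endpoint. The function name `build_dispatch_request` and the `<token>` placeholder are illustrative assumptions, not part of this commit.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"


def build_dispatch_request(token: str, repository: str, event_type: str, branch: str) -> urllib.request.Request:
    """Build, without sending, the POST request for a repository_dispatch event."""
    body = {
        "event_type": event_type,
        # Mirrors the workflow's client-payload: { "branch": "<ref name>" }
        "client_payload": {"branch": branch},
    }
    return urllib.request.Request(
        url=f"{GITHUB_API}/repos/{repository}/dispatches",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # "<token>" is a placeholder; the workflow reads secrets.ORG_AUTOMATION_TOKEN instead.
    req = build_dispatch_request("<token>", "timescale/web-documentation", "build-lambda", "latest")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

A workflow in the target repository would then need an `on: repository_dispatch` trigger with `types: [build-lambda]` to react to the event.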

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,133 @@ | ||

| <Procedure> | ||

|

|

||

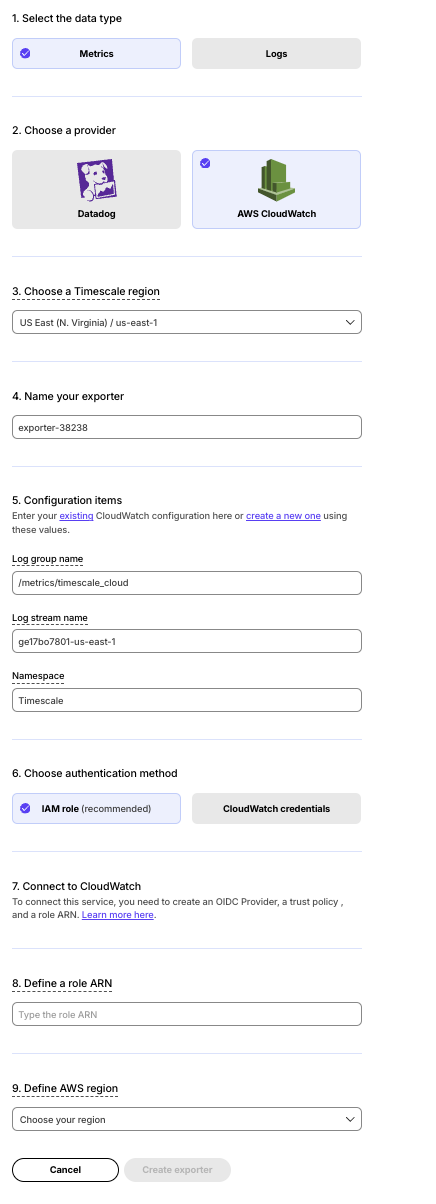

| 1. **In $CONSOLE, open [Integrations][console-integrations]** | ||

| 1. **Click `New exporter`** | ||

| 1. **Select the data type and specify `AWS CloudWatch` for provider** | ||

|

|

||

|  | ||

|

|

||

| 1. **Provide your AWS CloudWatch configuration** | ||

|

|

||

| - The AWS region must be the same for your $CLOUD_LONG exporter and AWS CloudWatch Log group. | ||

| - The exporter name appears in Cloud console. Best practice is to make this name easy to understand. | ||

| - For CloudWatch credentials, either use an [existing CloudWatch Log group][console-cloudwatch-configuration] | ||

| or [create a new one][console-cloudwatch-create-group]. If you're uncertain, use | ||

| the default values. For more information, see [Working with log groups and log streams][cloudwatch-log-naming]. | ||

|

|

||

| 1. **Choose the authentication method to use for the exporter** | ||

|

|

||

| <Tabs label="Authentication methods"> | ||

|

|

||

| <Tab title="IAM role"> | ||

|

|

||

| <Procedure> | ||

|

|

||

| $SERVICE_LONGs run in AWS. Best practice is to use [IAM Roles for Service Accounts (IRSA)][irsa] to | ||

| manage access between your $SERVICE_SHORTs and your AWS resources. | ||

|

|

||

| Create the IRSA role following this [AWS blog post][cross-account-iam-roles]. | ||

|

|

||

| When you create the IAM OIDC provider: | ||

| - Set the URL to the [region where the exporter is being created][reference]. | ||

| - Add the role as a trusted entity. | ||

|

|

||

| The following example shows a correctly configured IRSA role: | ||

|

|

||

| - Permission Policy: | ||

|

|

||

| ```json | ||

| { | ||

| "Version": "2012-10-17", | ||

| "Statement": [ | ||

| { | ||

| "Effect": "Allow", | ||

| "Action": [ | ||

| "logs:PutLogEvents", | ||

| "logs:CreateLogGroup", | ||

| "logs:CreateLogStream", | ||

| "logs:DescribeLogStreams", | ||

| "logs:DescribeLogGroups", | ||

| "logs:PutRetentionPolicy", | ||

| "xray:PutTraceSegments", | ||

| "xray:PutTelemetryRecords", | ||

| "xray:GetSamplingRules", | ||

| "xray:GetSamplingTargets", | ||

| "xray:GetSamplingStatisticSummaries", | ||

| "ssm:GetParameters" | ||

| ], | ||

| "Resource": "*" | ||

| } | ||

| ] | ||

| } | ||

| ``` | ||

| - Role with a Trust Policy: | ||

|

|

||

| ```json | ||

| { | ||

| "Version": "2012-10-17", | ||

| "Statement": [ | ||

| { | ||

| "Effect": "Allow", | ||

| "Principal": { | ||

| "Federated": "arn:aws:iam::123456789012:oidc-provider/irsa-oidc-discovery-prod.s3.us-east-1.amazonaws.com" | ||

| }, | ||

| "Action": "sts:AssumeRoleWithWebIdentity", | ||

| "Condition": { | ||

| "StringEquals": { | ||

| "irsa-oidc-discovery-prod.s3.us-east-1.amazonaws.com:aud": "sts.amazonaws.com" | ||

| } | ||

| } | ||

| }, | ||

| { | ||

| "Sid": "Statement1", | ||

| "Effect": "Allow", | ||

| "Principal": { | ||

| "AWS": "arn:aws:iam::123456789012:role/my-exporter-role" | ||

| }, | ||

| "Action": "sts:AssumeRole" | ||

| } | ||

| ] | ||

| } | ||

| ``` | ||

|

|

||

| </Procedure> | ||

|

|

||

| </Tab> | ||

|

|

||

| <Tab title="CloudWatch credentials"> | ||

|

|

||

| <Procedure> | ||

|

|

||

| When you use CloudWatch credentials, you link your $SERVICE_LONG with an Identity and Access Management (IAM) | ||

| user whose access is restricted to CloudWatch: | ||

|

|

||

| 1. Retrieve the user information from [IAM > Users in AWS console][list-iam-users]. | ||

|

|

||

| If you do not have an AWS user with access restricted to CloudWatch only, | ||

| [create one][create-an-iam-user]. | ||

| For more information, see [Creating IAM users (console)][aws-access-keys]. | ||

|

|

||

| 1. Enter the credentials for the AWS IAM user. | ||

|

|

||

| AWS keys give access to your AWS services. To keep your AWS account secure, restrict users to the minimum required permissions and always store your keys in a safe location. To avoid handling keys altogether, use the IAM role authentication method. | ||

|

|

||

| </Procedure> | ||

|

|

||

| </Tab> | ||

|

|

||

| </Tabs> | ||

|

|

||

| 1. Select the AWS Region your CloudWatch services run in, then click `Create exporter`. | ||

|

|

||

| </Procedure> | ||

|

|

||

| [console-integrations]: https://console.cloud.timescale.com/dashboard/integrations | ||

| [console-cloudwatch-configuration]: https://console.aws.amazon.com/cloudwatch/home#logsV2:log-groups | ||

| [console-cloudwatch-create-group]: https://console.aws.amazon.com/cloudwatch/home#logsV2:log-groups/create-log-group | ||

| [cloudwatch-log-naming]: https://docs.aws.amazon.com/AmazonCloudWatch/latest/logs/Working-with-log-groups-and-streams.html | ||

| [cross-account-iam-roles]: https://aws.amazon.com/blogs/containers/cross-account-iam-roles-for-kubernetes-service-accounts/ | ||

| [reference]: #reference | ||

| [list-iam-users]: https://console.aws.amazon.com/iam/home#/users | ||

| [create-an-iam-user]: https://console.aws.amazon.com/iam/home#/users/create | ||

| [aws-access-keys]: https://docs.aws.amazon.com/IAM/latest/UserGuide/id_users_create.html#id_users_create_console | ||

| [irsa]: https://aws.amazon.com/blogs/opensource/introducing-fine-grained-iam-roles-service-accounts/ |
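The federated statement of the IRSA trust policy in the `IAM role` tab follows a fixed pattern: the OIDC provider host appears both in the `Federated` ARN and in the `aud` condition key. A minimal Python sketch that generates that statement is shown here. The function name `build_irsa_trust_policy` is hypothetical, the account ID in the usage lines is a placeholder, and the sketch covers only the `sts:AssumeRoleWithWebIdentity` statement, not the additional `sts:AssumeRole` statement in the example above.

```python
import json
import re


def build_irsa_trust_policy(account_id: str, oidc_host: str, audience: str = "sts.amazonaws.com") -> dict:
    """Return a trust policy for a role assumed through an IAM OIDC provider."""
    # AWS account IDs are 12-digit numbers; reject anything else early.
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("AWS account IDs are 12-digit numbers")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_host}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                # The condition key is the OIDC provider host followed by ":aud".
                "Condition": {"StringEquals": {f"{oidc_host}:aud": audience}},
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder account ID; the OIDC host is the us-east-1 value from the example above.
    policy = build_irsa_trust_policy(
        "123456789012",
        "irsa-oidc-discovery-prod.s3.us-east-1.amazonaws.com",
    )
    print(json.dumps(policy, indent=2))
```

Generating the policy from one `oidc_host` value keeps the ARN and the condition key in sync, which is the part that is easy to get wrong when editing the JSON by hand.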

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,17 @@ | ||

| <Procedure> | ||

|

|

||

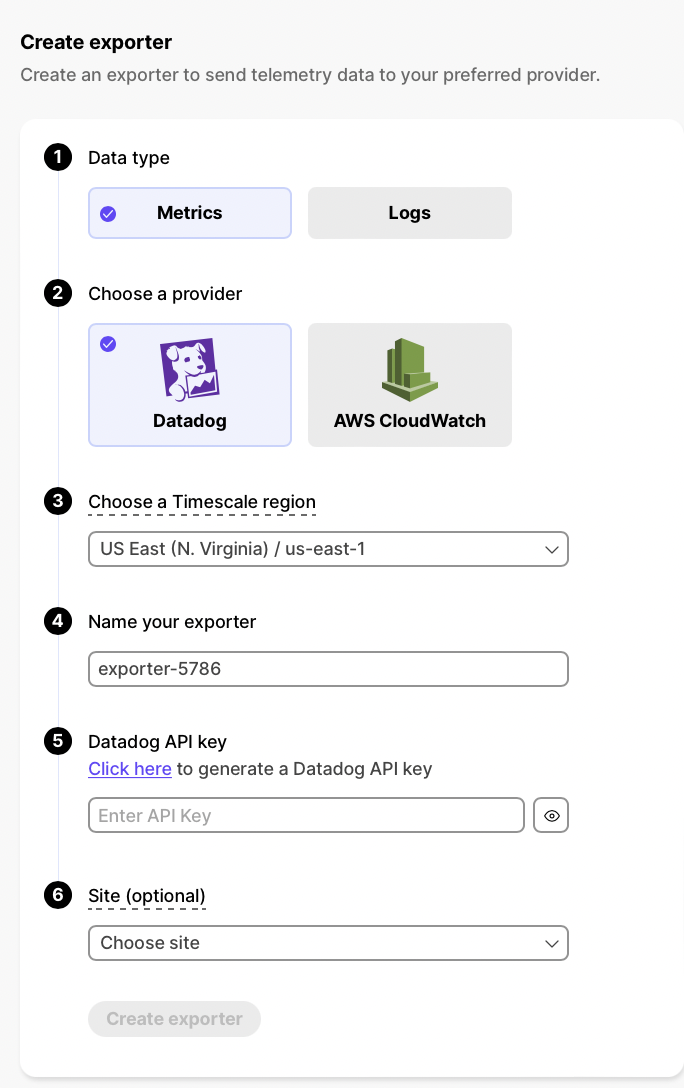

| 1. **In $CONSOLE, open [Integrations][console-integrations]** | ||

| 1. **Click `New exporter`** | ||

| 1. **Select `Metrics` for `Data type` and `Datadog` for provider** | ||

|

|

||

|  | ||

|

|

||

| 1. **Choose your AWS region and provide the API key** | ||

|

|

||

| The AWS region must be the same for your $CLOUD_LONG exporter and the Datadog provider. | ||

|

|

||

| 1. **Set `Site` to your Datadog region, then click `Create exporter`** | ||

|

|

||

| </Procedure> | ||

|

|

||

| [console-integrations]: https://console.cloud.timescale.com/dashboard/integrations |
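Before you paste the API key into the exporter form, it can help to confirm that the key belongs to the Datadog site you plan to select. The following Python sketch builds (but does not send) a request to Datadog's key validation endpoint, `GET /api/v1/validate`, on the API host for a given site. The function name `build_validate_request` is hypothetical, and the assumption that the API host is `api.<site>` should be checked against the Datadog documentation for your site.

```python
import urllib.request


def build_validate_request(api_key: str, site: str = "datadoghq.com") -> urllib.request.Request:
    """Build, without sending, a request that checks a Datadog API key against a site."""
    if not site or "/" in site:
        raise ValueError("site must be a bare domain such as 'datadoghq.com'")
    return urllib.request.Request(
        # Assumption: the API host for a site is "api." followed by the site domain.
        url=f"https://api.{site}/api/v1/validate",
        method="GET",
        headers={"DD-API-KEY": api_key, "Accept": "application/json"},
    )


if __name__ == "__main__":
    # "<api-key>" is a placeholder; "datadoghq.eu" stands in for your own Datadog site.
    req = build_validate_request("<api-key>", site="datadoghq.eu")
    print(req.get_method(), req.full_url)
```

Sending the request with `urllib.request.urlopen(req)` raises an HTTP error if the key is not valid for that site.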

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,5 @@ | ||

| Before integrating: | ||

|

|

||

| * Create a [target $SERVICE_LONG][create-service]. | ||

|

|

||

| [create-service]: /getting-started/:currentVersion:/services/ |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,9 @@ | ||

| Before integrating: | ||

|

|

||

| * Create a [target $SERVICE_LONG][create-service]. You need [your connection details][connection-info] to follow this procedure. | ||

|

|

||

| This procedure also works for [self-hosted $TIMESCALE_DB][enable-timescaledb]. | ||

|

|

||

| [create-service]: /getting-started/:currentVersion:/services/ | ||

| [enable-timescaledb]: /self-hosted/:currentVersion:/install/ | ||

| [connection-info]: /use-timescale/:currentVersion:/integrations/find-connection-details/ |
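Many third-party tools ask for the connection details as a single PostgreSQL connection URI. As a minimal sketch, assuming the details consist of a host, port, database name, user name, and password, the following Python assembles such a URI and percent-encodes the credentials. The function name `build_connection_uri` and all values in the usage lines are hypothetical.

```python
from urllib.parse import quote


def build_connection_uri(host: str, port: int, dbname: str, user: str, password: str,
                         sslmode: str = "require") -> str:
    """Assemble a PostgreSQL connection URI, percent-encoding user, password, and database."""
    return (
        f"postgres://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(dbname, safe='')}?sslmode={sslmode}"
    )


if __name__ == "__main__":
    # All values below are placeholders, not real connection details.
    print(build_connection_uri("example-host.example.com", 5432, "tsdb", "tsdbadmin", "p@ss/word"))
```

Encoding the password matters because characters such as `@` and `/` would otherwise be read as URI delimiters.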
