-

-

Notifications

You must be signed in to change notification settings - Fork 16.5k

Train Custom Data

📚 This guide explains how to train your own custom dataset with YOLOv5 🚀. UPDATED 17 Novemeber 2022.

Use this guide with the YOLOv5 Custom Training Notebook.

Clone repo and install requirements.txt in a Python>=3.7.0 environment, including PyTorch>=1.7. Models and datasets download automatically from the latest YOLOv5 release.

git clone https://github.com/ultralytics/yolov5 # clone

cd yolov5

pip install -r requirements.txt # install

Creating a custom model to detect your objects is an iterative process of collecting and organizing images, labeling your objects of interest, training a model, deploying it into the wild to make predictions, and then using that deployed model to collect examples of edge cases to repeat and improve.

YOLOv5 models must be trained on labelled data in order to learn classes of objects in that data. There are two options for creating your dataset before you start training:

Use Roboflow to label, prepare, and host your custom data automatically in YOLO format 🚀 NEW (click to expand)

Your model will learn by example. Training on images similar to the ones it will see in the wild is of the utmost importance. Ideally, you will collect a wide variety of images from the same configuration (camera, angle, lighting, etc) as you will ultimately deploy your project.

If this is not possible, you can start from a public dataset to train your initial model and then sample images from the wild during inference to improve your dataset and model iteratively.

Once you have collected images, you will need to annotate the objects of interest to create a ground truth for your model to learn from.

Roboflow Annotate is a simple web-based tool for managing and labeling your images with your team and exporting them in YOLOv5's annotation format.

Whether you label your images with Roboflow or not, you can use it to convert your dataset into YOLO format, create a YOLOv5 YAML configuration file, and host it for importing into your training script.

Create a free Roboflow account

and upload your dataset to a Public workspace, label any unannotated images,

then generate and export a version of your dataset in YOLOv5 Pytorch format.

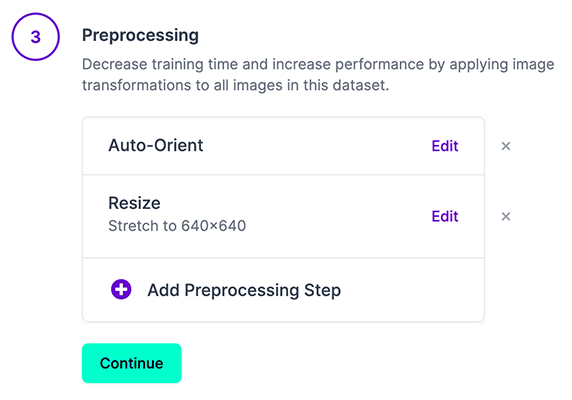

Note: YOLOv5 does online augmentation during training, so we do not recommend applying any augmentation steps in Roboflow for training with YOLOv5. But we recommend applying the following preprocessing steps:

- Auto-Orient - to strip EXIF orientation from your images.

- Resize (Stretch) - to the square input size of your model (640x640 is the YOLOv5 default).

Generating a version will give you a point in time snapshot of your dataset so you can always go back and compare your future model training runs against it, even if you add more images or change its configuration later.

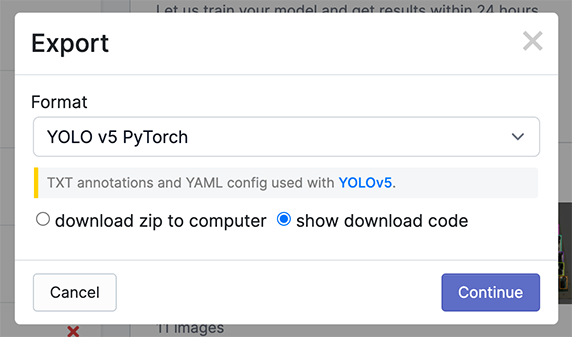

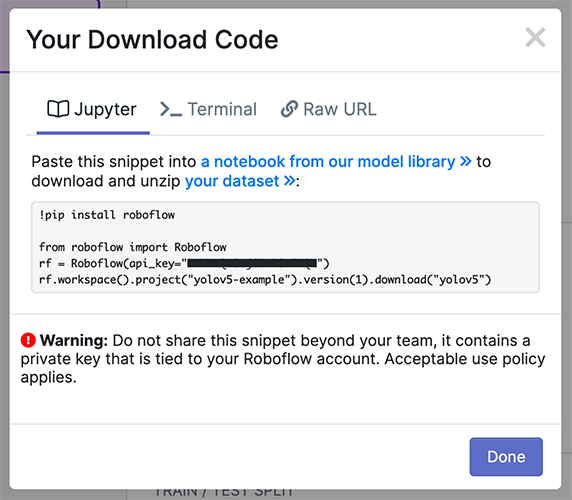

Export in YOLOv5 Pytorch format, then copy the snippet into your training

script or notebook to download your dataset.

Now continue with 2. Select a Model.

Or manually prepare your dataset (click to expand)

COCO128 is an example small tutorial dataset composed of the first 128 images in COCO train2017. These same 128 images are used for both training and validation to verify our training pipeline is capable of overfitting. data/coco128.yaml, shown below, is the dataset config file that defines 1) the dataset root directory path and relative paths to train / val / test image directories (or *.txt files with image paths) and 2) a class names dictionary:

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

path: ../datasets/coco128 # dataset root dir

train: images/train2017 # train images (relative to 'path') 128 images

val: images/train2017 # val images (relative to 'path') 128 images

test: # test images (optional)

# Classes (80 COCO classes)

names:

0: person

1: bicycle

2: car

...

77: teddy bear

78: hair drier

79: toothbrush

After using a tool like Roboflow Annotate to label your images, export your labels to YOLO format, with one *.txt file per image (if no objects in image, no *.txt file is required). The *.txt file specifications are:

- One row per object

- Each row is

class x_center y_center width heightformat. - Box coordinates must be in normalized xywh format (from 0 - 1). If your boxes are in pixels, divide

x_centerandwidthby image width, andy_centerandheightby image height. - Class numbers are zero-indexed (start from 0).

The label file corresponding to the above image contains 2 persons (class 0) and a tie (class 27):

Organize your train and val images and labels according to the example below. YOLOv5 assumes /coco128 is inside a /datasets directory next to the /yolov5 directory. YOLOv5 locates labels automatically for each image by replacing the last instance of /images/ in each image path with /labels/. For example:

../datasets/coco128/images/im0.jpg # image

../datasets/coco128/labels/im0.txt # label

Select a pretrained model to start training from. Here we select YOLOv5s, the second-smallest and fastest model available. See our README table for a full comparison of all models.

Train a YOLOv5s model on COCO128 by specifying dataset, batch-size, image size and either pretrained --weights yolov5s.pt (recommended), or randomly initialized --weights '' --cfg yolov5s.yaml (not recommended). Pretrained weights are auto-downloaded from the latest YOLOv5 release.

# Train YOLOv5s on COCO128 for 3 epochs

$ python train.py --img 640 --batch 16 --epochs 3 --data coco128.yaml --weights yolov5s.pt💡 ProTip: Add --cache ram or --cache disk to speed up training (requires significant RAM/disk resources).

💡 ProTip: Always train from a local dataset. Mounted or network drives like Google Drive will be very slow.

All training results are saved to runs/train/ with incrementing run directories, i.e. runs/train/exp2, runs/train/exp3 etc. For more details see the Training section of our tutorial notebook.

ClearML is completely integrated into YOLOv5 to track your experimentation, manage dataset versions and even remotely execute training runs. To enable ClearML:

pip install clearml- run

clearml-initto connect to a ClearML server (deploy your own open-source server here, or use our free hosted server here)

You'll get all the great expected features from an experiment manager: live updates, model upload, experiment comparison etc. but ClearML also tracks uncommitted changes and installed packages for example. Thanks to that ClearML Tasks (which is what we call experiments) are also reproducible on different machines! With only 1 extra line, we can schedule a YOLOv5 training task on a queue to be executed by any number of ClearML Agents (workers).

You can use ClearML Data to version your dataset and then pass it to YOLOv5 simply using its unique ID. This will help you keep track of your data without adding extra hassle. Explore the ClearML Tutorial for details!

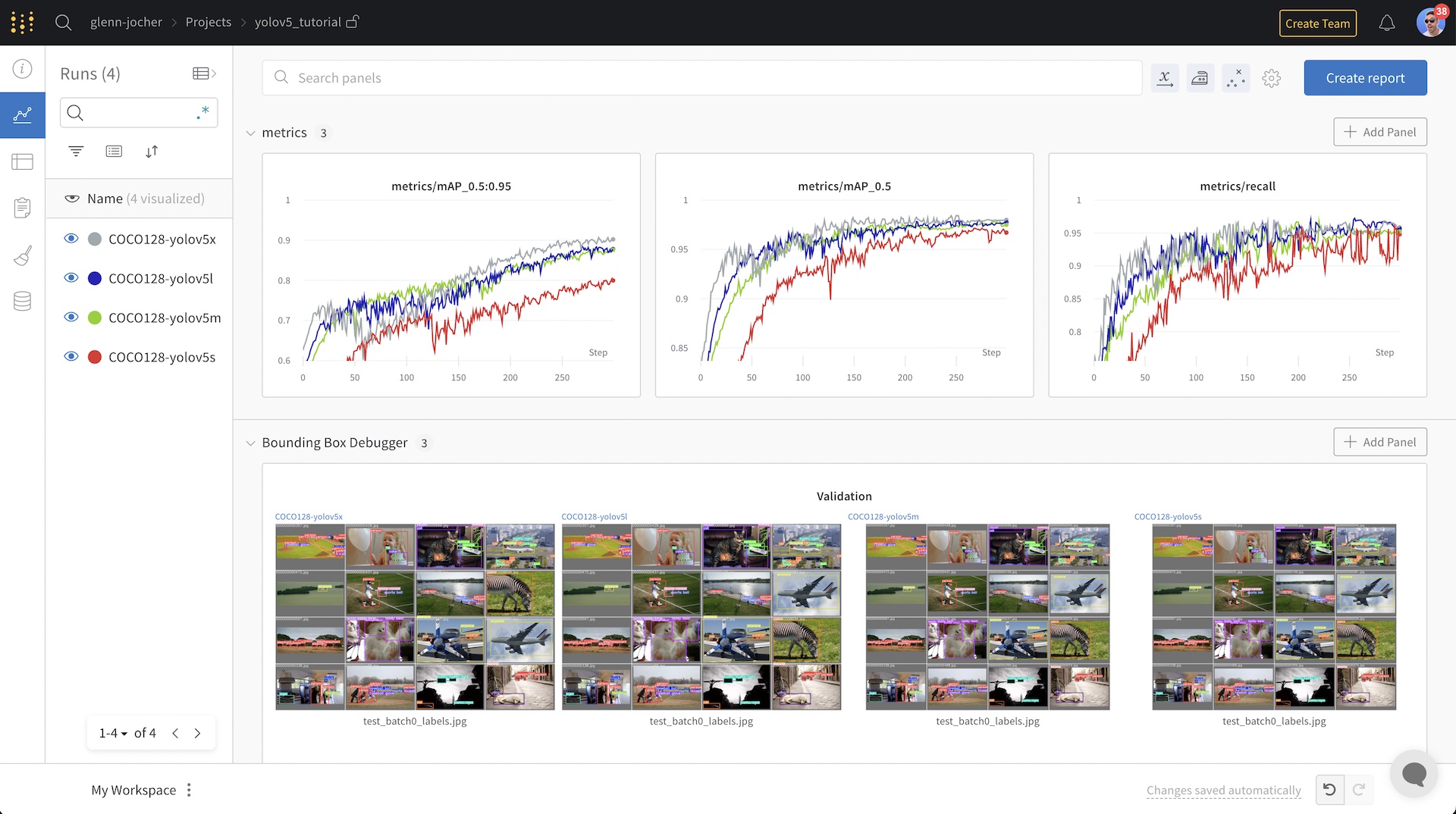

Weights & Biases (W&B) is integrated with YOLOv5 for real-time visualization and cloud logging of training runs. This allows for better run comparison and introspection, as well improved visibility and collaboration for teams. To enable W&B pip install wandb, and then train normally (you will be guided through setup on first use).

During training you will see live updates at https://wandb.ai/home, and you can create and share detailed Reports of your results. For more information see the YOLOv5 Weights & Biases Tutorial.

Training results are automatically logged with Tensorboard and CSV loggers to runs/train, with a new experiment directory created for each new training as runs/train/exp2, runs/train/exp3, etc.

This directory contains train and val statistics, mosaics, labels, predictions and augmentated mosaics, as well as metrics and charts including precision-recall (PR) curves and confusion matrices.

Results file results.csv is updated after each epoch, and then plotted as results.png (below) after training completes. You can also plot any results.csv file manually:

from utils.plots import plot_results

plot_results('path/to/results.csv') # plot 'results.csv' as 'results.png'

Once your model is trained you can use your best checkpoint best.pt to:

- Run CLI or Python inference on new images and videos

- Validate accuracy on train, val and test splits

- Export to TensorFlow, Keras, ONNX, TFlite, TF.js, CoreML and TensorRT formats

- Evolve hyperparameters to improve performance

- Improve your model by sampling real-world images and adding them to your dataset

YOLOv5 may be run in any of the following up-to-date verified environments (with all dependencies including CUDA/CUDNN, Python and PyTorch preinstalled):

-

Notebooks with free GPU:

- Google Cloud Deep Learning VM. See GCP Quickstart Guide

- Amazon Deep Learning AMI. See AWS Quickstart Guide

-

Docker Image. See Docker Quickstart Guide

If this badge is green, all YOLOv5 GitHub Actions Continuous Integration (CI) tests are currently passing. CI tests verify correct operation of YOLOv5 training, validation, inference, export and benchmarks on MacOS, Windows, and Ubuntu every 24 hours and on every commit.

© 2024 Ultralytics Inc. All rights reserved.

https://ultralytics.com