-

Notifications

You must be signed in to change notification settings - Fork 316

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Allow caches to opt-in to granular cleanup #863

Comments

|

The old world browser cache (non-SW) does not require any cleanup or special care, and that's very convenient. In SW I'd also would like to have the same type of cache, where I can carelessly keep putting things in forever, and have browser worry about managing the disk usage and cleanup. I'd rather not specify any maximum size or cleanup method — I'm OK with it being left up to the implementation. In my app I have maybe 5 files for which I need guarantee that they'll stay (HTML + JS), and an ever-growing list of thousands of assets (images, subpages) that can come and go, and the app will recover if they suddenly go missing, so a leaky cache would be great for them. |

|

I think this is covered by the v2 storage spec proposal. It had a way of marking a box such that individual items could be removed based on LRU or frecency. Doing it at the storage spec level would in theory create a consistent system for other storage APIs as well. |

|

I'm worried that the storage spec won't be able to provide the required granularity, but if it can, great! |

|

Yeah, the way we could do this is by filling out the details of whatwg/storage#18. We have a box that can used by dozens of APIs to store stuff on. We should probably define what kind of items go in a box and what kind of metadata they carry. |

|

As I don't know the details about storage API perhaps what I'm going to comment is already covered but it would be convenient to have some kind of functional event triggered under memory pressure that allow us to implement a resource release strategy or to opt for a built-in one. |

I believe this is what @slightlyoff has favored in the past (and now?), but gecko storage folks don't like it. Some of our reasons:

I feel like I'm missing one. Maybe @sicking remembers. So from the gecko team's perspective we prefer an API that lets an origin declare the relative persistence of data and then let the browser reason about those resources in aggregate. The browser can make better decisions with a master list of frecency. Right now that list just operates at the origin level, but the proposed spec would let entries in the frecency list refer to individual boxes or individual items within a box. |

|

I think that is correct, https://wiki.whatwg.org/wiki/Storage#Why_no_eviction_event.3F captures it too. |

|

I see. Thank you for the clarifications. What about allowing client code to annotate usage metadata to improve global reasoning... ? I think I'm going to read the storage spec. |

|

@delapuente yeah, I think that's something everyone is comfortable with, though how exactly that should be done is still unclear. It's very much a a post-v1-feature. |

|

Wouldn't simply reflect eventual expiration header be useful? So that the server can give an hint about how long it should be kept and the cache be aware about it? It would also simplify grateful engagement. Is this a bad idea? Has this approach been considered already? Is this already the case? Thanks |

|

Enhancement * |

|

@wanderview I agree that this wouldn't be very useful for compaction on a global level, but what about at a local level? You can't rely on local storage anyway, so you end up checking if something is in the cache, fetching it if it isn't, and pushing it into the cache. Managing your resource quota entails purging according to a TTL index or managing increasingly complex accounting on what has been used. I would love to be able to specify the size of the box and let the browser handle the rest. |

|

It would, however, be convenient if there was a direct way to get the "last accessed time" (and maybe even the "added time") in a fictive Note that this is already possible now, but there is quite some overhead—via @wanderview's comment: var cache;

cache.open('foo').then(function(foo) {

cache = foo;

return cache.match(request);

}).then(function(response) {

if (response) {

// update order of entries in keys()

cache.put(request, response.clone()); // <== overhead happens here

return response;

}

var maxItems = 100;

return addToLRU(cache, maxItems, request);

});

function addToLRU(cache, maxItems, request) {

return cache.keys().then(function(keys) {

if (keys.length < maxItems) {

return cache.add(request);

}

return cache.delete(keys[0]).then(function() {

return cache.add(request);

});

});

}This would not take care of eviction (why no eviction), but simplify common cache replacement policies, without having to resort to |

|

I like the idea of this, but I'm not 100% certain we know what the common cleanup approaches are desirable or the config needed as a result (Workbox has some of this but illustrating the behavior is difficult and it causes a lot of developer confusion, possible due to implementation problems and / or debugging troubles for end users for local testing). Aside: If IndexedDB wasn't so painful to use, I don't think this feature would be as desirable as it seems on the surface. |

|

Thanks, @gauntface! Any further thoughts from the Workbox team or others in general? Should I fork this (this being a |

|

The problem with "last accessed time" is the difference between "accessed" and "used". If I do |

|

Probably only calls to |

|

"last matched" then? I guess that's useful. Could you show some code developers would write to clear up a cache, using the proposed API? |

|

And what about explicitly marking them for disposal? What if we provide a general disposal queue and the developer can simply mark a response as disposable: let response = await Caches.match(request);

if (response) {

handle(response);

response.isDisposable(true); // adds to the disposal queue

}It gives control over semantics to the developer. We can provide extra parameters to common methods to ease batch operations: let keys = await cache.keys(request, { disposable: true });

keys[keys.length - 1].isDisposable(false); // all matches are disposable except the last one |

|

If we want auto-cleanup within a cache I think it'd be more convenient if the settings applied to a cache rather than an individual response. await cache.setEvictionBehaviour(…);That way you're applying caching behaviour to the cache. |

|

@jakearchibald I'd prefer it if we can sort out if we can do this on top of buckets somehow. Where you create a bucket with a policy and then associate cache items with that bucket. Maybe that's too complex though and we need buckets to contain an entire cache or nothing, but it's worth exploring a bit. In particular as you might want to share the eviction logic with items stored in other places, such as IDB. |

|

(Note, the above comment doesn't apply to exposing "last matched time". Just to any kind of storage policy.) |

|

@jakearchibald, as a response to your comment, here is a still raw sketch of an API of a fictive // Create `CacheItem` foo at timestamp 1000

await cache.add('foo');

// Get statistics about the never matched `CacheItem`

await cache.has('foo');

// returns `{key: 'foo', timeCached: 1000, timeMatched: Infinity}`

// Get statistics about a non-existent `CacheItem`

await cache.has('bar');

// returns `undefined`

// Use `CacheItem` foo at timestamp 2000

await cache.match('foo');

// Get statistics about the now matched (=used) `CacheItem`

await cache.has('foo');

// returns `{key: 'foo', timeCached: 1000, timeMatched: 2000}`This would allow for relatively simple to implement cache replacement policies that are independent of Indexed Database, like, for example, Least Recently Used: const MAX_CACHE_ITEMS = 5;

const cache = await caches.open('lru-cache');

const addToCache = async (cache, url) => {

const keys = await cache.keys();

if (keys.length < MAX_CACHE_ITEMS) {

return await cache.add(url);

}

const cacheItems = await Promise.all(

keys.map(async key => await cache.has(key))

);

const lruCacheItem = cacheItems.sort(

(a, b) => a.timeMatched - b.timeMatched

)[0];

await cache.delete(lruCacheItem.key);

return await cache.add(url);

};Something I'm unsure about is the appropriateness of |

|

@annevk do you think the buckets approach would allow granular eviction within the bucket, or just control when the whole bucket can be evicted? |

|

@jakearchibald I'm not sure, I was thinking per bucket, but we really haven't explored it much yet. I just think this is a problem that spans across storage APIs, so it'd be nice if we figured out a way to tackle it for all of them, so you don't end up having to put stuff in the Cache API that doesn't really fit there because of the eviction feature. |

|

Adding metadata seems like a good idea. We should look at what workbox stores, and help them do what they do, but faster. |

|

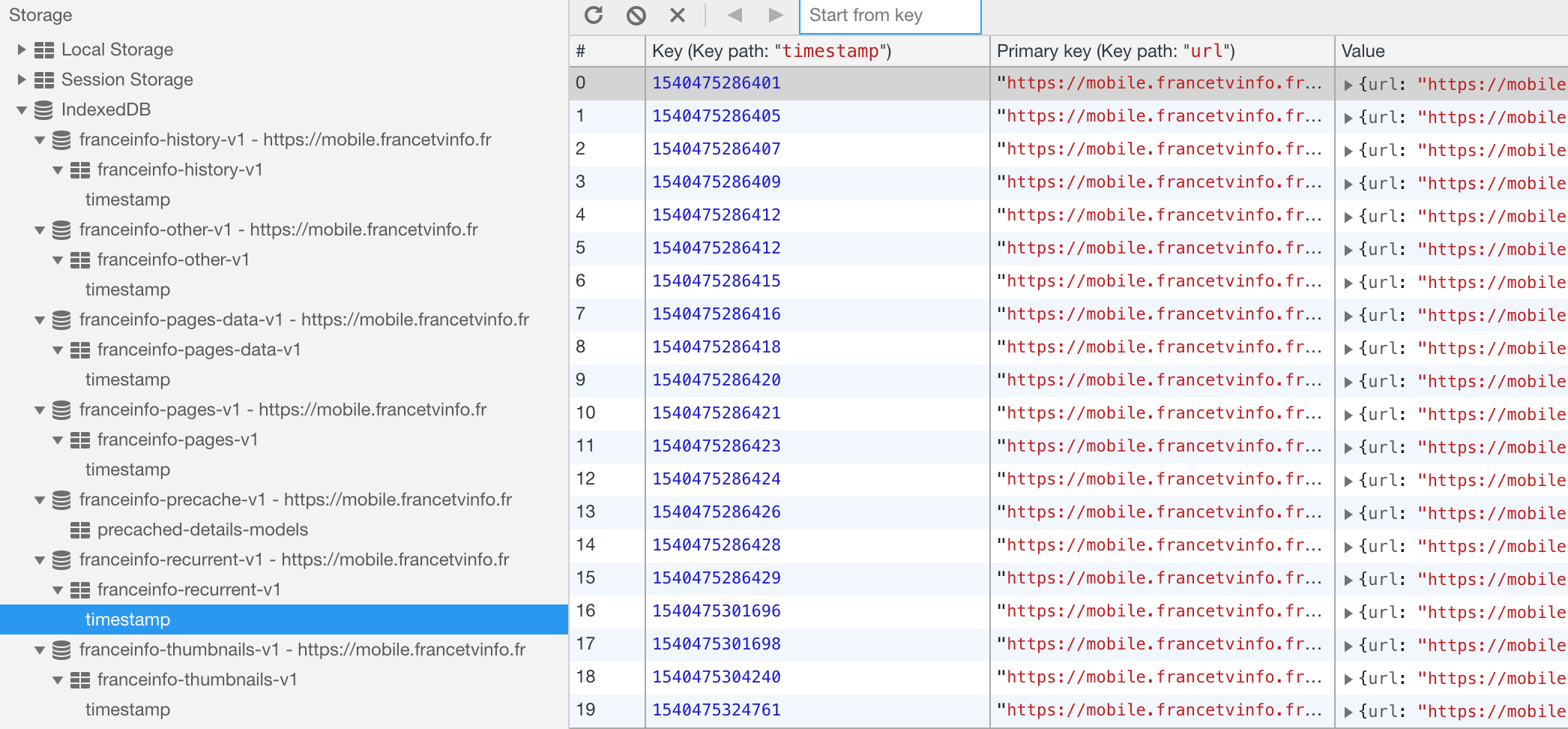

As an additional data point, Workbox, which is widely used in the industry, handles cache expiration via IDB timestamps: |

|

Seems like it would be better to store request/response into IDB. |

|

FYI: @jeffposnick, @philipwalton for Workbox requirements. The tl;dr of this issue is:

|

|

(Some random thoughts, with the caveat that I might be missing context from the F2F discussion.) If there aren't going to be any changes to the relevant standards' status quo, I am not sure that it makes sense to move away from Workbox's current model (IDB for timestamp metadata + the Cache Storage API for the My main concern going into this is that it locks folks into using Workbox (or But perhaps if the official guidance is that IndexedDB should be used for this sort of thing, someone from the community see that as an opportunity to write a standalone helper library to implement storage and expiration. That could then end up being an alternative for folks who would rather not opt-in to using a framework like Workbox or |

|

Some thoughts as well:

|

When I wrote this, I meant functional requirements to make cache expiration work (as in: "What data does Workbox need for its cache expiration logic?"); and not so much the way it's currently implemented and working. Sorry for not formulating the question more clearly. @jeffposnick, I get that the current model is battle-tested and working well, yet as @philipwalton writes in his first bullet, the current experience for developers if they want to implement cache expiration themselves (i.e., not using Workbox) is not great. So with your "Workbox implementor glasses" off |

Allowing arbitrary data to be stored with a response doesn't help this much. The data can easily get out of sync, although it's true both will be cleared at the same time.

Agreed. This would be useful for background fetch too. |

I think it's pretty obvious that arbitrary metadata storage along with responses would have made workbox easier. I don't think anyone's saying otherwise. You could equally say "implementing Ember would have been easier if the whole of Ember was already in the platform". The issues we raised in the F2F are:

|

|

I'm at a bit of a loss whether there's specific feedback (if any) folks are looking for—it sounds like all of the relevant points were already discussed during the F2F, and I trust that the folks involved understand the developer needs and are balancing that against platform complexity. |

Right, my specific concern was: given that some browsers apparently reserve the right to clear entries from the cache at their discretion. Developers storing request metadata in IDB will need to write extra code to account for that possibility. |

Also, I believe there are different "Clear browsing data" options available to the user which allows a user to clear Cache but not IDB. When does Workbox check expiration dates? Our experience has been that IDB latency is not reliably low enough to block time-sensitive operations, like serving requests. We put our expiration dates as a header in the cached response, and check the header when serving, to make sure the asset isn't expired. The IDB latency issue is discussed more in #1331. Are there any other known use cases for

|

Workbox has a freshness sanity check prior to calling It expires entries based on IndexedDB metadata (maximum entries and/or maximum age) via a separate codepath that runs after |

I don't think this is quite accurate, at least not in chrome and firefox. The separate "cached" data that can be cleared is http cache. I believe quota-managed storage is always cleared atomically. This also matches the clear-site-data mechanism which can clear http cache separately, but always cleared cache API and IDB together under "storage": https://w3c.github.io/webappsec-clear-site-data/#grammardef-storage |

|

@wanderview, not sure if you were referencing my comment or just @asakusuma's, but I was basing my claim on a post from the webkit.org blog:

I'm not sure how often this actually happens in the wild though. |

|

@philipwalton Yea, I'm aware of that, although I've never understood how the webkit team expects sites to manage that kind of unpredictable platform behavior. There are many web platform features built around the concept of quota storage persisting together or being wiped all at once. Expecting sites to handle partial state due to unpredictable partial wipes seems quite challenging. |

|

@aarongustafson has brought this topic up again in a new MSEdgeExplainer document. |

Something like:

…where the developer can allow the browser to auto-delete items from the cache based on some kind of strategy.

Apologies for the really rough idea, just wanted to add something before I forgot 😄

The text was updated successfully, but these errors were encountered: