- Clone repository: `git clone https://github.com/walzimmer/bat-3d.git`

- Install npm:
  - Linux: `sudo apt-get install npm`
  - Windows: https://nodejs.org/dist/v10.15.0/node-v10.15.0-x86.msi

- Install PhpStorm or WebStorm (IDE with integrated web server): https://www.jetbrains.com/phpstorm/download/download-thanks.html

- [OPTIONAL] Install WhatPulse to measure the number of clicks and key strokes while labeling: https://whatpulse.org/

- Open the folder `bat-3d` in PhpStorm.

- Move into the directory: `cd bat-3d`

- Download sample scenes extracted from the NuScenes dataset from here and extract the content into the `bat-3d/input/` folder.

- Install the required packages: `npm install`

- Open `index.html` with chromium-browser (Linux) or Chrome (Windows) within the IDE: right click on `index.html` -> Open in Browser -> Chrome/Chromium.

Reference: https://arxiv.org/abs/1905.00525


Link: https://www.youtube.com/watch?v=gSGG4Lw8BSU


To annotate your own data, follow these steps:

- Create a new folder under the `input` folder (e.g. `bat-3d/input/waymo`).

- For each annotation sequence, create a separate folder, e.g.:
  - `input/waymo/20210103_waymo`
  - `input/waymo/20210104_waymo`

- Under each sequence, create the following folders:
  - `input/waymo/20210103_waymo/annotations` (this folder will contain the downloaded annotations)
  - `input/waymo/20210103_waymo/pointclouds` (place your point cloud scans here, in ASCII `.pcd` format)
  - `input/waymo/20210103_waymo/pointclouds_without_ground` (optional, required for the "Filter ground" checkbox: remove the ground with the `scripts/nuscenes_devkit/python-sdk/scripts/export_pointcloud_without_ground_nuscenes.py` script, after changing its threshold of -1.7 to the height of your LiDAR sensor)
  - `input/waymo/20210103_waymo/images` (optional: create one folder per camera, e.g. `CAM_BACK`, `CAM_BACK_LEFT`, `CAM_BACK_RIGHT`, `CAM_FRONT`, `CAM_FRONT_LEFT`, `CAM_FRONT_RIGHT`)
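The `pointclouds_without_ground` folder is meant to be filled by the repository's `export_pointcloud_without_ground_nuscenes.py` script, which is not reproduced here. Purely as an illustration of a fixed height threshold, the Python sketch below filters an ASCII `.pcd` file. It assumes a `FIELDS` line that contains `z`, coordinates in the sensor frame with z pointing up, and that every point at or below the threshold (default -1.7) is ground. The function names are hypothetical, not part of BAT-3D.

```python
def parse_ascii_pcd(text):
    """Split ASCII .pcd text into header lines and point rows (lists of string tokens)."""
    lines = text.splitlines()
    for i, line in enumerate(lines):
        if line.startswith("DATA"):
            if line.split()[1].lower() != "ascii":
                raise ValueError("only 'DATA ascii' .pcd files are supported")
            rows = [row.split() for row in lines[i + 1:] if row.strip()]
            return lines[: i + 1], rows
    raise ValueError("no DATA line found")


def remove_ground(header, rows, z_threshold=-1.7):
    """Keep only rows whose z value lies strictly above z_threshold (assumed ground level)."""
    fields = next(line.split()[1:] for line in header if line.startswith("FIELDS"))
    z_col = fields.index("z")
    return [row for row in rows if float(row[z_col]) > z_threshold]


def format_ascii_pcd(header, rows):
    """Rebuild the .pcd text, updating WIDTH, HEIGHT and POINTS to the new point count."""
    n = len(rows)
    updated = {"WIDTH": f"WIDTH {n}", "HEIGHT": "HEIGHT 1", "POINTS": f"POINTS {n}"}
    # Rows are written back as their original string tokens, so integer fields stay integers.
    out = [updated.get(line.split()[0], line) if line.strip() else line for line in header]
    return "\n".join(out + [" ".join(row) for row in rows]) + "\n"
```

To use it, read a scan such as `pointclouds/000000.pcd`, pass its text through `parse_ascii_pcd` and `remove_ground`, and write the output of `format_ascii_pcd` to the file with the same name in `pointclouds_without_ground/`. A single height threshold assumes a roughly flat road surface.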

Make sure that the LiDAR scan file names follow the naming format `000000.pcd`, `000001.pcd`, `000002.pcd`, and so on. The same applies to image file names (`000000.png`, `000001.png`, `000002.png`, ...) and annotation file names (`000000.json`, `000001.json`, `000002.json`, ...).
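The folder layout and naming rules above can be set up and checked with a short script. The following is a minimal Python sketch, assuming the `input/<dataset>/<sequence>/` structure described above and, beyond what is stated, that frame numbers start at `000000` and have no gaps. `create_sequence` and `check_frame_names` are hypothetical helpers, not part of BAT-3D.

```python
import re
from pathlib import Path

# Sub-folders described above (pointclouds_without_ground and images are optional).
SUBFOLDERS = ("annotations", "pointclouds", "pointclouds_without_ground", "images")
CAMERAS = ("CAM_BACK", "CAM_BACK_LEFT", "CAM_BACK_RIGHT",
           "CAM_FRONT", "CAM_FRONT_LEFT", "CAM_FRONT_RIGHT")


def create_sequence(input_dir, dataset, sequence, cameras=CAMERAS):
    """Create <input_dir>/<dataset>/<sequence>/ with its sub-folders and return its path."""
    seq = Path(input_dir) / dataset / sequence
    for name in SUBFOLDERS:
        (seq / name).mkdir(parents=True, exist_ok=True)
    for cam in cameras:
        (seq / "images" / cam).mkdir(exist_ok=True)
    return seq


def check_frame_names(names, extension):
    """List problems with file names that should read 000000.<ext>, 000001.<ext>, ..."""
    pattern = re.compile(r"(\d{6})\." + re.escape(extension))
    problems, frames = [], []
    for name in sorted(names):
        match = pattern.fullmatch(name)
        if match is None:
            problems.append(f"unexpected file name: {name}")
        else:
            frames.append(int(match.group(1)))
    # Assumption: frames are numbered consecutively from 000000 without gaps.
    if frames != list(range(len(frames))):
        problems.append("frame numbers are not consecutive from 000000")
    return problems
```

For example, `check_frame_names([p.name for p in (seq / "pointclouds").iterdir()], "pcd")` returns an empty list when a sequence's scans are named correctly; the same check can be run with `"json"` on `annotations` and with `"png"` on each camera folder.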

- When annotating only point cloud data, make sure to set this variable to `true` in `js/base_label_tool.js`:

  `pointCloudOnlyAnnotation: true`

- Open the annotation tool in your web browser and change the dataset from NuScenes to your own dataset (e.g. waymo) in the drop-down field.

1. Watch the raw video (10 sec) to get familiar with the sequence and to see where interpolation makes sense.

2. Watch the tutorial videos to get familiar with translating, scaling and rotating objects, interpolation, and how to use the helper views.

3. Start WhatPulse. Log in with koyunujiju@braun4email.com and password: labeluser

4. Draw a bounding box in the Bird's-Eye-View (BEV).

5. Move/scale it in BEV using the 3D arrows (drag and drop) or the sliders.

6. Choose one of the 5 classes (Car, Pedestrian, Cyclist, Motorbike, Truck).

7. Interpolate if necessary:
   - Select the object to interpolate by clicking on its bounding box.
   - Activate 'Interpolation Mode' in the menu (checkbox) -> the start position will be saved.
   - Move to the desired frame by skipping x frames.
   - Translate the object to its new position.
   - Click on the 'Interpolate' button in the menu.

8. Repeat steps 4-7 for all objects in the sequence.

9. Download the labels to your computer (JSON file).

10. Stop the time measurement after labeling is done.

11. Take screenshots of the keyboard and mouse heat maps, and record the number of clicks and keystrokes.
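The README does not specify how the 'Interpolate' button fills the skipped frames (the tool itself is written in JavaScript). The Python sketch below shows one plausible scheme, assuming plain linear interpolation of the box center and size between the saved start position and the new position, with the heading interpolated along the shorter arc. `Box` and `interpolate_boxes` are hypothetical names, not the tool's code.

```python
import math
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical cuboid: center (x, y, z), size and heading yaw in radians."""
    x: float
    y: float
    z: float
    length: float
    width: float
    height: float
    yaw: float


def lerp(a, b, t):
    """Linear interpolation between a (t = 0) and b (t = 1)."""
    return a + (b - a) * t


def lerp_angle(a, b, t):
    """Interpolate a heading along the shorter arc, e.g. 350 deg -> 10 deg passes 0 deg."""
    diff = math.atan2(math.sin(b - a), math.cos(b - a))
    return a + diff * t


def interpolate_boxes(start, end, start_frame, end_frame):
    """Return {frame: Box} for every frame strictly between the two keyframes."""
    boxes = {}
    for frame in range(start_frame + 1, end_frame):
        t = (frame - start_frame) / (end_frame - start_frame)
        boxes[frame] = Box(
            lerp(start.x, end.x, t), lerp(start.y, end.y, t), lerp(start.z, end.z, t),
            lerp(start.length, end.length, t), lerp(start.width, end.width, t),
            lerp(start.height, end.height, t),
            lerp_angle(start.yaw, end.yaw, t),
        )
    return boxes
```

Linear interpolation only matches objects that move at a roughly constant velocity between the two keyframes, which is why the raw video is worth watching first to decide where interpolation makes sense.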

Hints:

- Select the `Copy label to next frame` checkbox if you want to keep the label (position, size, class) for the next frame.

- Use the helper views to align the object along the z-axis (no need to switch into 3D view).

- Label one object from start to end (using interpolation) and then continue with the next object.

- Do not apply more than one box to a single object.

- Check every cuboid in every frame to make sure all points are inside the cuboid and that it looks reasonable in the image view.

- The program has been quite stable in my use cases, but there is no guarantee that it won't crash, so please back up (download) your annotated scenes roughly every 10 minutes. Saving to the browser's local storage is done automatically.

- Download the annotation file into the following folder: `bat-3d/input/<DATASET>/<SEQUENCE>/annotations`

- Please open new issue tickets on GitHub for questions and bug reports, or write me an email (wzimmer@eng.ucsd.edu). Thanks!

- Minimum LIDAR Points :

- Label any target object containing at least 10 LIDAR points, as long as you can be reasonably sure you know the location and shape of the object. Use your best judgment on correct cuboid position, sizing, and heading.

- Cuboid Sizing :

- Cuboids must be very tight. Draw the cuboid as close as possible to the edge of the object without excluding any LIDAR points. There should be almost no visible space between the cuboid border and the closest point on the object.

- Extremities :

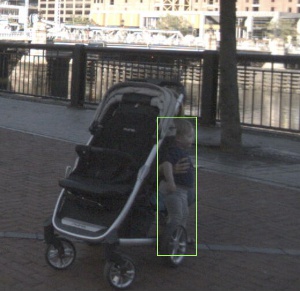

- If an object has extremities (eg. arms and legs of pedestrians), then the bounding box should include the extremities.

- Exception: Do not include vehicle side view mirrors. Also, do not include other vehicle extremities (crane arms etc.) that are above 1.5 meters high.

- Carried Object :

- If a pedestrian is carrying an object (bag, umbrella, tools etc.), such objects will be included in the bounding box for the pedestrian. If two or more pedestrians are carrying the same object, the bounding box of only one of them will include the object.

- Use Images when Necessary:

- For objects with few LIDAR points, use the images to make sure boxes are correctly sized. If you see that a cuboid is too short in the image view, adjust it to cover the entire object based on the image view.
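The minimum-point and cuboid-sizing rules above can also be checked offline. The Python sketch below assumes a cuboid described by its center, its (length, width, height) and a yaw angle about the vertical axis, with points given in the same frame; points on the boundary count as inside. The function names and the 0.1 m tolerance used to approximate "almost no visible space" are hypothetical choices, not part of BAT-3D.

```python
import math

MIN_POINTS = 10  # guideline: label objects with at least 10 LiDAR points


def to_box_frame(point, center, yaw):
    """Express point (x, y, z) in the cuboid frame (length axis, width axis, height axis)."""
    dx, dy, dz = (p - c for p, c in zip(point, center))
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    # Rotate by -yaw about the z axis so the offsets line up with the box axes.
    return (cos_y * dx + sin_y * dy, -sin_y * dx + cos_y * dy, dz)


def points_in_cuboid(points, center, size, yaw):
    """Return the points that lie inside the cuboid (boundary included)."""
    half = [s / 2 for s in size]
    return [p for p in points
            if all(abs(a) <= h for a, h in zip(to_box_frame(p, center, yaw), half))]


def face_gaps(inside, center, size, yaw):
    """Gap between each face and its closest enclosed point (inside must be non-empty).

    Order: (+length, -length, +width, -width, top, bottom).
    """
    local = [to_box_frame(p, center, yaw) for p in inside]
    gaps = []
    for axis, extent in enumerate(size):
        coords = [q[axis] for q in local]
        gaps += [extent / 2 - max(coords), extent / 2 + min(coords)]
    return tuple(gaps)


def review_cuboid(points, center, size, yaw, max_gap=0.1):
    """Return a list of guideline issues for one cuboid (an empty list means it passes)."""
    inside = points_in_cuboid(points, center, size, yaw)
    if len(inside) < MIN_POINTS:
        return [f"only {len(inside)} points inside (minimum {MIN_POINTS})"]
    loose = [g for g in face_gaps(inside, center, size, yaw) if g > max_gap]
    if loose:
        return [f"{len(loose)} face(s) more than {max_gap} m from the nearest point"]
    return []
```

`review_cuboid` cannot tell whether points belonging to the object were left outside the box; that part of the rule still needs the visual check in every frame. The `max_gap` default is arbitrary and would need tuning to the sensor's point density.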

For every bounding box, include one of the following labels:

-

Car: Vehicle designed primarily for personal use, e.g. sedans, hatch-backs, wagons, vans, mini-vans, SUVs, jeeps and pickup trucks (a pickup truck is a light duty truck with an enclosed cab and an open or closed cargo area; a pickup truck can be intended primarily for hauling cargo or for personal use).

-

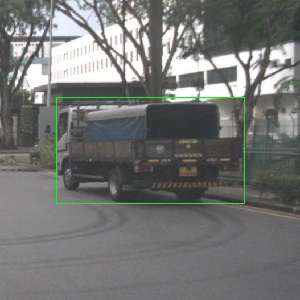

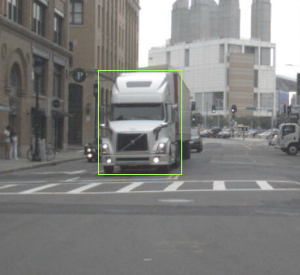

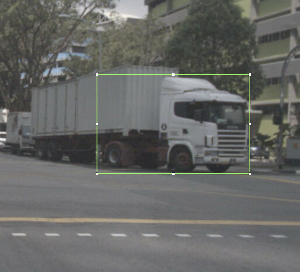

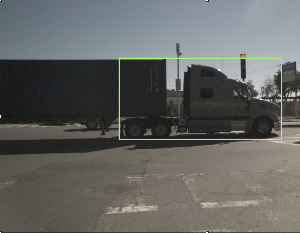

Truck: Vehicles primarily designed to haul cargo including lorrys, trucks.

-

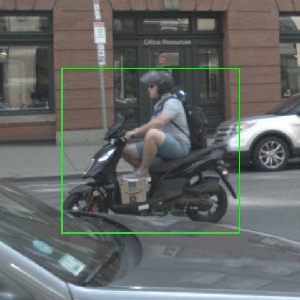

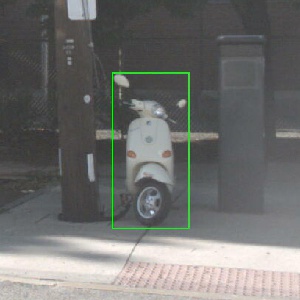

Motorcycle: Gasoline or electric powered 2-wheeled vehicle designed to move rapidly (at the speed of standard cars) on the road surface. This category includes all motorcycles, vespas and scooters. It also includes light 3-wheel vehicles, often with a light plastic roof and open on the sides, that tend to be common in Asia (rickshaws). If there is a rider and/or passenger, include them in the box.

-

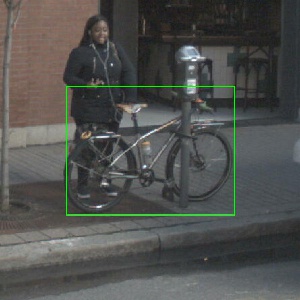

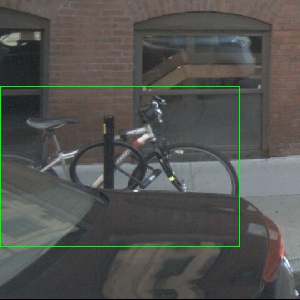

Bicycle: Human or electric powered 2-wheeled vehicle designed to travel at lower speeds either on road surface, sidewalks or bicycle paths. If there is a rider and/or passenger, include them in the box.

-

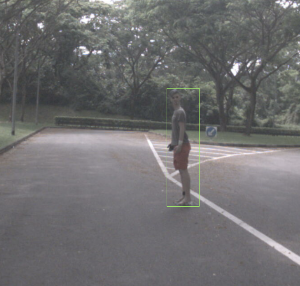

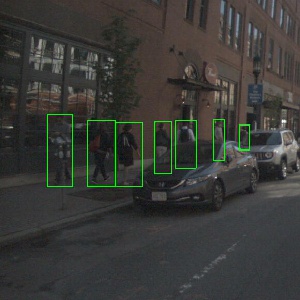

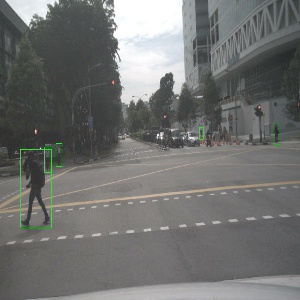

Pedestrian: An adult/child pedestrian moving around the cityscape. Mannequins should also be annotated as

Pedestrian.

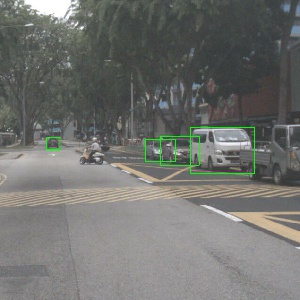

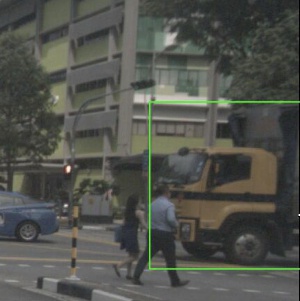

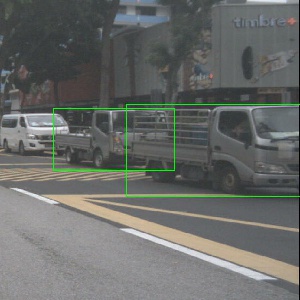

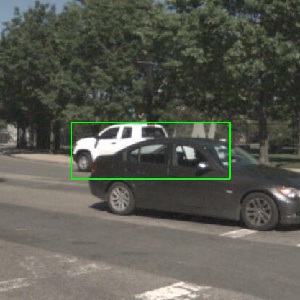

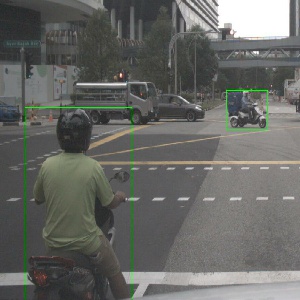

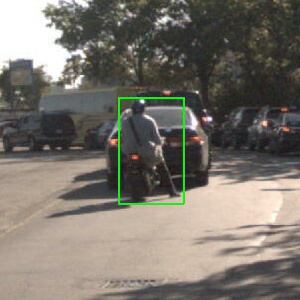

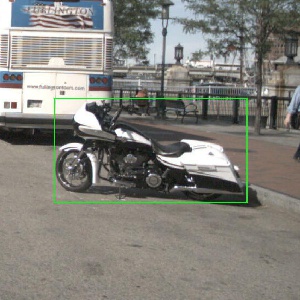

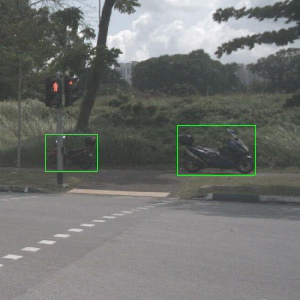

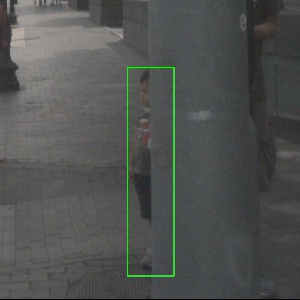

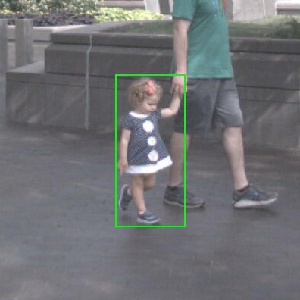

Bounding box color convention in the example images:

- Green: objects like this should be annotated.

- Car: Vehicle designed primarily for personal use, e.g. sedans, hatchbacks, wagons, vans, mini-vans, SUVs and jeeps.
  - If it is primarily designed to haul cargo, it is a truck.

- Truck: Vehicles primarily designed to haul cargo, including lorries, trucks and pickup trucks (a pickup truck is a light duty truck with an enclosed cab and an open or closed cargo area; a pickup truck can be intended primarily for hauling cargo or for personal use).

- Motorcycle: Gasoline or electric powered 2-wheeled vehicle designed to move rapidly (at the speed of standard cars) on the road surface. This category includes all motorcycles, vespas and scooters. It also includes light 3-wheel vehicles, often with a light plastic roof and open on the sides, that tend to be common in Asia (rickshaws).
  - If there is a rider, include the rider in the box.
  - If there is a passenger, include the passenger in the box.
  - If there is a pedestrian standing next to the motorcycle, do NOT include them in the box.

- Bicycle: Human or electric powered 2-wheeled vehicle designed to travel at lower speeds on the road surface, sidewalks or bicycle paths.
  - If there is a rider, include the rider in the box.
  - If there is a passenger, include the passenger in the box.
  - If there is a pedestrian standing next to the bicycle, do NOT include them in the box.

- Pedestrian: An adult/child pedestrian moving around the cityscape.
  - Mannequins should also be treated as pedestrians.

Copyright © 2019 The Regents of the University of California

All Rights Reserved. Permission to copy, modify, and distribute this tool for educational, research and non-profit purposes, without fee, and without a written agreement is hereby granted, provided that the above copyright notice, this paragraph and the following three paragraphs appear in all copies. Permission to make commercial use of this software may be obtained by contacting:

Office of Innovation and Commercialization

9500 Gilman Drive, Mail Code 0910

University of California

La Jolla, CA 92093-0910

(858) 534-5815

innovation@ucsd.edu

This tool is copyrighted by The Regents of the University of California. The code is supplied “as is”, without any accompanying services from The Regents. The Regents does not warrant that the operation of the tool will be uninterrupted or error-free. The end-user understands that the tool was developed for research purposes and is advised not to rely exclusively on the tool for any reason.

IN NO EVENT SHALL THE UNIVERSITY OF CALIFORNIA BE LIABLE TO ANY PARTY FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES, INCLUDING LOST PROFITS, ARISING OUT OF THE USE OF THIS TOOL, EVEN IF THE UNIVERSITY OF CALIFORNIA HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGE. THE UNIVERSITY OF CALIFORNIA SPECIFICALLY DISCLAIMS ANY WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE TOOL PROVIDED HEREUNDER IS ON AN “AS IS” BASIS, AND THE UNIVERSITY OF CALIFORNIA HAS NO OBLIGATIONS TO PROVIDE MAINTENANCE, SUPPORT, UPDATES, ENHANCEMENTS, OR MODIFICATIONS.