
Home

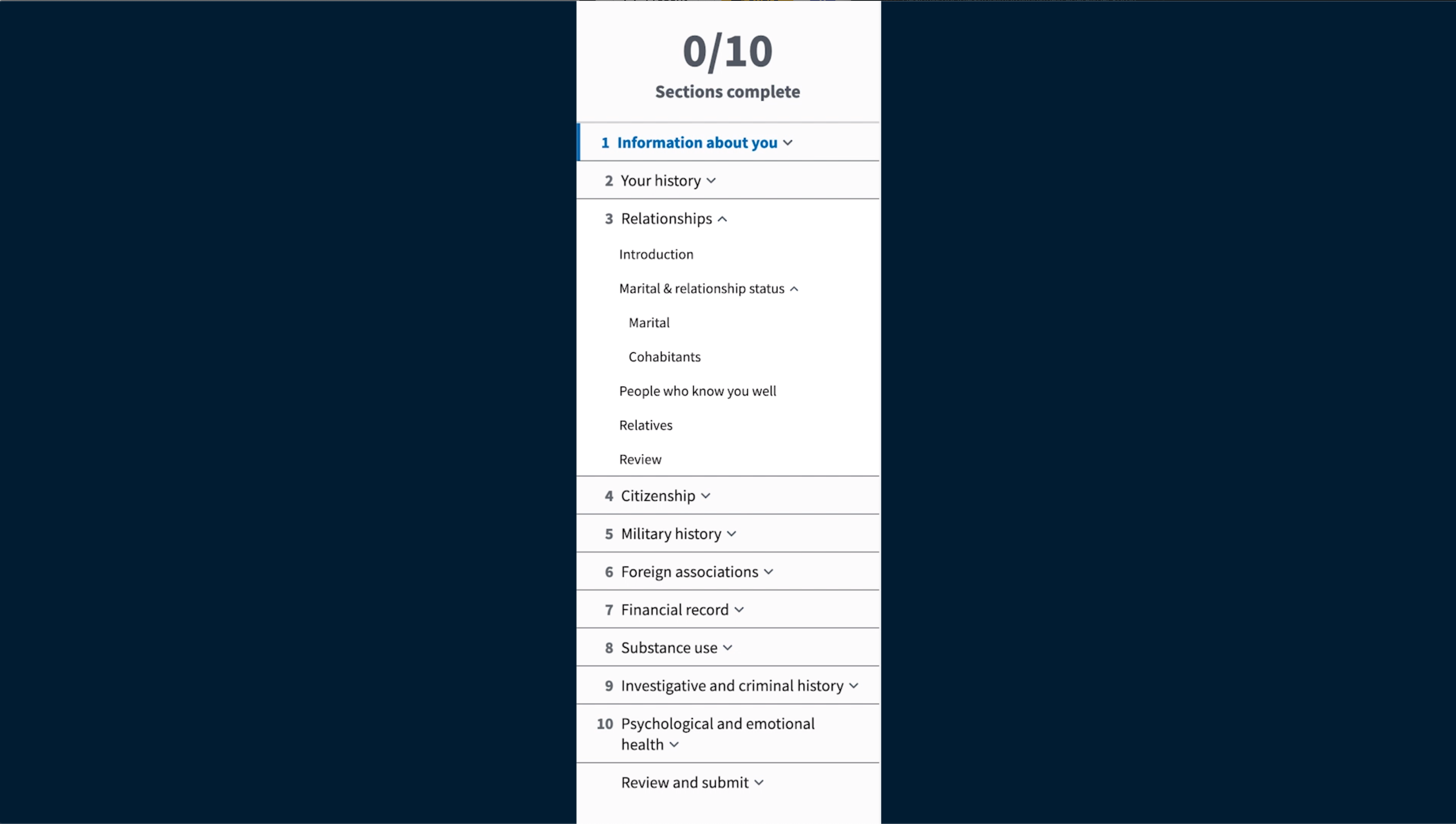

NBIS (National Background Investigation System) is responsible for creating a new platform that would replace e-QIP and other existing OPM systems to modernize the background investigation process. 18F has worked closely with NBIB (OPM) and NBIS (DISA) to replace the applicant-facing functionality of e-QIP by testing and deploying a new system called eApp. Culper is the open source component of eApp.

With over 3.4 million clearance holders across federal and industry employees, the background investigation process is a large undertaking. The first step for applicants is to provide detailed personal information, which is used throughout the remainder of the investigation. On average, it takes 3 weeks to complete this first step. That is where Culper (as part of eApp) gets involved. This new system was built to accelerate the background investigation process and address the most painful part of the process for applicants: the form.

Culper employed a user-centered design approach, leveraging key principles from the U.S. Digital Services Playbook and the accessible, open source U.S. Web Design System. The project team used agile development methods, ensuring an adaptive process that responded to emergent user needs and changing requirements.

Take a look at our demo video for more details.

- Utilize a modern technology stack

- Employ user-centered design to focus product improvements on user needs

- Successfully integrate with the larger NBIS platform for the full background investigation process

- Provide applicants a faster and more accurate tool for submitting their information for the background investigation process

- Speed up the overall background investigation process by seeding it with accurate and well-formatted data

- Create a more flexible system for developing and releasing updates to background investigation forms

- National Background Investigation System (NBIS)

- Office of Personnel Management (OPM)

- Defense Security Service (DSS)

- Defense Information Systems Agency (DISA)

- National Background Investigation Bureau (NBIB)

- Applicants for varying levels of security clearances (both Federal and industry employees)

- Government and industry agencies requesting background investigations for employees

- Investigators and adjudicators conducting background investigations

- NBIS technology teams integrating all background investigation systems

We believe that by introducing a simpler, more accurate, and less burdensome system, we will reduce the time it takes for applicants to complete the first step in the investigation process. We also believe that this will result in a faster overall investigation while providing more accurate background data.

The team took a holistic look at how the standard Federal Investigation forms are organized and how the existing online tool (e-QIP) displayed them, in order to better understand the challenges with that system. User interviews with applicants and agencies uncovered insights into these challenges and opportunities for improvement.

Insight: Form sections are not being grouped in a logical way.

Improvement: We reorganized sections and subsections of the SF forms into more intuitive and logical groupings. This new organization eases the applicant into the form. The first few sections cover quick personal details while the later sections dig deeper into more complicated situations.

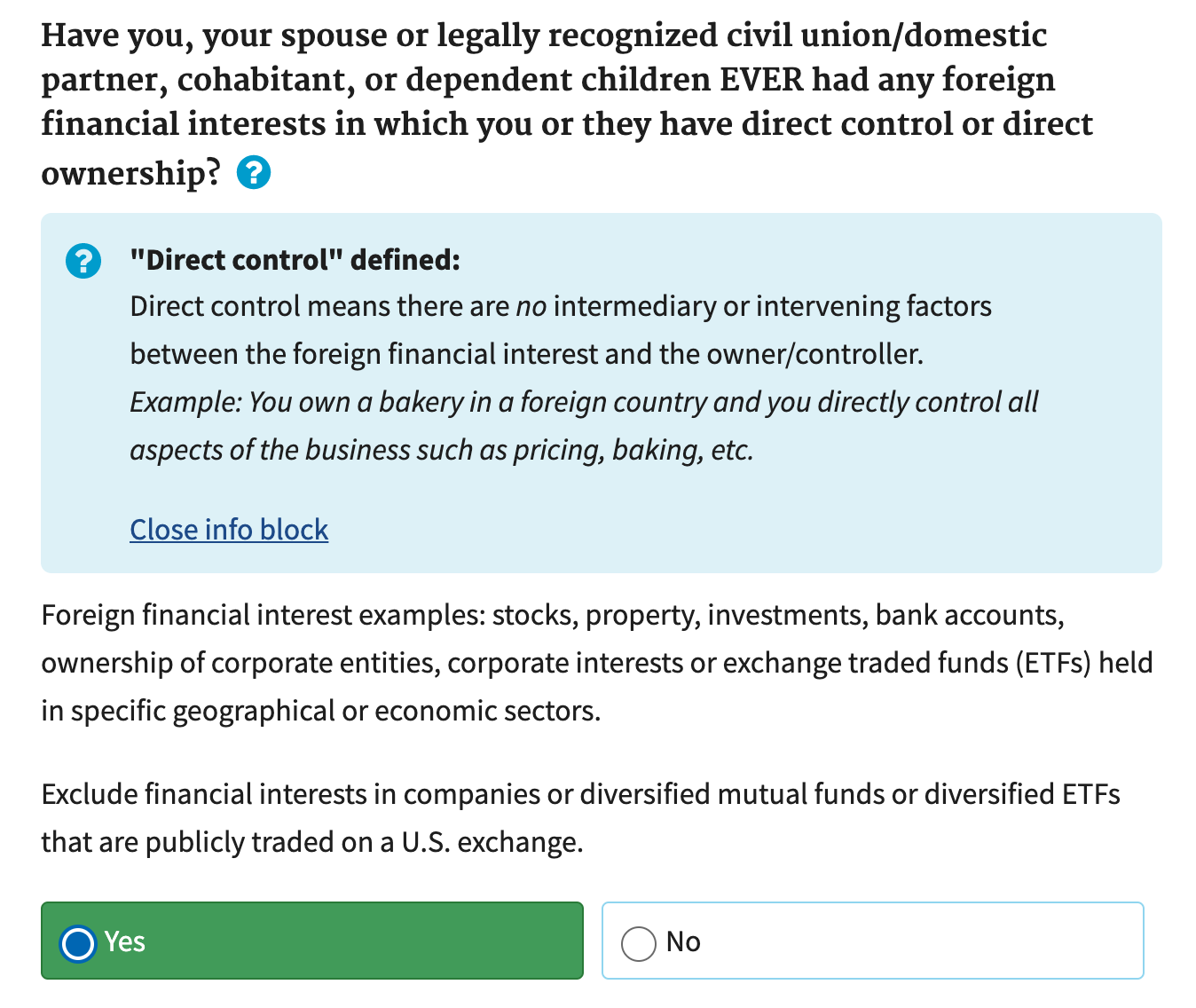

Insight: Questions are not easily understood due to jargon, acronyms, or unknown phrases.

Improvement: We provide additional help text for certain questions within the form. These additional help callouts provide clarifying definitions of jargon or government acronyms in context of each question they're applied to.

Insight: Applicants have to navigate through all questions in the form, even if some aren't relevant to them.

Improvement: Top level yes/no questions provide helpful question branching. By answering “No” to certain questions, the following questions are hidden and the applicant can proceed to the next section. This branching ensures that the applicant only sees questions relevant to them as they are moving through the form.
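
The branching described above can be sketched as a small tree walk. This is only an illustrative sketch, not Culper's actual implementation: the `Question` class, the `visible_questions` helper, and the question keys are all hypothetical names chosen for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Question:
    """A form question (hypothetical structure, for illustration only)."""
    key: str
    text: str
    # Follow-up questions that are shown only when this question is answered "Yes".
    follow_ups: list[Question] = field(default_factory=list)


def visible_questions(questions: list[Question], answers: dict[str, str]) -> list[str]:
    """Return the keys of the questions the applicant should currently see."""
    visible = []
    for question in questions:
        visible.append(question.key)
        # Follow-ups stay hidden unless the gating question was answered "Yes".
        if answers.get(question.key) == "Yes":
            visible.extend(visible_questions(question.follow_ups, answers))
    return visible


# Hypothetical section: one gating yes/no question with two follow-ups.
section = [
    Question(
        "has_foreign_travel",
        "Have you traveled outside the U.S.?",
        [
            Question("trip_country", "Which country did you visit?"),
            Question("trip_dates", "When did you travel?"),
        ],
    ),
    Question("next_topic", "First question of the next topic"),
]

print(visible_questions(section, {"has_foreign_travel": "No"}))
```

With a "No" answer, only the gating question and the next topic are returned, so the applicant skips the follow-ups entirely.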

Insight: Paper forms or e-QIP are not easily accessible from devices other than a desktop computer.

Improvement: We built the new system as a responsive web application. This means that applicants can access the SF forms from any desktop or mobile device. The layout of the application responds to each device size, making it easier for applicants to use the system from home, an office, or on the go using a tablet or smartphone.

Insight: Applicants are worried about saving their information between multiple sessions filling out their forms.

Improvement: As applicants are filling out their forms, the system will autosave their progress. This helps to boost confidence that their information will be safe if they leave the page or sign out.

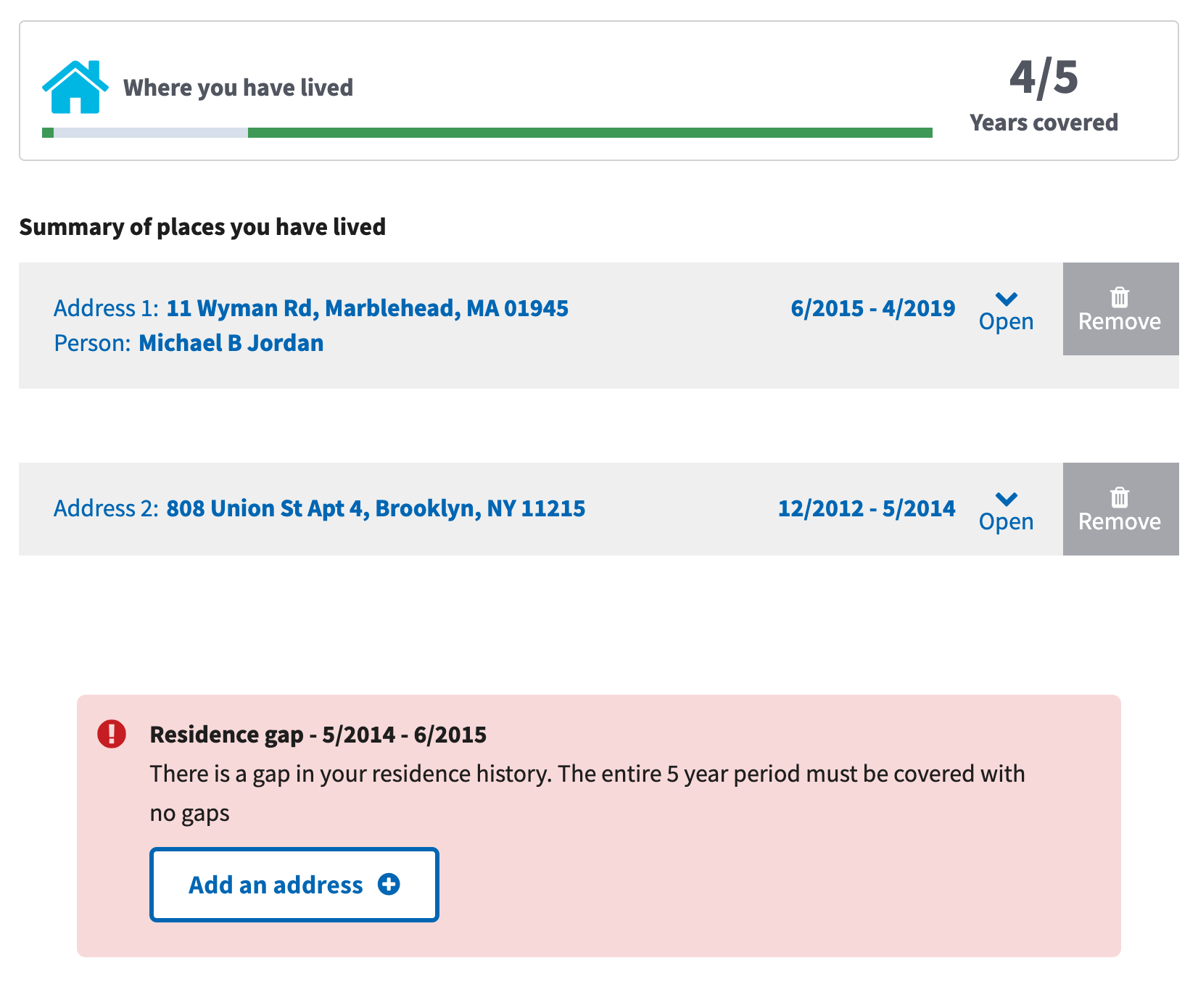

Insight: Addresses account for the most inaccuracies across the entire form.

Improvement: We incorporated a U.S. Postal Service address validation tool to validate many of the addresses across the form. Validating and helping the applicant to correct these addresses before submission helps to cut down the number of errors/kickbacks from an agency reviewer.

Insight: Remembering information from past experiences (as far as 5-10 years back) is difficult.

Improvement: We built a timeline validation tool into each section of the form that requests a series of events over a specific period of time. This tool helps applicants see which parts of the time period they have already covered and which parts are still missing data.

Each of these timeline sections of the form will also show the applicant gap errors when they are missing information within a certain period of time.
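
One way to detect such gaps is to sort the entered date ranges and sweep through the required window. The sketch below is a hedged illustration rather than the project's own code: `find_gaps` is a hypothetical helper, and it assumes day-level granularity with inclusive start and end dates.

```python
from datetime import date, timedelta

ONE_DAY = timedelta(days=1)


def find_gaps(entries, window_start, window_end):
    """Return the (start, end) date ranges inside the window that no entry covers.

    `entries` is a list of (start, end) date tuples; all dates are inclusive.
    """
    gaps = []
    cursor = window_start  # earliest day not yet known to be covered
    for start, end in sorted(entries):
        if end < cursor:
            continue  # entry lies entirely inside time that is already covered
        if start > window_end:
            break  # remaining entries begin after the required window
        if start > cursor:
            gaps.append((cursor, start - ONE_DAY))
        cursor = max(cursor, end + ONE_DAY)
    if cursor <= window_end:
        gaps.append((cursor, window_end))
    return gaps


# Hypothetical residence history with a six-month hole in 2016.
residences = [
    (date(2015, 1, 1), date(2016, 6, 30)),
    (date(2017, 1, 1), date(2019, 12, 31)),
]
for gap_start, gap_end in find_gaps(residences, date(2015, 1, 1), date(2019, 12, 31)):
    print(f"Missing information from {gap_start} to {gap_end}")
```

Overlapping entries are handled by the moving `cursor`, so an applicant who lists two addresses for the same months is not shown a false gap.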

Insight: Applicants can be overwhelmed with errors all at once.

Improvement: Many of the fields across the system validate in real time. This means that an applicant will know if they have made a mistake within a form field as soon as they leave that field. This helps to catch errors quickly and reduces the number of errors that an applicant has to fix across the form.

Improvement: Applicants are shown a section review page after each section that they complete. These review pages validate the full SF form in smaller increments so that the applicant is not overwhelmed with a large number of flags at the end of the form. They also allow applicants to double-check their information at multiple points throughout the form.
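
The two improvements above can share the same validation rules: a single field is checked when the applicant leaves it, and a section review reruns the checks for every field in that section. The following is a minimal sketch under that assumption. The section name, field names, rules, and error messages are hypothetical and are not taken from the real forms or from Culper's code.

```python
from __future__ import annotations

import re
from typing import Callable, Optional

# A validator returns an error message, or None when the value is acceptable.
Validator = Callable[[str], Optional[str]]


def required(value: str) -> Optional[str]:
    return None if value.strip() else "This field is required."


def ssn_format(value: str) -> Optional[str]:
    if re.fullmatch(r"\d{3}-\d{2}-\d{4}", value):
        return None
    return "Use the format 123-45-6789."


# Hypothetical rule table: section -> field -> ordered validators.
SECTIONS: dict[str, dict[str, list[Validator]]] = {
    "identification": {
        "full_name": [required],
        "ssn": [required, ssn_format],
    },
}


def validate_field(section: str, field_name: str, value: str) -> Optional[str]:
    """Run when the applicant leaves a field; returns the first error found."""
    for check in SECTIONS[section][field_name]:
        error = check(value)
        if error:
            return error
    return None


def review_section(section: str, answers: dict[str, str]) -> dict[str, str]:
    """Run on the section review page; collects errors for that section only."""
    errors = {}
    for field_name in SECTIONS[section]:
        error = validate_field(section, field_name, answers.get(field_name, ""))
        if error:
            errors[field_name] = error
    return errors
```

Calling `validate_field("identification", "ssn", "123456789")` returns the format message immediately, while `review_section` limits the error list to one section at a time instead of the whole form.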

Usability testing: Throughout development the product and design team conducted regular usability testing sessions with a wide variety of users. These sessions were done in person, remotely via video chat, or using usertesting.com. More than 100 people have participated in these usability sessions with roughly 5-7 participants testing per sprint. These sessions helped the team iteratively improve the UI as well as the organization of the form itself.

Take a look at an example of usability testing feedback and solution exploration. (Usability findings presentation)

User acceptance testing (UAT): In addition to usability testing, UAT has been conducted throughout the development process. UAT focuses on finding bugs or regressions in the software and is done less frequently than usability testing. Issues or bugs found during UAT are captured and tracked in an internal NBIS tracking tool. In 2018 and 2019, OPM and the Army did the majority of the testing. In the summer of 2019, more agencies are being onboarded to test as part of the full Investigation Management testing process.

The majority of the content presented to applicants is part of a set of standard Federal Investigation forms. Each form corresponds to a particular level of clearance that an applicant is applying for. Much of the content within these forms overlaps and is based on a tiered system. The SF-86 is the largest of the forms, while the SF-85P and the SF-85 are subsets of that larger form with their own small differences. An audit and comparison of all content across these forms was necessary in order to build a flexible form system. This work was tracked in a shared tracking document and was regularly used during planning and development of each form type.
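
A tiered relationship like this can be modeled as one shared catalog of sections, each tagged with the forms it appears in. The sketch below only illustrates that idea: the section names are placeholders, not the actual contents of any SF form, and `SECTION_FORMS` and `sections_for` are hypothetical names.

```python
# Placeholder catalog: each section lists the form types that include it.
# Real forms also have small per-form differences that a simple membership
# table like this does not capture.
SECTION_FORMS = {
    "section_a": {"SF-86", "SF-85P", "SF-85"},
    "section_b": {"SF-86", "SF-85P"},
    "section_c": {"SF-86"},
}


def sections_for(form_type: str) -> list[str]:
    """Return the sections to render for the given form type, in catalog order."""
    return [name for name, forms in SECTION_FORMS.items() if form_type in forms]


for form_type in ("SF-85", "SF-85P", "SF-86"):
    print(form_type, sections_for(form_type))
```

Keeping a single catalog means a change to a shared section is made once and applies to every form type that includes it.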

Our development setup and other engineering resources are documented in this repository's README file.

The name Culper refers to the first counterintelligence operation in American history. The effort was organized by American Major Benjamin Tallmadge under orders from General George Washington in the summer of 1778, during the British occupation of New York City at the height of the American Revolutionary War.

The name is fitting due to the app’s pivotal role in recording background information on individuals and using it to vet them for security clearances.