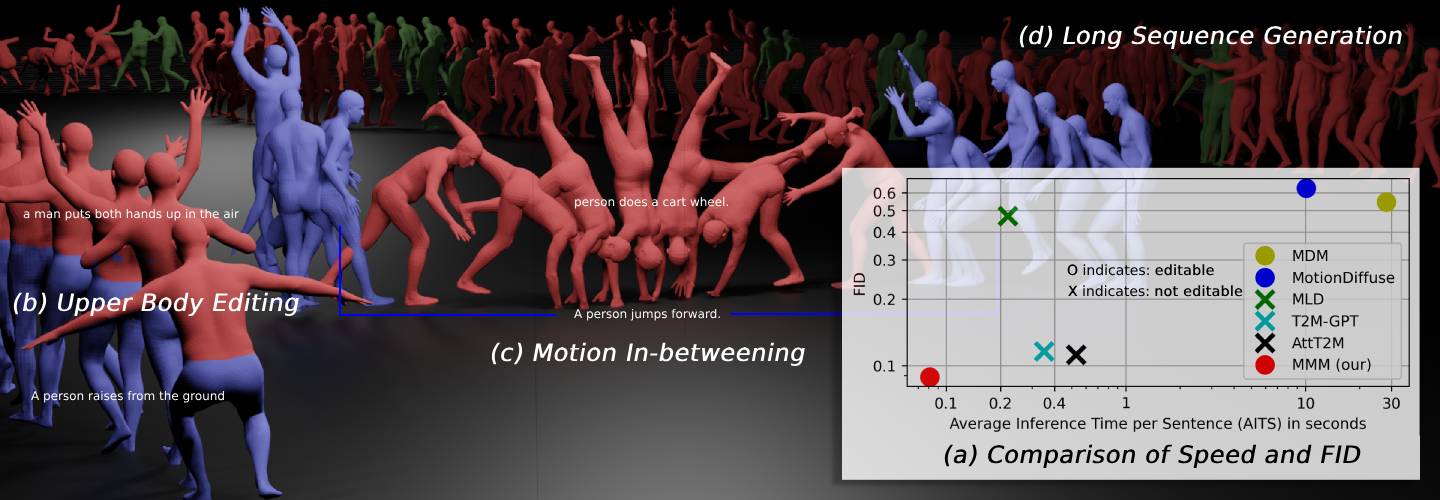

MMM: Generative Masked Motion Model (CVPR 2024, Highlight)

The official PyTorch implementation of the paper "MMM: Generative Masked Motion Model".

Please visit our webpage for more details.

If our project is helpful for your research, please consider citing :

@inproceedings{pinyoanuntapong2024mmm,

title={MMM: Generative Masked Motion Model},

author={Ekkasit Pinyoanuntapong and Pu Wang and Minwoo Lee and Chen Chen},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2024},

}

📢 July/2/24 - Our new paper (BAMM) is accepted at ECCV 2024

📢 June/10/24 - Update pretrain model with FID. 0.070 (using batchsize 128)

📢 June/8/24 - Interactive demo is live at huggingface

📢 June/3/24 - Fix generation bugs & add download script & update pretrain model with 2 local layers (better score than reported in the paper)

conda env create -f environment.yml

conda activate MMM

If you have a problem with the conflict, you can install them manually

conda create --name MMM

conda activate MMM

conda install plotly tensorboard scipy matplotlib pytorch torchvision pytorch-cuda=11.8 -c pytorch -c nvidia

pip install git+https://github.com/openai/CLIP.git einops gdown

pip install --upgrade nbformat

bash dataset/prepare/download_glove.sh

We use the same extractors provided by t2m to evaluate our generated motions. Please download the extractors.

bash dataset/prepare/download_extractor.shbash dataset/prepare/download_model.shbash dataset/prepare/download_model_upperbody.shWe are using two 3D human motion-language dataset: HumanML3D and KIT-ML. For both datasets, you could find the details as well as download link [here].

Take HumanML3D for an example, the file directory should look like this:

./dataset/HumanML3D/

├── new_joint_vecs/

├── texts/

├── Mean.npy # same as in [HumanML3D](https://github.com/EricGuo5513/HumanML3D)

├── Std.npy # same as in [HumanML3D](https://github.com/EricGuo5513/HumanML3D)

├── train.txt

├── val.txt

├── test.txt

├── train_val.txt

└── all.txt

python train_vq.py --dataname t2m --exp-name vq_name

python train_t2m_trans.py --vq-name vq_name --out-dir output/t2m --exp-name trans_name --num-local-layer 2

- Make sure the pretrain vqvae in

output/vq/vq_name/net_last.pth --num-local-layeris number of cross attention layer- support multple gpus

export CUDA_VISIBLE_DEVICES=0,1,2,3 - we use 4 gpus, increasing batch size and iteration to

--batch-size 512 --total-iter 75000 - The codebook will be pre-computed and export to

output/vq/vq_name/codebook(It will take a couple minutes.)

python GPT_eval_multi.py --exp-name eval_name --resume-pth output/vq/2024-06-03-20-22-07_retrain/net_last.pth --resume-trans output/t2m/2024-06-04-09-29-20_trans_name_b128/net_last.pth --num-local-layer 2

The log and tensorboard data will be in ./output/eval/

--resume-pthpath for vevae--resume-transpath for transformer

python generate.py --resume-pth output/vq/2024-06-03-20-22-07_retrain/net_last.pth --resume-trans output/t2m/2024-06-04-09-29-20_trans_name_b128/net_last.pth --text 'the person crouches and walks forward.' --length 156The generated html is in output folder.

For better visualization of motion editing, we provide examples of all editing tasks in Jupyter Notebook. Please see the details in:

./edit.ipynbThis code is distributed under an LICENSE-CC-BY-NC-ND-4.0.

Note that our code depends on other libraries, including CLIP, SMPL, SMPL-X, PyTorch3D, T2M-GPT, and uses datasets that each have their own respective licenses that must also be followed.