An Attention-based Transformer for Neural Question-Answering on Knowledge Graph, via Sequence-to-sequence Approach, with Automatic Templates Generation from Long Text.

This project is Stuart Chen's Google Summer of Code (GSoC) 2019 research, in collaboration with DBpedia and the AKSW Research Group.

Here is the website for blogging the research development.

- Python 3.6

- TensorFlow 1.14.0

- TensorFlow Hub

- NumPy

- NLTK

- spaCy

- Unidecode

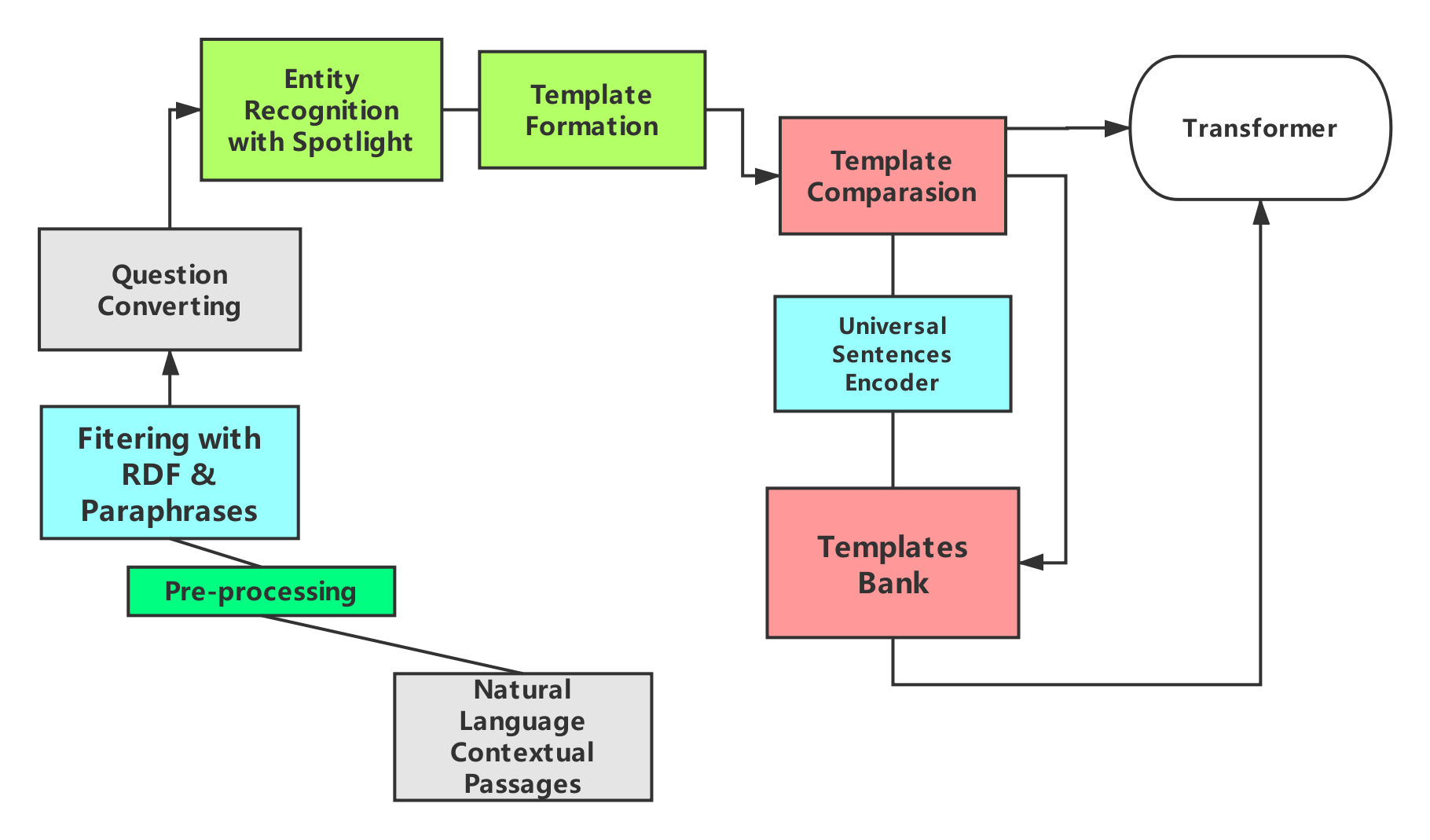

- xmldict

In this project, I propose a methodology that leverages the Transformer for long-context question answering over a knowledge graph. The main pipeline uses a named-entity annotator and a syntactic parser to generate question templates from passages. A template is a question that contains entities and relations of the knowledge graph. When the templates are stored, they are embedded as universal sentence encodings so that they can be categorized by semantic similarity, which also avoids redundancy in storage. We then train a Transformer on the templates to translate a natural language question with annotated triples into SPARQL, which retrieves the answer from the knowledge graph. In the evaluation, the answer accuracy rose from the previous 0.93% to 11.4%.

To show the workflow, the model architecture is like:

- To begin with, please install all the dependencies listed in `requirements.txt`. Before running any of the scripts, make sure that this repository folder has been added to the system path `$PYTHONPATH`. You also need to download the spaCy model `en-core-web-sm==2.1.0`; see the instructions on the official spaCy page. Finally, please create a folder called 'glove2wordvec' in 'neural-qa/data' and put the word2vec file into it.

This component automates template generation from long text, with the help of Universal Sentence Encoder, DBpedia-Spotlight, DBpedia-Lookup, NLTK, and spaCy.

We need abundant natural language text to obtain more questions with DBpedia RDF triples and transform them into templates.

For example, to get the articles about Barack Obama (dbr:Barack_Obama), set DBR_NAME=Barack_Obama and then run:

neural-qa/templates_generator> python questions_generate_main.py --dbo_class=$DBR_NAME

Here, the variable `$DBR_NAME` should be a specific entity, such as `Barack_Obama`.

The script automatically creates a Bank directory in the neural-qa/data/ folder to save the articles.

The script sentences_filter.py selects the sentences that are pertinent to the RDFs we need.

The question_convertor.py is the part responsible for converting the caught sentences to template-questions with entity placeholders.
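The placeholder substitution can be illustrated with a minimal sketch. This is not the code of question_convertor.py, which also relies on entity annotation and syntactic parsing; the function name `to_template` and its parameters are hypothetical, and the sketch assumes the entity's surface form is already known.

```python
import re


def to_template(sentence, entity_label, placeholder="<A>"):
    """Replace every whole-word mention of entity_label with a placeholder.

    Hypothetical helper for illustration only; the real pipeline finds the
    entity mention with an annotator instead of receiving it as an argument.
    """
    pattern = re.compile(r"\b" + re.escape(entity_label) + r"\b", re.IGNORECASE)
    return pattern.sub(placeholder, sentence)


if __name__ == "__main__":
    print(to_template("Where was Barack Obama born?", "Barack Obama"))
    # prints: Where was <A> born?
```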

e.g. She was born in France? --> where <A> was born in ?

1.4. Matching these questions against the template questions in existing template sets with Universal Sentence Encoder

sentence_encoder.py builds on the implementation of Universal Sentence Encoder[1], which is efficient at semantic sentence matching. It checks whether an existing template corresponds to the new question that we have.
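The matching step can be sketched as a nearest-neighbour search over sentence vectors. The sketch below is an assumption-laden illustration, not sentence_encoder.py itself: the short lists stand in for Universal Sentence Encoder embeddings, cosine similarity is assumed as the score, and the threshold value of 0.8 and the function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 for a zero vector)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def best_match(query_vec, template_vecs, threshold=0.8):
    """Return (index, score) of the most similar template.

    The index is None when no template reaches the (hypothetical) threshold,
    in which case the question would go on to query composing.
    """
    best_idx, best_score = None, -1.0
    for i, vec in enumerate(template_vecs):
        score = cosine(query_vec, vec)
        if score > best_score:
            best_idx, best_score = i, score
    if best_score < threshold:
        return None, best_score
    return best_idx, best_score


if __name__ == "__main__":
    templates = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # toy stand-ins for embeddings
    print(best_match([0.9, 0.1, 0.0], templates))   # matches template 0
    print(best_match([1.0, 1.0, 1.0], templates))   # below threshold, index is None
```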

1.5. If the matching similarity score does not pass the threshold, the questions go to the query composing part

To use the pipeline, please run templates_generate_main.py after step 1 above:

python templates_generate_main.py --dbo_class=$DBO_CLASS --temps_fpath=$EXISTING_TEMPLATES_FILE_PATH --text_fpath=$TEXT_FILE_PATH --ntriple_fpath=$NTRIPLES_FILE_PATH --train_vec=$WHETHER_TO_TRAIN_THE_VECTOR --vecpath=$FILE_PATH_THAT_SAVES_VECTORS --temp_save_path=$FILE_PATH_SAVING_RESULTS

This automatically starts the pipeline.

Please have a look at the parameters:

- `--dbo_class=$DBO_CLASS`: `$DBO_CLASS` should be an ontology category, such as `Person` or `Monument`.

- `--temps_fpath=$EXISTING_TEMPLATES_FILE_PATH`: `$EXISTING_TEMPLATES_FILE_PATH` should be the file path of the template set for the DBpedia entity resource (dbr). For example, for `Barack_Obama` we should use the template set for `Person`.

- `--text_fpath=$TEXT_FILE_PATH`: `$TEXT_FILE_PATH` should be the text article extracted from the Wikipedia page.

- `--ntriple_fpath=$NTRIPLES_FILE_PATH`: this should be the ntriple file.

- `--train_vec=$WHETHER_TO_TRAIN_THE_VECTOR`: by default the prepared vectors are used; set it to `True` if you want to train the vectors with Universal Sentence Encoder.

- `--vecpath=$FILE_PATH_THAT_SAVES_VECTORS`: the file path where the vectors are stored.

- `--temp_save_path=$FILE_PATH_SAVING_RESULTS`: the file path where you want to save the newly generated template set.

To find the automatically saved ntriple and text files, go to neural-qa/data/Bank/DBresources/. There you will see a folder corresponding to the entity's ontology category. For example, `Barack_Obama` is in the category `Person`, so the ntriple file and the text file can be found in the folder neural-qa/data/Bank/DBresources/Person/Barack_Obama.
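The folder layout described above can be expressed as a small path helper. This is only a sketch under assumptions: the function `bank_paths` is hypothetical and not part of the repository, and the `.txt` and `.ntriples` file extensions are taken from the example command in this README rather than from the scripts themselves.

```python
import os


def bank_paths(bank_root, dbo_class, entity):
    """Build the expected text and ntriple file paths for one entity.

    Hypothetical helper: bank_root is the DBresources folder inside the Bank
    directory, dbo_class the ontology category, entity the resource name.
    """
    entity_dir = os.path.join(bank_root, dbo_class, entity)
    return {
        "text_fpath": os.path.join(entity_dir, entity + ".txt"),
        "ntriple_fpath": os.path.join(entity_dir, entity + ".ntriples"),
    }


if __name__ == "__main__":
    paths = bank_paths("neural-qa/data/Bank/DBresources", "Person", "Barack_Obama")
    for flag, path in sorted(paths.items()):
        print("--{}={}".format(flag, path))
```

The returned keys mirror the `--text_fpath` and `--ntriple_fpath` flags so that the values can be passed straight to the command line.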

One result of our work can be seen here; it helps to clarify the structure of the Templates Bank directory, with the output results inside Bank\DBresourses\Person\Barack_Obama.

For example, to run the program for dbr_Barack_Obama, use the command below:

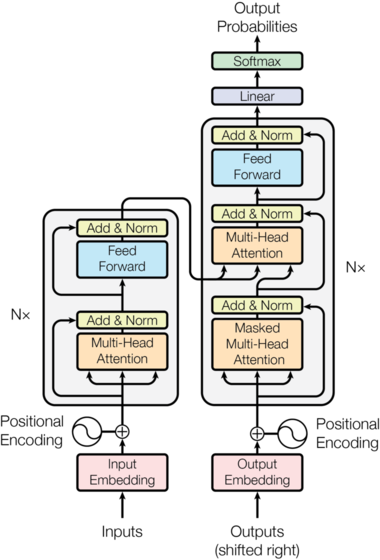

neural-qa/templates_generator> python templates_generate_main.py --dbo_class=Person --temps_fpath=../data/annotations_Person.csv --text_fpath=../data/Bank/DBresourses/Person/Barack_Obama/Barack_Obama.txt --ntriple_fpath=../data/Bank/DBresourses/Person/Barack_Obama/Barack_Obama.ntriples --vecpath=../data/Bank/DBresourses/Person/Barack_Obama/Barack_Obama.vectors --temp_save_fpath=../data/Bank/DBresourses/Person/Barack_Obama/Barack_Obama.template.csv

The implementation of this neural Transformer part is inspired by the paper Attention Is All You Need[2] and its official TensorFlow model[3].

We use the templates in CSV format provided by SPARQL as a Foreign Language[4] to generate the training data for the experiments.

The generated data consists of two parts: data.en, the source data, and data.sparql, the target data.

data.en contains the natural language questions, with RDF entities annotated, that are to be translated into the RDF query language SPARQL, as in this example:

"who is the spouse of dbr_Barack_Obama ?"

"who is the partner of dbr_Audrey_Hepburn ?"

...
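To illustrate how such parallel lines arise from a template, here is a minimal sketch that fills a placeholder with entity names. It is not the logic of generator.py: the function `instantiate` is hypothetical, the target is written as plain SPARQL for readability (the real data.sparql may use a different encoding), and the query template with `dbo:spouse` is an assumed example.

```python
def instantiate(question_tmpl, query_tmpl, entities, placeholder="<A>"):
    """Fill one question/query template pair with each entity name.

    Returns a list of (source_line, target_line) tuples, i.e. one candidate
    line for data.en and one for data.sparql per entity.
    """
    pairs = []
    for entity in entities:
        question = question_tmpl.replace(placeholder, "dbr_" + entity)
        query = query_tmpl.replace(placeholder, "dbr:" + entity)
        pairs.append((question, query))
    return pairs


if __name__ == "__main__":
    pairs = instantiate(
        "who is the spouse of <A> ?",
        "select ?x where { <A> dbo:spouse ?x }",  # assumed query template
        ["Barack_Obama", "Audrey_Hepburn"],
    )
    for question, query in pairs:
        print(question, "->", query)
```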

To begin with, please run the data generation:

- This command must be run in Python 2.7, since it comes from the previous project.

cd neural-qa/

mkdir data/QALD7

neural-qa> python generator.py --transformer=True --templates data/QALD-7.csv --output data/QALD7

After this, a script converts the data into a training set and a validation set and builds the vocabulary:

cd neural-qa/transformer_atten/transformer

Then make a folder named 'data' in the transformer folder, make a folder QALD7 inside it, and copy the generated data files into the ./data/QALD7/ folder:

neural-qa/transformer_atten/transformer> python data_preprocess.py --data_dir=./data/QALD7

Then, we need to pre-process the data, build the vocabulary file, and split the data into a training set and a validation set:

neural-qa/transformer_atten/transformer> python transformer_main.py --data_dir=./data/QALD7/DATA_DIR --model_dir=./data/QALD7/model_QALD7 --vocab_file=./data/QALD7/vocab.en_sparql --param_set=big

- Please make sure the folders and paths that have been set in the commands already exist.

- We strongly encourage using a previously generated dataset, which can be found here. Put it in neural-qa/transformer_atten/transformer, decompress the zipped file, and put the result in neural-qa/transformer_atten/transformer/data/QALD7.

To conduct the training, please note the parameters to set:

- Please put all the tfrecord files in neural-qa/transformer_atten/transformer/data/QALD7/DATA_DIR/ to prevent runtime issues.

PARAM_SET=big

DATA_DIR=$path/to/the/data

MODEL_DIR=$path/to/your/model

VOCAB_FILE=$DATA_DIR/vocab.en_sparql

- A side note: please make sure the generated training data is in a folder that contains nothing but the data, and put the generated tfrecords into a DATA_DIR folder in transformer/data/QALD7; otherwise a tf.errors.DataLossError might be raised. The model is prone to thread-handling problems and corrupted-data loss errors. The only workable solution we know of is to put the tfrecords in a separate folder and to make sure that read/write access to the files inside is already granted.
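Before training, the conditions in the side note can be checked with a few lines of standard-library Python. This is a rough sketch, not part of the repository: the function `check_data_dir` is hypothetical, and because the tfrecord file names are not specified here, it only flags subdirectories, empty files, and files without read/write access.

```python
import os


def check_data_dir(data_dir):
    """Return a list of human-readable problems found in data_dir.

    An empty list means every entry is a non-empty regular file that the
    current user can read and write.
    """
    if not os.path.isdir(data_dir):
        return ["missing directory: " + data_dir]
    problems = []
    for name in sorted(os.listdir(data_dir)):
        path = os.path.join(data_dir, name)
        if os.path.isdir(path):
            problems.append("unexpected subdirectory: " + name)
        elif not os.access(path, os.R_OK | os.W_OK):
            problems.append("no read/write access: " + name)
        elif os.path.getsize(path) == 0:
            problems.append("empty file: " + name)
    return problems


if __name__ == "__main__":
    for problem in check_data_dir("./data/QALD7/DATA_DIR"):
        print(problem)
```

Passing this check does not rule out a DataLossError; it only covers the folder and permission conditions named above.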

In our experiment, we use the command below:

python transformer_main.py --data_dir=./data/QALD7/DATA_DIR --model_dir=./data/QALD7/model_QALD7 --vocab_file=./data/QALD7/vocab.en_sparql --param_set=big

For more instructions, please refer to the official model.

NOTE: Because the current official TensorFlow model still has a potential issue, we strongly recommend training it on CPU, or checking the CUDA environment first, in case the memory runs out and the threads get killed.

- Training Time


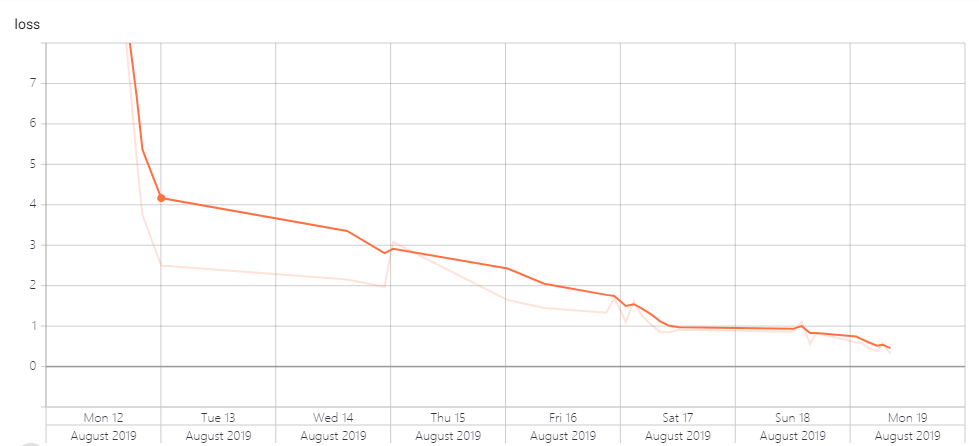

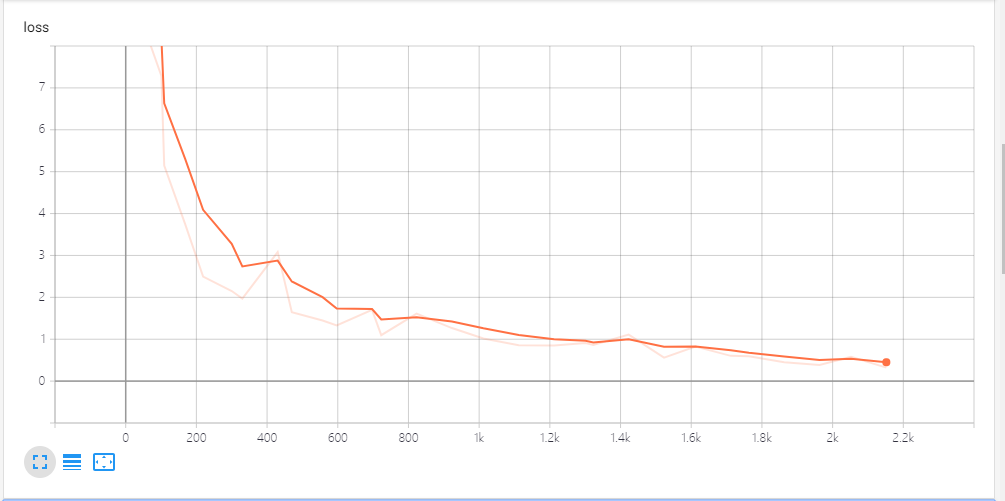

Loss

This shows the cross-entropy loss while training:


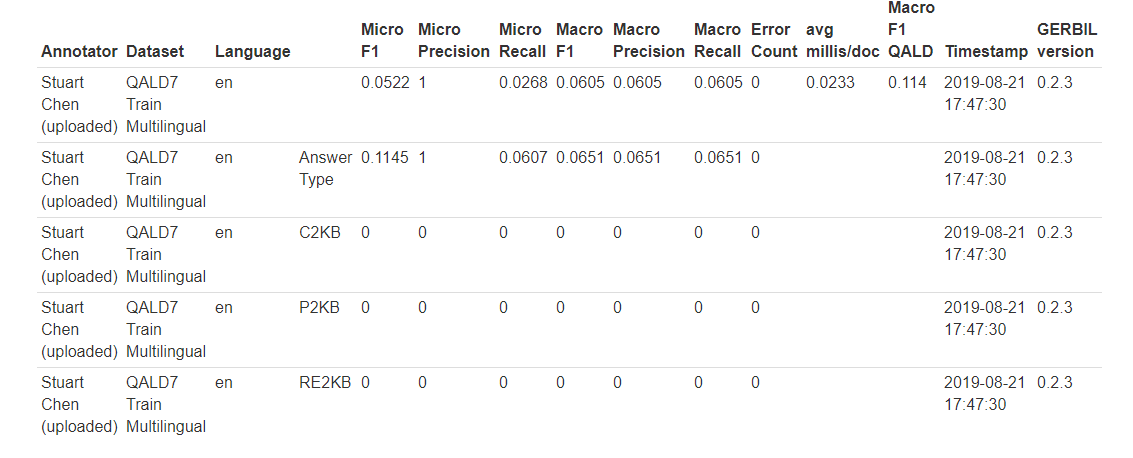

GERBIL Evaluation

The table shows the evaluation result for the QALD-7 benchmark:

GERBIL is an online platform for evaluating question answering with the F1-score derived from the confusion matrix, and this table shows the answering accuracy of the model's output.
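For reference, precision, recall, and F1 for a single question can be computed from the predicted and gold answer sets as follows. This is a generic sketch of the standard definitions, not GERBIL's code; in particular, how GERBIL treats empty answer sets and how it averages over questions is not reproduced here, and the function name is hypothetical.

```python
def precision_recall_f1(predicted, gold):
    """Compute precision, recall, and F1 for one question's answer sets."""
    predicted, gold = set(predicted), set(gold)
    tp = len(predicted & gold)   # correct answers returned
    fp = len(predicted - gold)   # wrong answers returned
    fn = len(gold - predicted)   # correct answers missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(precision_recall_f1(
        ["dbr:Michelle_Obama", "dbr:Chicago"],
        ["dbr:Michelle_Obama"],
    ))
```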

I am so glad to have had this scientific research experience with the excellent researchers from Google and DBpedia. During our research I gained deeper insights into natural language processing, knowledge graphs, and deep learning. It has deepened my thinking about scientific research and ignited an unquenchable curiosity about artificial intelligence.

So here, I want to talk about this project. We are using long natural language text to generate the question templates for reasoning on the knowledge graph.

We also tried to make the best use of the state-of-the-art attention-based Transformer model as the learner that translates natural language questions into SPARQL queries over the graph-structured relational database.

What's more, we want to make the system a never-ending learner, as in Never-Ending Learning for Open-Domain Question Answering over Knowledge Bases[5], so that it keeps accumulating knowledge in a long loop. I believe this is a crucial key to artificial general intelligence.

In the beginning, that is, in the initial proposal, we wanted to use graph embeddings with reinforcement learning for the SQuAD machine reading comprehension task. Gradually, however, we realized that the performance of the neural SPARQL machine depends heavily on the training data, which makes it crucial to automate template generation from long contextual passages. Wikipedia is a wonderful source of articles relevant to DBpedia RDF triples, so we decided to develop an intelligent neural SPARQL machine with automated template generation, comparison, and accumulation, as a step toward a never-ending-learning intelligent agent.

Of course, during the coding I encountered many difficulties, such as running the benchmark evaluations and other tough obstacles, but looking back, I think these problems helped me grow a great deal. I learned more and more about the newest tools in the industry and became more familiar with international coding standards, which opened the door to a bigger world. For example, to calculate the vector similarity for matching existing templates, we first used word mover's distance with GloVe vectors via gensim, but found it too heavy and too slow; we then switched to spaCy and found it much faster. Soon after, we found that Universal Sentence Encoder performs even better on this task, which was a huge step forward in our development.

Another thing I still remember is the paraphrasing of the predicates. We initially planned to load all the phrases into RAM and do the matching there, but the file was huge, more than 17.6 GB. Then I found that WordNet from NLTK can accomplish this paraphrasing task without such a cost, which is a smart solution.

We hope to continue improving the question generation and to include ASK queries, queries that require a filter (how many, how much, etc.), and complex queries as well, because we believe this will further improve the performance of neural SPARQL machines.

As an extension of this project, I continued to work on improving the answering accuracy of the model by making thorough use of the knowledge vector space to infer the answer. Unlike other neural methodologies, which concentrate on optimizing the SPARQL query generation to get the correct entity, I took a step further: leveraging the embeddings of the relevant triples in the question to infer its answer as a vector, with a reinforcement learning algorithm optimizing the vector distance in the embedding space.

[1] Daniel Cer et al. (2018) Universal Sentence Encoder

[2] Ashish Vaswani et al. (2017) Attention Is All You Need

[3] TensorFlow - Official Models: https://github.com/tensorflow/models

[4] Tommaso Soru et al. (2017) SPARQL as a Foreign Language

[5] Abdalghani Abujabal et al. (2018) Never-Ending Learning for Open-Domain Question Answering over Knowledge Bases

[6] Rajarshi Das et al. (2017) Question Answering on Knowledge Bases and Text using Universal Schema and Memory Networks

[7] Haitian Sun et al. (2018) Open Domain Question Answering Using Early Fusion of Knowledge Bases and Text

[8] Svetlana Stenchikova et al. (2018) QASR: Spoken Question Answering Using Semantic Role Labeling