-

-

Notifications

You must be signed in to change notification settings - Fork 241

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Extraction of 5GB (ZIP64) archive created with Go failed #423

Comments

|

First time using Go. Can you provide the script used for the compression? |

|

@philip-firstorder I just need an example that sets the Store method. |

|

Maybe I'm just missing how to call the bundled zip package in Go? |

|

I'll take a look at #422 once I can create Store packages |

|

Just use this: https://golangcode.com/create-zip-files-in-go/ |

|

Did a quick test and it results in a corrupted file. I'm gonna do some more tests. |

|

If you can manage to unarchive from terminal using the unzip command. Or using The Unarchiver (Mac/7zip (Windows), then the archive is not really corrupted. |

|

My test consist in a big MKV file (~5GB) and another file of indifferent size, similar to the test you've reported. Both 7-Zip and The Unarchiver warn there's some corruption. The Unarchiver, after dismissing the warning, extracts properly the big file and ignores the little one while 7-Zip extracts both. Using Keka in the default configuration throws an error message and the result files are the same as with The Unarchiver, since it uses So even if the file can be read and extracted, I think either the script or the Go library is creating bad headers. |

|

Thank you for your tests, It’s very good you could reproduce the error. If the go library creates bad headers, then we need to find out what those are. Can you compare their headers with a valid archive to see the differences? |

|

I did more tests archiving/unarchiving an Archive 5GB.zip file together with a Small image.png here are the unarchiving results: Go.zip: archived with GoArchive Utility (10.11): Error 2 - No such file or directory => Nothing extracted Keka.zip: archived with Keka (1.1.17)Archive Utility (10.11): Error 2 - No such file or directory => Nothing extracted Archive Utility.zip: archived with Finder (macOS 10.14.5)Archive Utility (10.11): No errors => Both files successfully extracted |

|

And here are the directory records for all the files:

|

|

Just to be clear, let me know if this assumptions are correct:

|

|

Yes. To be precise: macOS 10.14.5 (18F132). It's very interesting how Finder compresses the big files, seems totally not standard. Also I noticed that Keka doesn't set the "UT extra field modtime" |

64 bit ZIP are badly build using Finder at least since Mac OS X 10.9, already investigated that one on #18. macOS 10.15 has enhancements to the Archive Utility, did not checked it yet but most probably they finally fixed that one. |

|

Let's hope they did, everyone is angry at them on the forums :) Anyways zip64 will be more and more used, so we need to solve these problems for all standard vendors as it will only get worse with time. For unarchiving I also updated the Keka results using both unar and p7zip. For archiving I used the default settings. |

It does store it, just in another way: This should be enhanced.

Just did a quick test in the beta, sadly I think it still does it wrong. |

I can't reproduce this one, works for me.

Also the error from Archive Utility looks like this in my tests: |

|

Can you try with these 5.72 GB archives that I used for my tests? They all contain these same 2 files: |

|

With your test files I get the same results as you. Thanks for providing them! |

|

Nice! Did you also try to archive the 2 files on your side with Keka and then unarchive with all the vendors to see if you get the same messages? This way we know if the different behaviour comes from the archiving stage or the unarchiving stage. |

Yes did that and got the same results. |

|

Very good, now you need to identify what field causes the problem. This is a difficult bug, but if we can identify it then we can open tickets also for the other vendors to make sure they don't create bad archives that our clients cannot open. |

|

I don't get why the latest The Unarchiver 4.1.0 fails. The Unarchiver 3.11.1 and |

|

Do you have a link for the 4.1.0 sources so I can check the differences? |

|

I think it's no more open source. You have the XADMaster sources, but that one works. |

|

I also did some tests today with 2 blank files: Archived them with Go and Keka and inspected them with Hex Fiend: Go.zipThe selected bytes is the data descriptor of the previous file, which Keka doesn't have.This was a bug that appended an empty json {} followed by a new line characted. I corrected it, the 3 bytes should not appear anymore. Keka.zip |

|

I got it now. Go does not fill the disk number in the ZIP64 extra data: // append a zip64 extra block to Extra

var buf [28]byte // 2x uint16 + 3x uint64

eb := writeBuf(buf[:])

eb.uint16(zip64ExtraId)

eb.uint16(24) // size = 3x uint64

eb.uint64(h.UncompressedSize64)

eb.uint64(h.CompressedSize64)

eb.uint64(h.offset)

h.Extra = append(h.Extra, buf[:]...)XADMaster's // append a zip64 extra block to Extra

var buf [32]byte // 2x uint16 + 3x uint64 + 1x uint32

eb := writeBuf(buf[:])

eb.uint16(zip64ExtraId)

eb.uint16(28) // size = 3x uint64 + 1x uint32

eb.uint64(h.UncompressedSize64)

eb.uint64(h.CompressedSize64)

eb.uint64(h.offset)

eb.uint32(0) // Number of disk

h.Extra = append(h.Extra, buf[:]...)So here Go does it perfectly, since those fields need only to be present if they are informed. Looking at the rest of the code makes me think Go only creates a volume, not multi-volume archives. So the disk field should not be present in the extra block: Already fixed that one in the parser for 1.1.18. Thanks a lot for all the detailed information @philip-firstorder! |

|

This build should fix this issue: Keka-r3332 |

I didn't find this code anywhere, can you post a link to it? |

You have it there 😊 |

|

Ahhh! The modified one is not posted anywhere. I've modified in my local

With that I meant with their code (the first one). I'm editing the comment. |

Tested this and all files are correctly extracted in the Go folder, however: But it works, both methods unzip all contents, which I even checked in Hex Fiend for integrity. |

But in that case you forgot to add this line: eb.uint64(h.offset) |

I did not looked into

Right! Added that one. |

|

Can you create an archive with your modified go writer and then test it with p7zip? Cause then you can open a ticket to Go to fix it, because indeed they missed the extra flag. |

I did and it warn anyway.

The point is that those fields are not fixed. They only need to appear if in the local/central directory are set as 0xFFFF or 0xFFFFFFFF. Go does not create disks, so it does not inform that field. Since I did not checked |

|

I found this code in go reader, which is linked to this issue: // Should have consumed the whole header.

// But popular zip & JAR creation tools are broken and

// may pad extra zeros at the end, so accept those

// too. See golang.org/issue/8186.

for _, v := range b {

if v != 0 {

return ErrFormat

}

}I think go writer shouldn't add this extra line from you eb.uint32(0) // Number of disk linebecause they didn't set the corresponding disk to FFFFFFFF just few lines below: b = b[4:] // skip disk number start and internal file attr (2x uint16)However I think now that their assumption that popular zip & JAR tools are broken is wrong, as maybe that represents the extra disk flag |

|

Also a note regarding reading from LOCAL HEADERS from Streamed archives: The Compressed and Uncompressed length cannot be known beforehand, so they are set to 0. This information is put both in the data descriptor after each file data is streamed and also in the Central directory. In the archives generated with keka, the file sizes are also set in the Local headers, because the archive is not streamed. The unarchivers should ignore the local headers anyways, but was worth the mention. |

|

I wrote an email to Robert Rezabek from BetterZip (p7zip) which also is giving errors. If p7zip gets fixed then Keka (p7zip) and many other unarchivers will eventually get fixed. |

|

In p7zip 16.02 check out CInArchive::ReadExtra(...) in the file CPP/7zip/Archive/Zip/ZipIn.cpp. Looks right to me, all the checks for 0xFFFFFFFF are there. |

I don't have an environment to debug but I wonder is it's this line CPP/7zip/Archive/Zip/ZipIn.cpp#L674 if (remain != 0)

{

ExtraMinorError = true;

// 7-Zip before 9.31 created incorrect WsAES Extra in folder's local headers.

// so we don't return false, but just set warning flag

// return false;

}In 7zip 19.00 the same function was modified and looks like this: https://github.com/kornelski/7z/blob/cb75c2b5bf0d347114d59ff7ba9b51d435c01e40/CPP/7zip/Archive/Zip/ZipIn.cpp#L982 |

|

Igor from 7zip discussed here this problem. But I still get a warning message with 7zip v19.00 so I wrote to him in the thread to test the Go.zip.zip archive.

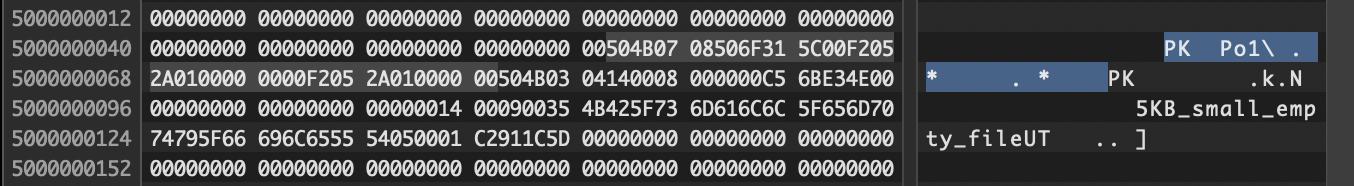

For example when compressed and uncompressed are 0xFFFFFFFF and offset is 0, they they add all 3 fields = 24 bytes, instead of just the first 2 maxed out ones = 16 bytes This is why for entry #1: 5GB_big_empty_file with offset 0 Go wrongly adds 24 bytes: Instead the Keka (p7zip) archive has 16 bytes (compressed and uncompressed): Also for entry #2: 5KB_small_empty_file Go adds all 24 bytes (compressed, uncompressed and offset) instead of ONLY 8 bytes (offset). Because for this second small file it is ONLY the offset value that is maxed out. I will open a pull request to Go to fix it. |

To reproduce you need to zip a file bigger than 4GB with another smaller file, in my case my resulting archive it 5GB.

ZIP Method: Storage

Archiver: https://golang.org/src/archive/zip/writer.go

Error when unarchiving:

I managed to unarchive from terminal using the

unzipcommand. Also using The Unarchiver (Mac), 7zip (Windows). So the archive is not corrupted.Here are the headers I printed from terminal.

The text was updated successfully, but these errors were encountered: