-

Notifications

You must be signed in to change notification settings - Fork 3

Part 2: Regression

What is Machine Learning (ML)?

(Source: @Yakoove, 2020 - ML seen as part of AI (left) and parts of ML only seen of AI (right) by some)

In the previous notes, we did not cover the definition and what ML aims to achieve. It is the study of algorithms by the use of data or observations (known as samples used as "training data"), which its models use to make predictions or decisions without being explicitly programmed, finding underlying patterns hidden in our data that are nothing more than functions or decision boundaries.

It's seen as a sub-field of AI (Artificial Intelligence), an intelligence demonstrated by machines that are without consciousness and emotionally displayed by humans and animals in natural intelligence.

Deep Learning is part of a broader family of machine learning methods based on ANN (Artificial Neural Networks) with Representation learning.

(Source: Serafeim Loukas, 2020)

These models learn from a labeled dataset that comes from some observations (e.g. images of animals, name of animals), and then are used to predict future events or classify.

- During training, input data which is a known training data set are provided alongside its corresponding labels (tied to the data set). The model would then be able to adjust parameters or weights of how it ought to identify any new unknown data set.

- After training, when provided with new unseen observations (or test data set), it is able to make predictions (predicted output) about it.

- It can be compared against its expected intended output (e.g. ground truth label) and find errors in order to modify itself accordingly (e.g. via back-propagation).

-

Classification: Problem when the output variable is a category (e.g. disease or no disease). Examples include the SVM (Support Vector Machine), the Random Forest Classification, and the Naive Bayers Classifer.

-

Regression: Problem when the output variable is a real continuous value (e.g. stock price prediction). Examples include the Linear Regression, the Random Forest Regression, and the SVR (Support Vector Regression).

(Source: Serafeim Loukas, 2020)

These models unlike supervised learning are intended to explore the data and draw inferences from a dataset (that isn't labeled) to describe hidden structures, and not make the right predictions about the output.

- Simply provide an unlabelled dataset and it will produce observational patterns like groupings.

-

Clustering: Problem where you want to unveil the inherent groupings in the data (e.g. grouping animals based on characteristics like the length of its tail or its species). Examples include the K-means clustering and the hierarchical clustering.

-

Association: Rule learning where you want to discover association rules (e.g. people that buy a brand Y handbag buy a brand X shoe). Examples include the Apriori algorithm and the Eclat algorithm.

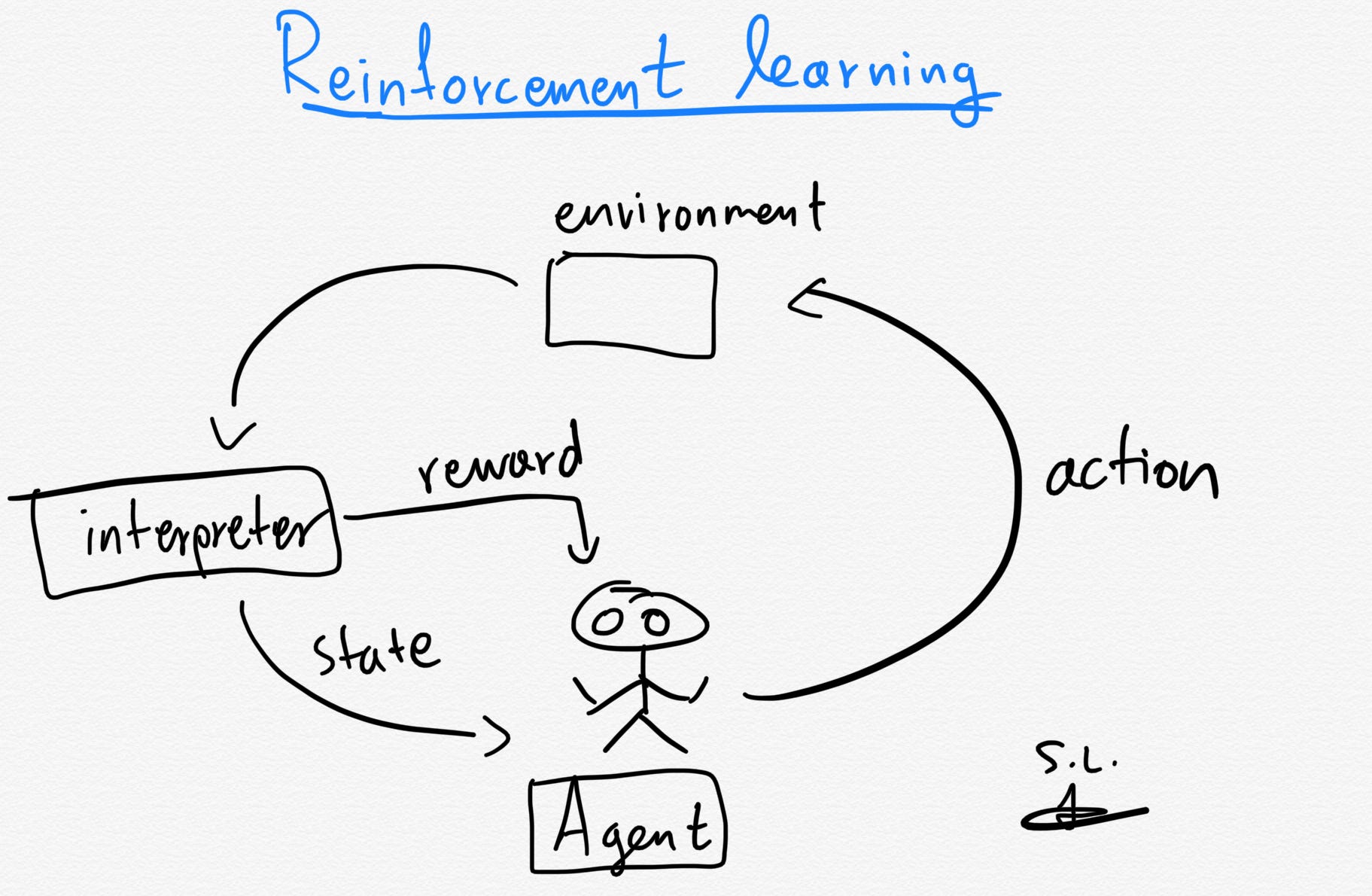

(Source: Serafeim Loukas, 2020)

These models' algorithms use estimated errors as rewards or penalties. If the error is big, the result is a high penalty and low reward. If the error is small, the result is a low penalty and high reward. Trial and error search and delayed reward are the most relevant characteristics of reinforcement learning. It allows the automatic determination of the ideal behavior within a specific context in order to maximize the desired performance. The reinforcement signal (known as the reward feedback) is required for the model to learn which action is best and to take next.

-

The agent (e.g. drone making a delivery, super Mario navigating a game) is the algorithm that makes the possible actions (e.g. running left or right, jumping high or low, crouching or standing still) it can take in its current environment (e.g. laws of physics, rules of society and its action and consequences, game rules).

-

The interpreter in the agent would take the agent's current state and action as input, and based on the state the agent finds itself in, a reward is the feedback returned that measures the success or failure of the agent's action (e.g. if Mario touches a coin, he wins a point). The agent then employs the policy (aka the strategy it uses) to determine the next action based on the current state. It maps the states to actions that promise the highest reward.

Examples include the Monte Carlo algorithm, and the Q-learning algorithm.

Models require only a small amount of labeled data with a large amount of unlabeled data during training. These algorithms falls between unsupervised learning and supervised learning, and are a special instance of Weak supervision (where noisy, limited, or imprecise sources are used to provide supervision signal for labeling large amounts of training data in a supervised learning setting).

Above is are examples of the common equations written for regression.

-

or

(for

) represents the weight (or coefficients). It represents the strength of the relationship, and increasing the input influences the output, which conversely, decreasing weights to near zero means this input does not change the output.

-

represents the dependent variable.

-

or

(for

) represents the independent variable that derives the value of the dependent variable.

-

or

represents the bias (or intersection). It represents how far off our predictions are from real values. High bias allows algorithms to learn fast but makes it less flexible (as it results in over-fitting and lower predictive performance), low bias creates less assumptions about the form of the target function (which results in under-fitting).

(Source: @Pluviophile, 2020 - Example of independent and dependent variable)

(Source: Mehmet Toprak, 2020 - Example of independent and dependent variables in dataset)

(Source: Kamaruzaman Jusoff, 2009 - Example of independent and dependent variables in real-world research dataset)

Regression analysis is the process of estimating the relationship between a dependent variable and an (or more than one) independent variable. It can be utilized to access the strength of the relationship between variables and for modeling future relationship(s) between them. In other words, it means utilizing a function (from a selected family of functions) to the sample data set using some error function (e.g. Cost function which is implemented by algorithms like Gradient descent). It is one of the most basic tools used in the area of ML for prediction, and you can fit a function on the available data in an attempt to predict the outcome for future data points.

(Source: Aqeel Anwar, 2021 - Left the graph our minds attempt to estimate the number from by 2018, Right the actual data)

For context (from the graph on the left), suppose we have a graph with data points from the year 2001 to 2013 for the total number of college graduates with master's degrees. Based on the underlying pattern, you can assume and estimate it around 2.0-2.1 million.

Now on the actual data (from the graph on the right), we see it actually goes to 2.0 million. In fact from the beginning, we could see that the number of graduates increases almost linearly with the year. Our mind is able to estimate that as this is a simpler problem of fitting a line to data, which process is known as Regression analysis. Food for thought: What happens in the context of machines if this is a pattern that our minds could detect?

Fitting a function based on the available data can serve 2 purposes: Interpolation and Extrapolation.

(Source: Aqeel Anwar, 2021 - Left shows the current data, Right shows the estimated line)

Assume we have access to sparse data we know about the number of graduates for every 4 years (on the left), we want to estimate the number of graduates for all the missing years in-between. To do that, we can fit a line to the limited data points, which is the interpolation process.

In short, it is by using the function (the line we drew) to predict the value of a dependent variable for an independent variable in the midst of our data (e.g. in-between data points).

(Source: Aqeel Anwar, 2021 - Left shows the current data, Right shows the estimated line)

Assume we have access to limited data from the year 2001 to 2012, and we want to predict the number of graduates from 2013 to 2018. Since the number of graduates increases almost linearly with each year, it would make sense to fit a line to the dataset to assume the prediction. Then based on the actual dataset, we can see that the prediction is very close.

In short, it is by using the function (the line we drew) to predict the value of a dependent variable for an independent variable that is outside the range of our data (e.g. beyond all possible data points).

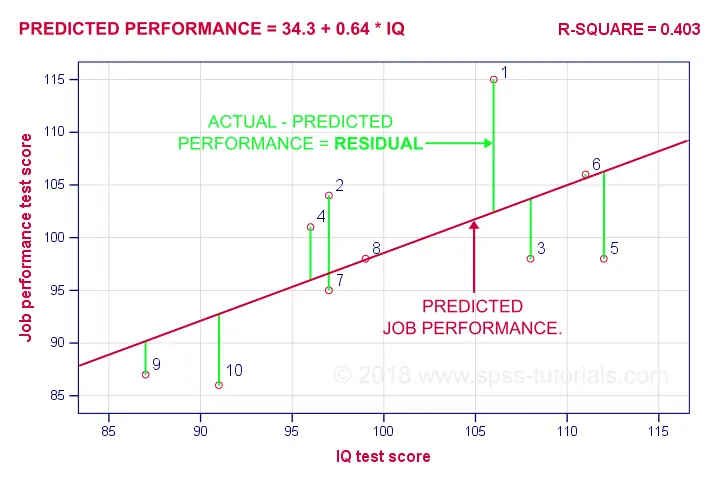

(Source: Ruben Geert van den Berg, n.d.)

It is a linear regression model used to estimate and establish a statistical relationship between the two quantitative variables (with one independent variable and dependent variable

), and find a linear function

(a non-vertical straight line) to predict as accurate as possible the dependent variable values as a function of the independent variable.

This is the model function where we expect to start drawing our line from,

-

is the estimated (or predicted) value against the actual value

.

-

is the intercept (aka the predicted value of

when

).

-

is the regression coefficient (e.g. how much we expect

to change as

increases).

-

is the independent variable at point

.

However, because not all data points would fit, this would be the representation of the actual function of ,

-

is the error of the estimate at point

(e.g. how much variation there is in our estimate of the regression coefficient). This is also known as the residual.

Re-arranging it to make the subject gives,

We want to find the minimum total residual error, and to achieve that we use the Least squares approach, where we want to find such that for all

data rows,

Let and

be estimated values of

and

. Finding these values would help us find

.

The mean for and

are

and

respectively.

This leads us to the following formulas to derive the coefficients where,

(Source: matan, 2021)

It is similar to the Simple linear regression model except with independent variables from

to

.

For the case of multiple variables, we are going to denote them in matrix form, where the representation of the actual function is,

Where,

Still using the Least-squares approach, we derive the following,

(Source: M. Bremer, 2012)

In order to derive the coefficients, let and

be estimated values of

and

.

This would give us,

Which by manipulation of the matrix systems equation, we get this,

(Source: ANIMOID10, 2018 - Green showing linear regression fit, Red showing polynomial regression fit)

Unlike the linear regression model, it tries to find a polynomial function instead of a linear function to predict the dependent variable.

Read here for more information.

Similarly to the Multiple linear regression model, we denote it as matrix form where,

Except that,

The approach to solving for the coefficients is the same as the Multiple linear regression model.

Finding the polynomial that works for the model with the data set is experimental (meaning it is a trial-and-error to which fits most data points more accurately). For more information to find the optimal polynomial

for the model, you may read here.

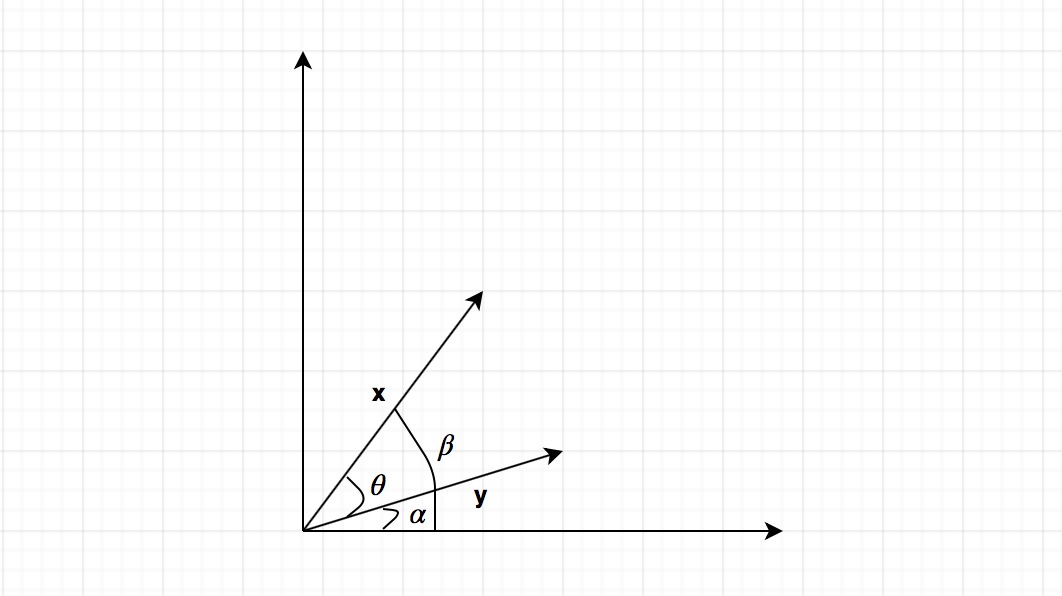

(Source: Patrick H. Winston, 2010)

(Source: Octoplus, 2015)

The Support Vector Machine (SVM) is a classification algorithm, hence we are going to describe the mechanism behind the SVM and then use its intuition to describe how it aids in SVR as it is an extension from SVM.

Before we continue, these are some terms to be aware of,

-

Kernel: Helps us find a hyperplane in the higher dimensional space (hence decreasing the computational cost), or in a non-linear setting by replacing the

term with a non-linear kernel function.

- Hyperplane: The separating line between two data classes in SVM. In regression, this will be the line that is used to predict the continuous output.

- Decision boundary: The demarcation line on both the positive or negative sides, which is used to classify a data point as positive or negative, and the same concept in SVM will be used in SVR. The vectors that create the decision boundaries are called the support vectors.

Note that this covers the gist and may not be well-covered. To gain a better understanding, read here and here.

(Source: Shu Zhan Fan, 2018)

The hyperplane is a set of data points that satisfies the plane equation such that,

Where is a vector with

dimensions such that,

For SVM, the upper and lower bounds of the hyperplane are the decision boundaries, where they are margins that serve as a separation for classification. The wider the margin, the lower the generalization error for classification.

The decision boundaries could be configured as such where it is a set of data points that satisfies the plane equation such that,

-

Upper boundary (

+1): -

Lower boundary (

-1):

Now we would need to classify groups into the relevant boundaries since from the hyperplane equation, we can start to sort the groups into the relevant sections such that,

With that, we would need to optimize the margins that allow us to start configuring the classification of the groups relevantly.

(Source: MLMath.io, 2019)

The approach of hard-margin is to assume no errors within the margins, meaning that has to be a perfect fitting but could result in overfitting.

So to prevent the data points from falling into the margin, it tries to optimize the following by making,

- For data points in

+1group, make.

- For data points in

-1group, make.

Which summaries into this optimization statement,

Where finding for the and

values solves the statement above. This can be solved using Lagrange multipliers.

Note that optimizing for also does the same thing.

(Source: Nishant Nikhil, 2016)

(Source: Pankaj Porwal, 2020) (Source: Sandipan Dey, 2018)

Unlike the hard margin, it modifies the former by extending the margin to prevent overfitting, but this process may result in underfitting,

Because the algorithm may not work for all data points, we need to account for the possibility of errors larger than (the margin width) using the slack variables

, which are points that are incorrectly classified from the margin (or within the margin band).

Slack variables that are,

- Within the margin are

- Outside the margin (opposite side) are

- On the hyperline is

.

- Are the support (

0) or classified correctly are

The hyperparameter can be configured such that as it increases, the tolerance for points outside

increases. If

, approaches

0, the tolerance depletes until it has less room for errors.

To know more about choosing the best value, read more here. Read more here on the dual problem.

(Source: Tom Sharp, 2020)

Unlike its classification counterpart, its ideal end-goal is to optimize the margins to be small as possible, and predicted data points that fall within the margin ( insensitive tube) are considered as errors that are negligible.

(Source: SHIVAM5152, 2021)

For non-linear scenarios (or even higher-dimensions), the solution is by applying the kernel trick (but this differs as every dot product is replaced by a non-linear kernel function).

We denote the kernel function as such , where

and

. Here are some common kernel functions for SVM,

-

Radial basis function (RBF):

for

(where sometimes it is parameterized as

)

-

Polynomial:

(for inhomogeneous

).

-

Hyperbolic:

for

and

.

(Source: kassambara, 2018)

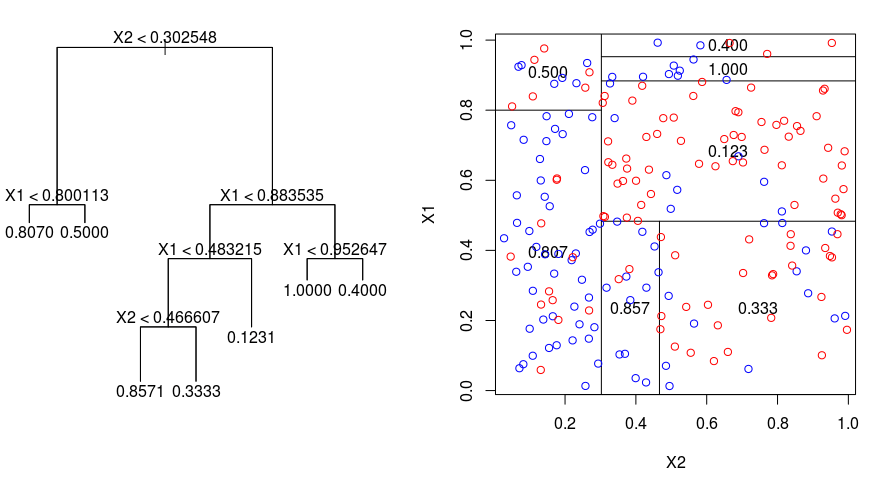

Decision trees can be used to solve regression and classification problems and predicts the class or value of the target variable by learning the decision rules inferred from the training data (and its features). For this reason, they are sometimes referred to as Classification And Regression Trees (CART). These models are an example of a more general area of machine learning known as adaptive basis function models, and unlike linear regression, the models are not linear in the parameters and are only able to compute a locally optimal Maximum Likelihood Estimate (MLE) for the parameters.

The prediction starts from the root of the tree, which attributes are compared with the record's attributes, and gets branched to the next node through comparison again until it reaches the terminal node, in which the predicted value is the terminal node's value. How the tree is formed is by repeatedly partitioning the data into multiple sub-spaces and that the outcomes in each final sub-space are as homogeneous as possible (which process is called recursive partitioning). For classification trees, the variable is a categorical variable, whereas, for regression trees, the variable is a continuous variable.

Some terminologies to know:

- Root Node: Represents the entire population or sample and this further gets divided into sub-trees.

- Splitting: Process of dividing a node into two or more sub-nodes.

- Decision Node: When a sub-node splits into further sub-nodes, then it is called the decision node.

- Leaf/Terminal Node: The final nodes that do not split further.

- Pruning: Process of removing sub-nodes of a decision node. It is the opposite of the splitting process.

- Branch/Sub-Tree: Subsection of the entire tree.

- Parent and Child Node: Parent node contains child nodes (sub-nodes of the parent node).

For classification trees, it seeks to minimize using the Gini Impurity metric, whereas the regression trees seek to minimize using the Residual Sum of Squares (RSS) or Mean Squared Error (MSE) metric (via Least squares method).

(Source: James Le, 2018)

The algorithm for each branch can be described as (uses Eucleadian distance to calculuate distance),

Where is the set containing all existing branch data points (and

is the count),

represents the count of data points in

partition (and

represents its mean) for example.

Each iteration would start from the first element, and split into partitions (left partition) and

(right partition). Once it reaches the second last element, the iteration stops. It attempts to find the minimum sum of all RSS at each

data points in the current branch. In summary, the algorithm works like this,

- From the current branch (or the root if it's the first process), iterate them until the minimum RSS (or MSE) is found.

- Start partitioning them into 2 branches based on the point with the least RSS (or MSE) (hence the

min()). - Repeat step 1 to continue partitioning the branches until it is irreducible, in which the terminal nodes are the value of the single sample (or the average of the samples) itself.

For a more graphical description, read here.

(Source: Afroz Chakure, 2019)

Random forest regression extension of the Decision Tree algorithm utilizing the bagging technique from the ensemble learning method. It operates by constructing a multitude of decision trees at training time (which trees are run in parallel) and outputs the predicted mean of all the individual trees.

The number of features that can be split at each node is limited to some percentage of the total, which ensures that the ensemble model does not rely too heavily on any individual feature and makes fair use of all potentially predictive features. Each tree draws a random sample from the original data when generating its splits, which adds a further element of randomness that prevents overfitting (unlike decision trees).

In summary, the algorithm works like this,

- Pick at random

data points from the training set.

- Build a decision tree associated with these

data points.

- Choose the number

of trees to build, and repeat step 1 and 2.

- For a new data point, make each one of the

-tree trees predict the value of

for the data point in question and assign the new data point to the average across all of the predicted

values.

(Source: Robi Polikar, 2008)

Ensemble learning is a technique that aims at improving the accuracy of results in models by combining multiple models instead of using a single model and can be a combination of the same model or different models.

(Source: Fernando López, 2020 - Left is "hard" mode where the final answer is considered a vote and Right is "soft" mode where the total probabilities count for the final vote in the max voting)

Here's an overview of the simple ensemble techniques (basic ideas apart from the advanced ensemble techniques such as stacking, bagging and boosting),

-

Max Voting: Each prediction from a model is considered as a vote. The final prediction would be taken from the majority vote. This technique is used generally for classifications.

-

Averaging: Takes an average of predictions from all models to make the final prediction. This technique is used generally for making predictions in regressions problems or calculating probabilities for classification problems.

-

Weighted Average: Extension of the averaging method except all models are assigned different weights (defining the importance of each model for prediction), and the total weighted average determines the final prediction.

(Source: Fernando López, 2020)

Stacking is a technique that uses predictions from multiple models (e.g. decision tree, KNN or SVM) to build a new model.

(Source: Aishwarya Singh, 2018)

For example,

- Train set is split into 10 parts.

- For each training set, the base model (e.g. a decision tree) is fitted on 9 parts, and predictions are made for the 10th part.

- The base model is then fitted on the whole training data set, and then predictions are made on the test set.

- Then steps 2 to 4 are repeated for another base mode (e.g. KNN), which results in another set of predictions for the training set and test set.

- Predictions from the training set are then used as features to build a new meta model, which would be used to make final predictions on the test prediction set.

(Source: Fernando López, 2020)

Blending follows the same approach as stacking except it uses only a validation set from the training set to make predictions. The validation set and the predictions are then used to build a model which is run on the test set.

(Source: Aishwarya Singh, 2018)

For example,

- The training set is split into training and validation sets.

- Model(s) are fitted on the training set, and the predictions are made on the validation set and the test set.

- The validation set and its predictions are used to build a new meta-model.

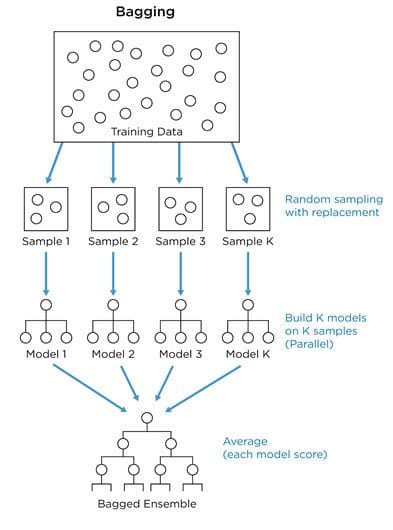

Bagging combines the results of multiple models (e.g. all decision trees) to get a generalized result. It simply uses the subsets of data sets (bags) to get a fair idea of the distribution (complete set).

(Source: Jay Budzik, 2019)

For example,

- Multiple subsets are created from the original dataset.

- For each of these subsets, a base model (weak model) is created, and these models run in parallel and are independent of each other.

- The combination of all the predictions of all the models determines the final prediction.

(Source: Jay Budzik, 2019)

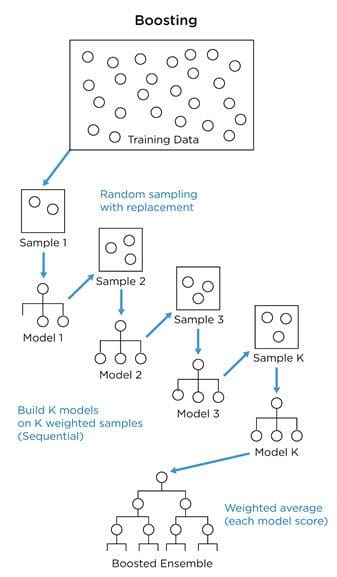

Boosting is a sequential process where each subsequent model attempts to correct the errors of the previous model, and the succeeding models are dependent on the previous model.

(Source: Aishwarya Singh, 2018)

For example,

- Initially, all data points are given equal weights. Then from a subset of data, a base model is created and predictions are made.

- Observations that are incorrectly predicted are given higher weights.

- Then, another model is created and predictions are made on the dataset.

- This process continues until the final model, which contains the weighted mean of all the models. The boosting algorithms combine a number of weak learners (previous models) to form a strong learner, as individual models will not perform well on the entire dataset, therefore it boosts the performance of the ensemble.

This is a statistical measure of how close the data is fitted to the regression line (also known as the coefficient of determination), such as representing the proportion of the variance for a dependent variable that's explained by an independent variable(s)

(for

dimension) in a regression model. However, it doesn't tell you whether your chosen model is good or bad, nor will it tell you whether the data and predictions are biased.

The formula is as follows, where the total value is by subtracting from 1 the Residual Sum of Squares (RSS) divided by the Total Sum of Squares (TSS),

Due to the problem of not being able to determine whether the coefficient estimates and predictions are biased, there is a need to assess the residual plots, which the adjusted

is able to address.

For example, the problems are:

- Adding more predictions to the model may cause the model to appear to have a better fit due to it having more terms, instead of decreasing the value.

- More predictions and higher-order polynomials may cause the model to contain random noise in the data, resulting in overfitting which may produce a misleading high

value and hence lessening the model's ability to make accurate predictions.

The formula is as follows (where is the total number of data points and

numbers of independent variables (e.g.

dimensions) (including the constant) in the model),

Although both and

gives an idea of how many data points fall within the line of the regression equation, the main difference between them is that the

assumes that every single independent variable explains the variation in the dependent variable, and tells you the percentage of variation explained only by the independent variables that actually affect the dependent variable.

It can penalize you for adding independent variables that do not fit in the model. This model may produce negative values and if the value dips below 0, it indicates that the model is a poor fit for the data set.