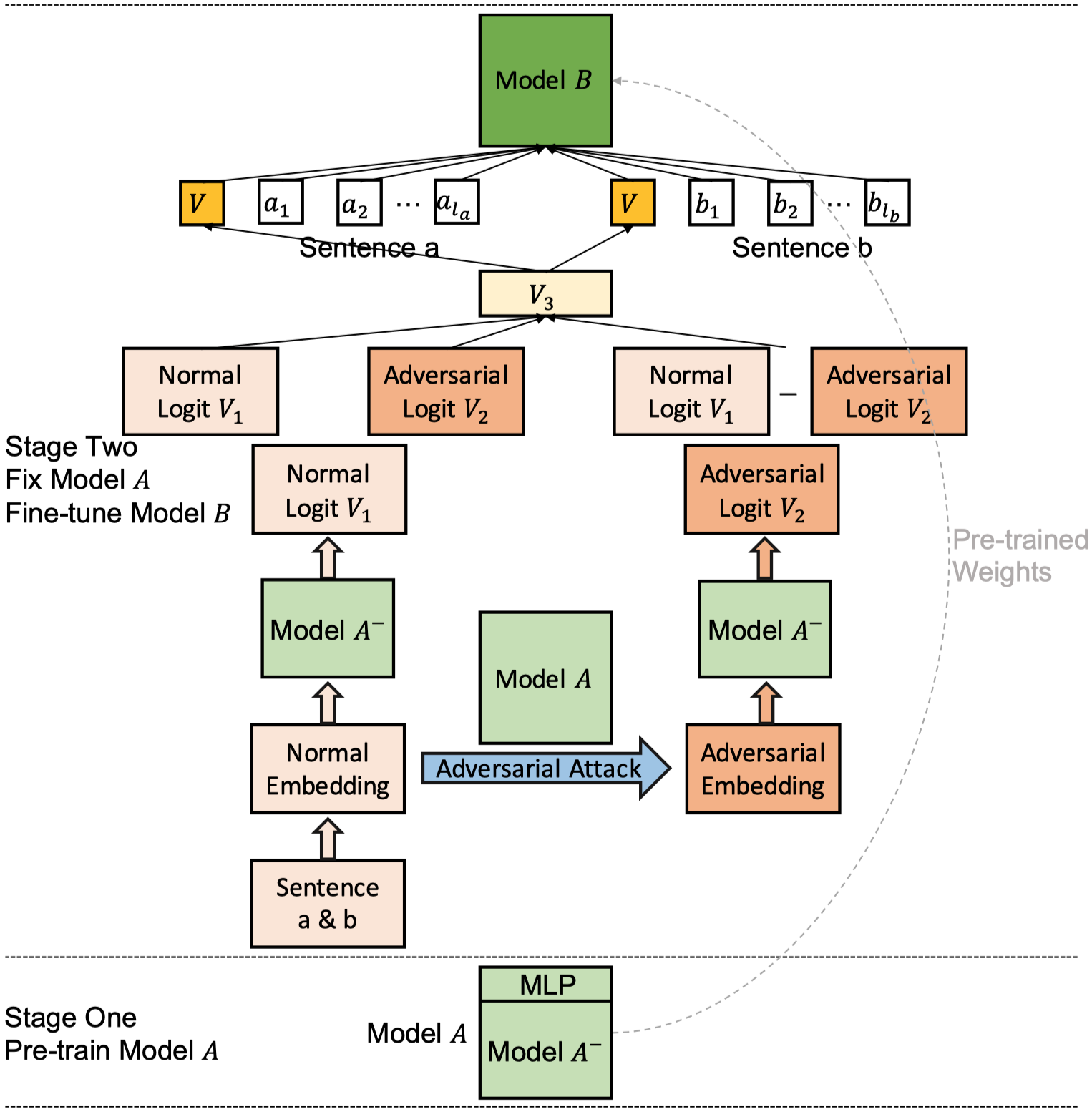

This repository contains the source code for the paper "Enhancing Neural Models with Vulnerability via Adversarial Attack", which has been accepted to the 28th International Conference on Computational Linguistics (COLING 2020). Please cite our paper if you use this program! 😍 The paper can be downloaded here.

```bibtex
@inproceedings{zhang2020enhancing,
  title={Enhancing Neural Models with Vulnerability via Adversarial Attack},
  author={Zhang, Rong and Zhou, Qifei and An, Bo and Li, Weiping and Mo, Tong and Wu, Bo},
  booktitle={Proceedings of the 28th International Conference on Computational Linguistics},
  pages={1133--1146},
  year={2020}
}
```

Requirements: Python 3. Install the dependencies with:

```bash
pip install -r requirements.txt
```

Data preprocessing configs are defined in `config/preprocessing`, and training configs are defined in `config/training`.

All data preprocessing scripts are in `scripts/preprocessing`.

To preprocess the data for the ESIM models, run:

```bash
cd scripts/preprocessing
python preprocess_quora.py
python preprocess_snli.py
python preprocess_mnli.py
```

To preprocess the data for the BERT models, run:

```bash
cd scripts/preprocessing
python preprocess_quora_bert.py
python preprocess_snli_bert.py
python preprocess_mnli_bert.py
```
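The preprocessing commands above can also be run in one go. The following is a minimal sketch that is not part of the repository: it assumes it is launched from the repository root and that the preprocessing scripts take no command-line arguments (as in the commands above). The helper names `preprocessing_commands` and `run_preprocessing` are hypothetical.

```python
import subprocess
import sys
from pathlib import Path

# Script names taken from the preprocessing commands in this README.
ESIM_SCRIPTS = ["preprocess_quora.py", "preprocess_snli.py", "preprocess_mnli.py"]
BERT_SCRIPTS = ["preprocess_quora_bert.py", "preprocess_snli_bert.py", "preprocess_mnli_bert.py"]


def preprocessing_commands(include_bert=True):
    """Return one command (an argument list) per preprocessing script."""
    scripts = ESIM_SCRIPTS + (BERT_SCRIPTS if include_bert else [])
    # sys.executable runs each script with the same Python 3 interpreter as this helper.
    return [[sys.executable, name] for name in scripts]


def run_preprocessing(repo_root=".", include_bert=True, dry_run=False):
    """Hypothetical helper: run every script inside scripts/preprocessing, stopping at the first failure."""
    workdir = Path(repo_root) / "scripts" / "preprocessing"
    commands = preprocessing_commands(include_bert)
    for cmd in commands:
        print("Running:", " ".join(cmd), "in", workdir)
        if not dry_run:
            # check=True raises CalledProcessError if a script exits with a non-zero status.
            subprocess.run(cmd, cwd=workdir, check=True)
    return commands
```

Calling `run_preprocessing(dry_run=True)` only prints the commands, which is a cheap way to check the order before running `run_preprocessing()` for real.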

Please set up bert-as-service first, then train, validate, and test the BERT models.
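For reference, bert-as-service is a server/client pair: the server is started with `bert-serving-start -model_dir <path to a pretrained BERT model>`, and Python code requests vectors through `bert_serving.client.BertClient`. The sketch below is an assumption about how sentence pairs could be sent to the service, not the repository's own code. It relies on bert-as-service's ` ||| ` separator for sentence pairs, and `to_pair_strings` and `encode_pairs` are hypothetical helpers. The client is passed in as any object with an `encode` method, so the helper can be exercised without a running server.

```python
from typing import List, Sequence, Tuple


def to_pair_strings(pairs: Sequence[Tuple[str, str]]) -> List[str]:
    """Join each (first, second) sentence pair with bert-as-service's ' ||| ' pair separator."""
    return [f"{first} ||| {second}" for first, second in pairs]


def encode_pairs(client, pairs: Sequence[Tuple[str, str]]):
    """Hypothetical helper: encode sentence pairs with a client exposing encode(list_of_str).

    In practice `client` would be bert_serving.client.BertClient() connected to a running server.
    """
    return client.encode(to_pair_strings(pairs))
```

With a server running, `encode_pairs(BertClient(), pairs)` should return one vector per pair under the default pooling strategy.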

To train the ESIM and BERT models, run:

```bash
python esim_quora.py
python esim_snli.py
python esim_mnli.py
python bert_quora.py
python bert_snli.py
python bert_mnli.py
```

To train the corresponding `top_*` models, run:

```bash
python top_esim_quora.py
python top_esim_snli.py
python top_esim_mnli.py
python top_bert_quora.py
python top_bert_snli.py
python top_bert_mnli.py
```
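The training scripts above follow a regular naming pattern, `[top_]<model>_<dataset>.py`. The sketch below is a hypothetical helper, not part of the repository, that builds the script name for a given combination and runs it. It assumes it is launched from the directory containing the training scripts and that the scripts take no arguments, as in the commands above.

```python
import subprocess
import sys

# Models and dataset names as they appear in the training script names.
MODELS = ("esim", "bert")
DATASETS = ("quora", "snli", "mnli")


def training_script(model: str, dataset: str, top: bool = False) -> str:
    """Return the training script name, e.g. top_bert_snli.py."""
    if model not in MODELS or dataset not in DATASETS:
        raise ValueError(f"unknown combination: {model}, {dataset}")
    prefix = "top_" if top else ""
    return f"{prefix}{model}_{dataset}.py"


def train(model: str, dataset: str, top: bool = False) -> None:
    """Run one training script with the current interpreter; raises CalledProcessError on failure."""
    subprocess.run([sys.executable, training_script(model, dataset, top)], check=True)
```

For example, `train("bert", "snli", top=True)` runs `top_bert_snli.py`. Validating the names up front gives a clear error for a typo instead of a "file not found" from the interpreter.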

Validation is built into every training script: running a training script reports validation results before training starts, so no separate validation script is needed.

For the QQP and SNLI datasets, testing is run in every training epoch.

For the MultiNLI dataset, run the following scripts to generate submission files, then submit the files to the MultiNLI Matched Open Evaluation and the MultiNLI Mismatched Open Evaluation.

```bash
python esim_mnli_test.py
python top_bert_mnli_test.py
```
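The sketch below illustrates what a submission file might look like. It assumes the format used by the Kaggle-hosted MultiNLI Open Evaluations, a CSV with the header `pairID,gold_label`, and that predictions are available as `(pairID, label)` tuples. `write_submission` is hypothetical, and the repository's test scripts may produce their files differently.

```python
import csv
from typing import Iterable, TextIO, Tuple

# The three MultiNLI labels.
LABELS = {"entailment", "neutral", "contradiction"}


def write_submission(predictions: Iterable[Tuple[str, str]], out: TextIO) -> int:
    """Write (pairID, label) predictions as CSV and return the number of rows written."""
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["pairID", "gold_label"])
    count = 0
    for pair_id, label in predictions:
        # Reject anything that is not a valid MultiNLI label before it reaches the file.
        if label not in LABELS:
            raise ValueError(f"unexpected label {label!r} for pair {pair_id}")
        writer.writerow([pair_id, label])
        count += 1
    return count
```

To write a real file, open it with `open(path, "w", newline="")` and pass the file object as `out`.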

Please let us know if you encounter any problems. You can contact us at rzhangpku@pku.edu.cn.