-

Notifications

You must be signed in to change notification settings - Fork 1.6k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Modularize the character atlas system, add a LRU-cache based dynamic character atlas #1327

Conversation

| ctx.globalAlpha = DIM_OPACITY; | ||

| } | ||

|

|

||

| // Draw the non-bold version of the same color if bold is not enabled |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't know what we should do here. The old implementation did this, but it's a bit tricker for me to do it here, since I don't have a reference to terminal; I'd need to add it to the atlas config.

It doesn't look like the _drawUncachedChar codepath supports this, so as-is, this rendering behavior is inconsistent. Either we should make sure it's consistent everywhere, or get rid of it.

IMO, it doesn't seem worth the effort to implement since this is a deprecated option.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I guess we'll mark moving to the dynamic cache as the default as v3 to allow breaking changes?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This isn't an issue with the dynamic atlas. It's an bug with the drawing behavior of the static atlas being inconsistent with _drawUncachedChar, and I think this is a bug on master.

So I don't know if I should fix the bug, or if so, how I should fix the bug. My proposal is that we should delete the logic and make StaticCharAtlas behave like _drawUncachedChar does.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks @bgw, I had a quick look and made some high level comments.

src/renderer/atlas/BaseCharAtlas.ts

Outdated

| * Perform any work needed to warm the cache before it can be used. May be called multiple times. | ||

| * Implement _doWarmUp instead if you only want to get called once. | ||

| */ | ||

| public warmUp(): Promise<any> { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

For IE11 can a native Promise polyfill just be loaded before xterm.js is?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

As I understood it, xterm already required a Promise polyfill to be loaded.

| return new Promise(r => r(canvas.transferToImageBitmap())); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't think it will get to that point on IE as it doesn't support createImageBitmap

| const newEntry: ICharAtlasCacheEntry = { | ||

| bitmap: generateCharAtlas(window, canvasFactory, newConfig), | ||

| atlas: new charAtlasImplementations[terminal.options.experimentalCharAtlas]( |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What do you think about pulling the dynamic cache into an addon for now and monkeypatching some factory method or something to inject while it's still being evaluated?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Seems reasonable. We'll still probably need/want to add the BaseCharAtlas stuff though, so we have a sane way to hook into the atlas/drawing system.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

👍

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I've spent a little bit of time trying to pull DynamicCharAtlas off into a separate addon, but it's a bit painful given the number of shared types and utility functions, I don't feel like it provides much benefit since we'd still need to leave a large amount of the changed code in place to decouple the renderer and atlas, and it would make it more difficult for us to make DynamicCharAtlas the primary atlas later on, since we'd need to re-integrate it.

How important is this to you?

| export default class DynamicCharAtlas extends BaseCharAtlas { | ||

| // An ordered map that we're using to keep track of where each glyph is in the atlas texture. | ||

| // It's ordered so that we can determine when to remove the old entries. | ||

| private _cacheMap: Map<GlyphCacheKey, IGlyphCacheValue> = new Map(); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Do you know if you are using any Map APIs that aren't supported in IE11's implementation? https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/Map

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have to use iterators to extract the first element from the map. It's theoretically possible to use .forEach for that, but it involves throwing and catching an exception to avoid iterating over the entire map, which is both awkward and messy.

I'm going to take a stab at replacing Map with a custom ordered map implementation that fits our use-case better. Map is eating up about 10% of each frame's execution time, and I think a large part of that is the awkward ways that we need to use it's API to manipulate insertion order.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What I was thinking is if we can maybe advise IE11 users to load a map polyfill themselves as it's well supported except for IE. I'd prefer to rely on newer stuff where ever possible since this is typically a developer tool so users will generally have up to date browsers.

| import BaseCharAtlas from './BaseCharAtlas'; | ||

| import { clearColor } from '../../shared/atlas/CharAtlasGenerator'; | ||

|

|

||

| // In practice we're probably never going to exhaust a texture this large. For debugging purposes, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I would have thought exhausting the texture is a real concern? Some things that should put more load on the cache:

- CJK characters - I noticed reading through that only ascii is cached, was this just done to simplify the step to the dynamic cache or is there a reason we shouldn't do this? Any strategy for supporting wide chars like CJK/emoji?

- Background colors (are these cached currently?)

- Truecolor (in the future Terminal does not render true color #484)

- High DPI screen (devicePixelRatio of 2 will x4 the pixels in the glyph

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I would have thought exhausting the texture is a real concern?

Exhausting the texture shouldn't generally be much of a concern. If we do exhaust it, we can evict stale entries.

You need at least enough space to store each unique glyph that's currently drawn on the screen once, plus whatever is likely to be rendered on the next frame. Most people are only going to be using a small set of characters (usually Latin characters) in a small set of colors at the same time. Even if you have truecolor support, your editor/tool is only going to use so many colors at once.

Most time spent drawing from the cache is probably going to be spent either re-drawing parts of the screen that haven't changed (TextRenderLayer is redrawing too much stuff right now, but that's out of scope here), or dealing with scrolling of some content.

So yes, there are contrived ways to exhaust the cache every frame (use a different bg/fg combination for every cell on screen), and yes, we'd start thrashing and pay a large performance penalty for that, but I think it's actually pretty hard to do in practice.

It might make sense to re-evaluate this at some point, and make it larger, configurable, or maybe adjust it based on how frequently we're evicting elements, but a magic number keeps this simpler for the initial version.

- CJK characters - I noticed reading through that only ascii is cached, was this just done to simplify the step to the dynamic cache or is there a reason we shouldn't do this? Any strategy for supporting wide chars like CJK/emoji?

Yes, I'm very intentionally only supporting single-width characters for now, and ascii is a convenient approximation of that. We can tackle double-width characters later, but that seemed like a lot more work, and is a lower priority for me personally. At least this shouldn't regress in performance on those characters.

There's a couple different ways to pack glyphs onto a texture. One is to pack the glyphs tightly using a bin-packing algorithm:

This can make writes to the atlas slower (bin-packing), and more importantly, there's no clear strategy for evicting/replacing glyphs when we run out of space later on. This might be a good approach for the static character atlas to take at some point.

The approach I took was to pack each glyph on a fixed grid. This makes cache eviction and replacement easy (just draw over and re-use that cell). However, this means that I need a defined cell width and height. I could make every cell double-width, but that wastes half of the texture space for most glyphs.

If we want to cache double-width characters, I think it makes sense to have two textures, one for single-width characters and another for double-width characters.

It's also possible to implement an LRU-like cache with two textures:

- When drawing a glyph, cache it to the first texture, until we run out of space. Once we're out of space,

- When drawing a glyph, see if it's in the first texture but not the second texture. If it's not in the second texture, copy it from the first texture to the second texture. Repeat this until we're out of space in the second texture.

- Swap the first and second texture. Clear the new "second" texture. Repeat from step 2.

Old content is always kept in the old texture, and it isn't re-used soon enough, it gets wiped with the rest of that old texture.

I personally like this algorithm (It's kind of like a copying garbage collector!), and it doesn't require overwriting glyphs in the texture, so this could support variable-width glyphs cleanly, but it also require a lot more copying of data between textures, so I opted not to follow this approach for v1. I might change my mind later (especially with the Map performance issue I've run into).

- Background colors (are these cached currently?)

Yes, we draw the background, and then the foreground so that sub-pixel AA works, before removing most of the background color.

If any attribute of IGlyphIdentifier changes, that results in a new cache entry.

- Truecolor (in the future Terminal does not render true color #484)

Our performance is relative to how many colors you're using on the screen at once, not how many you could theoretically draw on the screen at once. My goal is to make normal use-cases fast, and most people use true color for things like vim's syntax highlighting. I don't care about optimizing for playing video content with VLC through AALib.

- High DPI screen (devicePixelRatio of 2 will x4 the pixels in the glyph)

Yes, worth considering, but I had no performance issues using vim and tmux with a 512x512 atlas on my 1440p screen, so I figured I'd double that, and we'd be fine.

TL;DR: If you want to bump this to 2048x2048 or something for now, I'd be fine with that, but we need to pick some number.

| ctx.globalAlpha = DIM_OPACITY; | ||

| } | ||

|

|

||

| // Draw the non-bold version of the same color if bold is not enabled |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I guess we'll mark moving to the dynamic cache as the default as v3 to allow breaking changes?

| "lib": [ | ||

| "DOM", | ||

| "ES5", | ||

| "ScriptHost", |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What does ScriptHost and DOM do? We were referencing DOM stuff before, no?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

These are just the default libraries for the ES5 compilation target.

Note: If

--libis not specified a default list of librares are injected. The default libraries injected are:

► For--target ES5:DOM,ES5,ScriptHost

► For--target ES6:DOM,ES6,DOM.Iterable,ScriptHost— https://www.typescriptlang.org/docs/handbook/compiler-options.html

I might actually be able to omit these. I assumed I couldn't, but I should check.

|

I found a regression with the dynamic cache that I'd like to understand. The following script runs much slower with the dynamic cache turned on: for clbg in {40..47} {100..107} 49 ; do

#Foreground

for clfg in {30..37} {90..97} 39 ; do

#Formatting

for attr in 0 1 2 4 5 7 ; do

#Print the result

echo -en "\x1b[${attr};${clbg};${clfg}m ^[${attr};${clbg};${clfg}m \x1b[0m"

done

echo #Newline

done

doneDynamic cache cold: Dynamic cache warm: Static cache: I notice that the warm dynamic cache keeps drawing to the cache. Upon further inspection it appears to be happening because the cache is full: Some thoughts:

|

|

@Tyriar Yes, this is a pathological worst-case. I can look into heuristics to disable the dynamic atlas if it's thrashing, but I don't think this is a common use-case, so I was intentionally was ignoring it. I'd rather not special-case certain characters, because that's making an assumption about the font you're using (though maybe it's not an unreasonable assumption), and that only partially solves this problem. @chabou Good catch, should be easy to fix. |

| // We'll use the a ctx without alpha when possible, because that will preserve subpixel RGB | ||

| // anti-aliasing, and we'll fall back to a canvas with alpha when we have to. | ||

| private _tmpCtx: CanvasRenderingContext2D; | ||

| private _tmpCtxWithAlpha: CanvasRenderingContext2D; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Won't we only ever need one of the other unless options change? Elsewhere we only maintain a single canvas based on the allowTransparency option

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, if allowTransparency is false, we'll never need _tmpCtxWithAlpha. However, if allowTransparency is true, I'd like to hold onto two ctx objects so that we can draw characters on an opaque background with subpixel AA when possible. This is, however, inconsistent with the _drawUncachedChar codepath, which likely won't use subpixel AA anywhere.

Allocating both in the allowTransparency: false case is wasteful, but it's pretty small, and it kept the code simpler, so I didn't think it mattered.

If you'd like me to use a single canvas, I can do that pretty easily, as long as you're aware of the tradeoff this makes.

(I don't have much personal preference, since I don't use allowTransparency)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'd like to hold onto two ctx objects so that we can draw characters on an opaque background with subpixel AA when possible.

I actually liked that allowTransparency was also a switch to enable or disable SPAA completely. I think if we try to do SPAA for some cells and not others then the font might look inconsistent and weird?

|

Figured out the issue: hyper is passing in I assumed that because It's really just a fluke that (Sorry, I'd test this stuff in hyper myself, but it's difficult for me because of the issues with hyper/electron transparency on linux) |

|

Don't be sorry, you're making an amazing job, and I can help you on this, no problem. I'll try to figure out why soon, but if I use |

|

Ok I found the cause. I tested and it works great 🎉 |

|

Ah, it looks like I'm reworking how some of the color parsing works, so that we parse and store colors in 32-bit uints from arbitrary CSS color strings (instead of #RRBBGG format) which should make this stuff saner. Hopefully I'll be able to get that PR up tonight. Since @Tyriar wants |

This mostly worked before, but clearColor made the assumption that the colors were always in #RRBBGG format. See the discussion here: xtermjs#1327 (comment) This changes the internal representation of a color so that we hold onto into an object containing the original css string along with an RGBA value encoded as a 32-bit uint. clearColor can then use that RGBA representation. Additionally, this deduplicates some code between ColorManager and Terminal. Terminal was generating the 256 ansi colors the same way ColorManager was, so this just makes Terminal use the same constant.

Pros: - The code is simpler. - AA rendering is consistent between cached and uncached characters. - No long requires parsing color values to determine the alpha, which means that we no longer depend on #rrggbbaa color value support. Cons: - We're drawing without SPAA in some cases where we could be. Addresses this comment: xtermjs#1327 (comment)

This mostly worked before, but clearColor made the assumption that the colors were always in #RRBBGG format. See the discussion here: xtermjs#1327 (comment) This changes the internal representation of a color so that we hold onto into an object containing the original css string along with an RGBA value encoded as a 32-bit uint. clearColor can then use that RGBA representation. Additionally, this deduplicates some code between ColorManager and Terminal. Terminal was generating the 256 ansi colors the same way ColorManager was, so this just makes Terminal use the same constant.

This mostly worked before, but clearColor made the assumption that the colors were always in #RRBBGG format. See the discussion here: xtermjs#1327 (comment) This changes the internal representation of a color so that we hold onto into an object containing the original css string along with an RGBA value encoded as a 32-bit uint. clearColor can then use that RGBA representation. Additionally, this deduplicates some code between ColorManager and Terminal. Terminal was generating the 256 ansi colors the same way ColorManager was, so this just makes Terminal use the same constant.

This mostly worked before, but clearColor made the assumption that the colors were always in #RRBBGG format. See the discussion here: xtermjs#1327 (comment) This changes the internal representation of a color so that we hold onto into an object containing the original css string along with an RGBA value encoded as a 32-bit uint. clearColor can then use that RGBA representation. Additionally, this deduplicates some code between ColorManager and Terminal. Terminal was generating the 256 ansi colors the same way ColorManager was, so this just makes Terminal use the same constant.

This mostly worked before, but clearColor made the assumption that the colors were always in #RRBBGG format. See the discussion here: xtermjs#1327 (comment) This changes the internal representation of a color so that we hold onto into an object containing the original css string along with an RGBA value encoded as a 32-bit uint. clearColor can then use that RGBA representation. Additionally, this deduplicates some code between ColorManager and Terminal. Terminal was generating the 256 ansi colors the same way ColorManager was, so this just makes Terminal use the same constant.

This mostly worked before, but clearColor made the assumption that the colors were always in #RRBBGG format. See the discussion here: xtermjs#1327 (comment) This changes the internal representation of a color so that we hold onto into an object containing the original css string along with an RGBA value encoded as a 32-bit uint. clearColor can then use that RGBA representation. Additionally, this deduplicates some code between ColorManager and Terminal. Terminal was generating the 256 ansi colors the same way ColorManager was, so this just makes Terminal use the same constant.

This limits the amount of damage a pathological program can cause in thrashing the LRU cache. I ran the script described here: xtermjs#1327 (comment) Without this patch, the entire output would get drawn in one frame that took about 140 ms to draw. With this patch, it takes about 60 ms. It's still nowhere as good as the static or none atlas implementations, but it's not terrible, like it was. This should have minimal impact on real-world applications: it'll just take a little longer for the atlas to warm up. The implementation is a little hacky, since it involves throwing some code into TextRenderLayer that violates the separation of concerns, but we can clean this up later.

|

@Tyriar 83cf279 should mostly fix the performance regression you found. I'll rebase or merge this onto master once #1346 lands. I think the current set of comments have been addressed with the exception of:

|

|

FYI: we released a new Hyper canary release (v2.0.0-canary.15) with this PR merged (9e54b86) 🎉 |

|

@bgw Thanks! I'll check it out as soon as I can, we're in the test/release phase of VS Code so I have limited time for the next few days. |

There were two bugs: - We need to clear the background of _tmpCtx instead of just drawing on top if the background color is transparent. - We should set the background to fully transparent if it's partially transparent, to avoid drawing the transparent background twice.

Drawing to a ctx with `{alpha: true}` causes the canvas API (on chrome

at least) to switch to grayscale AA, instead of RGB subpixel AA.

This fixes it by drawing to a ctx that's using `{alpha: false}` when

posisble, and switching to a ctx that's using `{alpha: true}` only when

we have to.

Bug: When appending a node, we weren't setting the tail's `next` value correctly.

Pros: - The code is simpler. - AA rendering is consistent between cached and uncached characters. - No long requires parsing color values to determine the alpha, which means that we no longer depend on #rrggbbaa color value support. Cons: - We're drawing without SPAA in some cases where we could be. Addresses this comment: xtermjs#1327 (comment)

This limits the amount of damage a pathological program can cause in thrashing the LRU cache. I ran the script described here: xtermjs#1327 (comment) Without this patch, the entire output would get drawn in one frame that took about 140 ms to draw. With this patch, it takes about 60 ms. It's still nowhere as good as the static or none atlas implementations, but it's not terrible, like it was. This should have minimal impact on real-world applications: it'll just take a little longer for the atlas to warm up. The implementation is a little hacky, since it involves throwing some code into TextRenderLayer that violates the separation of concerns, but we can clean this up later.

- We need to make a clone of this array, because ColorManager mutates it. - We only want to compare the first 16 colors instead of all 256, since only the first 16 will ever change. In DynamicCharAtlas, we can pull the additional colors from DEFAULT_ANSI_COLORS. I tested this out by loading the demo and changing the theme a few times.

It turns out that `glyph.char.charCodeAt(0)` isn't equivalent to the character's actual code because of utf-16 garbage, so this adds `glyph.code` to the `glyph` object so that we can use the `code` value, and don't need to regenerate it.

|

Is it possible that this PR disables |

|

@chabou it looks like fontWeight is still being used and wasn't really touched: https://github.com/xtermjs/xterm.js/pull/1327/files#diff-0f9aebb75af9d3b431640c5def0cfe48L124 |

|

It looks like this did indeed break |

|

👍 Good catch. Should be easy-ish to fix. |

|

On a related note, I figured out why Mac users keep complaining that Hyper's text is heavier (or "not sharp") by default than what they're used to. iTerm2 has an option (enabled by default) for making fonts use "thin strokes" on retina screens. They do this by calling an undocumented |

The atlas wasn't using these values when setting the font.

|

@chabou I've fixed the font weight issue, and merged the latest version of master in. @Tyriar I'm going to wait until #1391 merges, and then update this branch so that I'm the one to deal with the messiness of that merge, and so that I can make sure the new option works with the dynamic atlas. I think I've addressed all of your comments; do you want to review anything else? |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@bgw font weight was the only thing, merge away when the conflicts are resolved 🎉

I did a bunch of testing of various ansi escape codes (including italics) with all three char atlas implementations (none, static, dynamic), with and without `drawBoldTextInBrightColors`, and with and without `enableBold` to test all of the edge cases that I could.

|

This is fantastic! Thank you so much for your hard work 🙏 |

|

Appreciation msg here! Sometimes the fundamentals need some tweakin' |

I've split this into a series of small commits, which should hopefully make reviewing this easier.

This introduces a character atlas API (defined by BaseCharAtlas), and three implementations of that API (static, dynamic, and none). The static character atlas is default for now, but you can switch between implementations using the

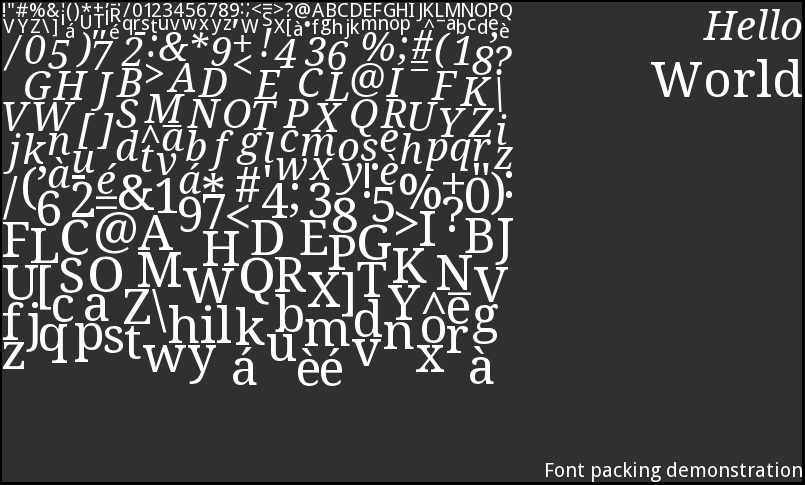

experimentalCharAtlasconfiguration option, or with a dropdown in the demo webpage.The new DynamicCharAtlas implementation uses a fixed-size texture and an ordered map to maintain an LRU cache of single-width characters in different colors and with different backgrounds. After using it for a while, you end up with an atlas that looks something like this:

When scrolling through vim with the dynamic character atlas, we can see that almost no time is spent calling

fillText, and that most of the time is spent in calls todrawImageandfillRect:Whereas the opposite is generally true for the static char atlas:

There's more performance overhead involved in creating and managing the LRU cache, but in practice it should cover more glyphs, so performance will depend on what kind of text you're rendering.

I've got more optimizations I'm currently implementing and/or playing with that reduce the cost of drawing and the overhead of maintaining the cache, but I think the current implementation is usable and competitive with the static atlas for most use-cases.

This does add a dependency on

Map(and an implementation ofSymbol.iteratorto traverse it), but that's only if you enable the dynamic atlas. If you don't enable the dynamic atlas, you shouldn't needMap(In theory; I haven't tested this claim).I'm considering replacing

Mapwith a custom ordered map implementation, since the act of deleting and setting entries on the Map with every cache read (to mark it as used and move it to the end of the Map) ends up being rather expensive, and appears to slow down over time (on V8 at least?). I think I can probably devise a data structure that works better for our access patterns.Fixes #1294