forked from sigp/lighthouse


Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

Reduce bandwidth over the VC<>BN API using dependent roots (sigp#4170)

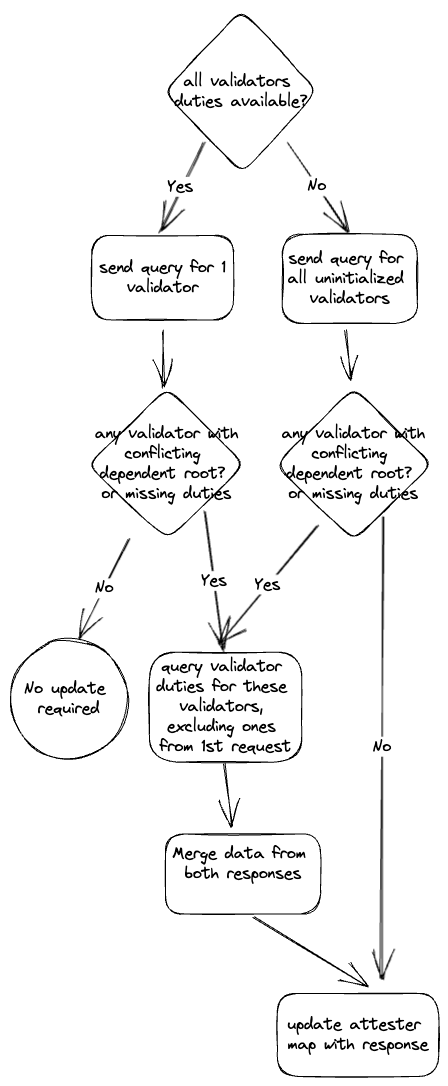

## Issue Addressed

sigp#4157

## Proposed Changes

See description in sigp#4157. In diagram form:

Co-authored-by: Jimmy Chen <jimmy@sigmaprime.io>


1 parent 7f3a57a, commit ca2a164

Showing 1 changed file with 152 additions and 79 deletions.
