
Reduce bandwidth over the VC<>BN API using dependant roots #4157

Comments


Something that Teku does, which we could also consider, is subscribing to the SSE event stream and using that to infer a change of dependent root. This would be a more major architectural change, though, and may come with other complications (handling stream reconnects, etc.). Perhaps the reduced-polling approach is more pragmatic.
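Here is a more concrete view of the SSE alternative. The standard Beacon API `head` event includes `previous_duty_dependent_root` and `current_duty_dependent_root` fields, so a VC listening to the stream could re-fetch duties only when one of them changes. The Rust sketch below assumes events have already been received and parsed. `HeadEvent` and `DependentRootWatcher` are hypothetical names, not Lighthouse or Teku types. Connection handling is reduced to a `reset` call on reconnect.

```rust
/// The two dependent-root fields of a Beacon API `head` SSE event.
/// Other fields of the event (slot, block, state, ...) are omitted in this sketch.
#[derive(Clone, Debug, PartialEq, Eq)]
pub struct HeadEvent {
    pub previous_duty_dependent_root: String,
    pub current_duty_dependent_root: String,
}

/// Hypothetical helper that remembers the last dependent roots seen on the
/// event stream and reports when they change.
#[derive(Default)]
pub struct DependentRootWatcher {
    last_seen: Option<HeadEvent>,
}

impl DependentRootWatcher {
    /// Returns `true` if duties should be re-fetched: either no event has been
    /// seen yet (e.g. just after boot or a reconnect) or one of the dependent
    /// roots differs from the previous event.
    pub fn on_head(&mut self, event: &HeadEvent) -> bool {
        let changed = self.last_seen.as_ref() != Some(event);
        self.last_seen = Some(event.clone());
        changed
    }

    /// Forget the last seen roots, e.g. when the SSE stream disconnects, since
    /// events may have been missed; the next event then always triggers a refresh.
    pub fn reset(&mut self) {
        self.last_seen = None;
    }
}
```

The reset-on-reconnect behaviour is one way to address the "handling stream reconnects" complication mentioned above: missed events are treated as a possible root change.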


@paulhauner thanks a lot for the nice write-up! I'd like to look into this.


PR created here: #4170

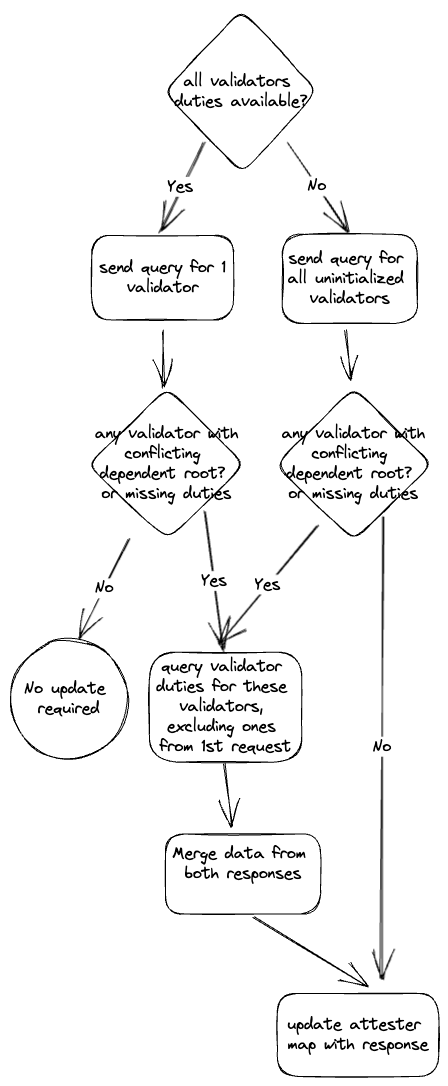

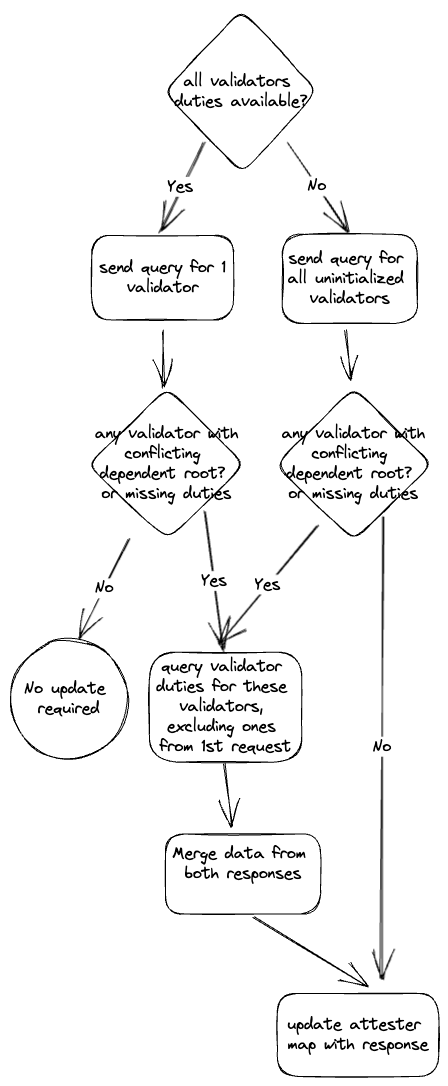

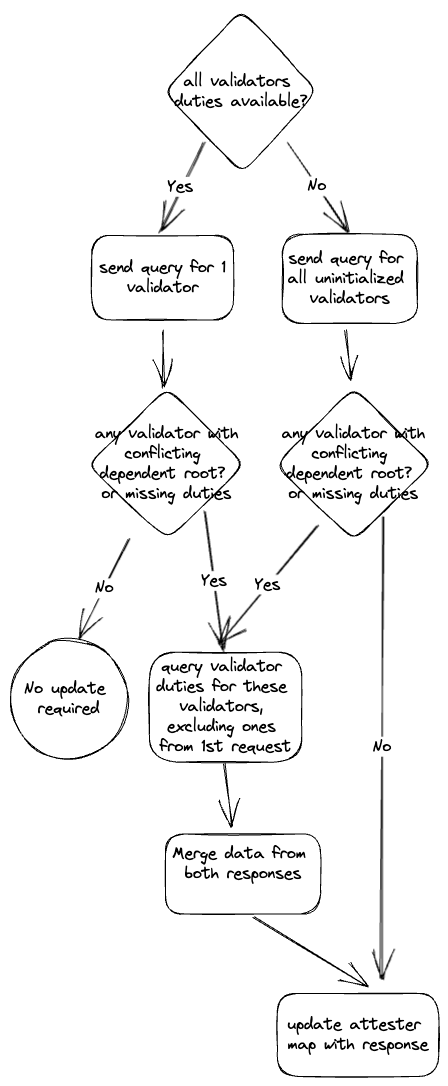

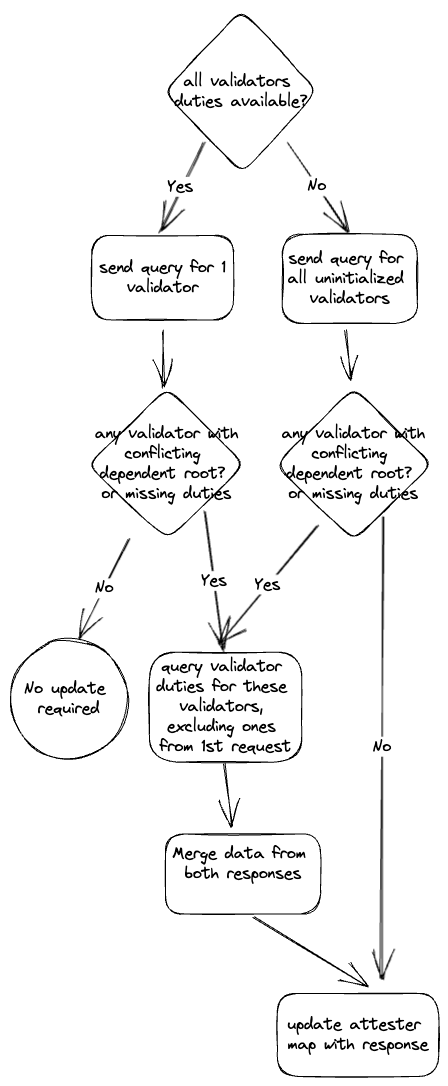

## Issue Addressed

#4157

## Proposed Changes

See description in #4157. In diagram form:

Co-authored-by: Jimmy Chen <jimmy@sigmaprime.io>


Completed in #4170.


Description

Presently the VC will poll for attestation duties each slot. Our polling achieves:

Looking at the `/eth/v1/validator/duties/attester/{epoch}` endpoint, we see that its structure is:

Request Body: An array of the validator indices for which to obtain the duties.

```json
[ "1" ]
```

Note: we also include the `epoch` in the URL.

Response Body:

```json
{
  "dependent_root": "0xcf8e0d4e9587369b2301d0790347320302cc0943d5a1884560367e8208d920f2",
  "execution_optimistic": false,
  "data": [
    {
      "pubkey": "0x93247f2209abcacf57b75a51dafae777f9dd38bc7053d1af526f220a7489a6d3a2753e5f3e8b1cfe39b56f43611df74a",
      "validator_index": "1",
      "committee_index": "1",
      "committee_length": "1",
      "committees_at_slot": "1",
      "validator_committee_index": "1",
      "slot": "1"
    }
  ]
}
```

In our current implementation, the request contains all validators managed by the VC. I claim that, in the best case, we could send a request for only one validator and still know whether or not all the other validators need updating.

I claim this because a shuffling is uniquely identified by `(epoch, dependent_root)` (see "Background Info" below if this isn't clear to you). We already use this assumption in the VC:

`lighthouse/validator_client/src/duties_service.rs`, lines 740 to 749 in a53830f

With the referenced lines, we filter out any duties that already have the same `(epoch, dependent_root)` signature. So, our current flow goes like:

1. Request `duties/attester` for all validators.
2. Filter out any duties with an already-known `(epoch, dependent_root)`.
3. Update `duties_service.attesters` with any new duties (reference).

I propose that we should instead:

1. Request `duties/attester` for `INITIAL_DUTIES_QUERY_SIZE = 1` validators.
2. Identify the validators whose known duties don't match the returned `(epoch, dependent_root)` values.
3. If there are any, make a second `duties/attester` request for those validators.
4. Update `duties_service.attesters` with any new duties which resulted from the first or second request.

In the case where we don't expect the duties to change (i.e., it's not the first request after VC boot, it's not the first request of an epoch, and there wasn't a re-org), this should reduce the bandwidth by a factor of the number of validators in that VC (e.g., if the VC has 100 validators, then the request/response should be ~100x smaller).
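A minimal Rust sketch of the proposed flow follows. It rests on several assumptions. The BN request is abstracted as a `fetch` closure, and duties are tracked in a single `HashMap` tagged with their `(epoch, dependent_root)`. `AttesterDuty`, `DutiesResponse`, `KnownDuties` and `poll_duties` are hypothetical simplifications, not Lighthouse's actual `duties_service` types. The sketch also applies the `max(INITIAL_DUTIES_QUERY_SIZE, num_uninitialized_validators)` sizing described under "Additional Details". It fills the first batch with uninitialized validators before any others; that ordering is a choice made for this sketch.

```rust
use std::collections::HashMap;

pub type Epoch = u64;
pub type ValidatorIndex = u64;
pub type Root = [u8; 32];

/// Simplified stand-in for one entry of the `duties/attester` response `data` array.
#[derive(Clone, Debug, PartialEq, Eq)]
pub struct AttesterDuty {
    pub validator_index: ValidatorIndex,
    pub slot: u64,
}

/// Simplified stand-in for the response: one `dependent_root` covers every duty in `data`.
pub struct DutiesResponse {
    pub dependent_root: Root,
    pub data: Vec<AttesterDuty>,
}

/// Minimum size of the first query, as proposed in the issue.
pub const INITIAL_DUTIES_QUERY_SIZE: usize = 1;

/// Known duties, each tagged with the `(epoch, dependent_root)` it was fetched under.
pub type KnownDuties = HashMap<ValidatorIndex, (Epoch, Root, AttesterDuty)>;

fn store(known: &mut KnownDuties, epoch: Epoch, response: DutiesResponse) {
    let root = response.dependent_root;
    for duty in response.data {
        known.insert(duty.validator_index, (epoch, root, duty));
    }
}

/// Two-phase poll for one epoch. `fetch` stands in for a `duties/attester`
/// request to the BN. Returns how many validator indices were sent in total.
pub fn poll_duties<F>(
    epoch: Epoch,
    validators: &[ValidatorIndex],
    known: &mut KnownDuties,
    mut fetch: F,
) -> usize
where
    F: FnMut(Epoch, &[ValidatorIndex]) -> DutiesResponse,
{
    // Validators with no duties known for this epoch go into the first batch.
    let (mut first, mut rest): (Vec<ValidatorIndex>, Vec<ValidatorIndex>) = validators
        .iter()
        .copied()
        .partition(|v| !matches!(known.get(v), Some((e, _, _)) if *e == epoch));

    // First query size: max(INITIAL_DUTIES_QUERY_SIZE, num_uninitialized_validators),
    // capped at the number of validators this VC manages.
    let first_size = INITIAL_DUTIES_QUERY_SIZE.max(first.len()).min(validators.len());
    // Top up the first batch with already-initialized validators if needed.
    let remaining = rest.split_off(first_size - first.len());
    first.extend(rest);
    if first.is_empty() {
        return 0;
    }

    let response = fetch(epoch, &first[..]);
    let root = response.dependent_root;
    store(known, epoch, response);

    // Any other validator whose duties were not fetched under this
    // `(epoch, dependent_root)` must be re-queried.
    let stale: Vec<ValidatorIndex> = remaining
        .into_iter()
        .filter(|v| !matches!(known.get(v), Some((e, r, _)) if *e == epoch && *r == root))
        .collect();
    if !stale.is_empty() {
        // Caveat: a re-org between the two requests could return yet another
        // root here; this sketch does not handle that case.
        store(known, epoch, fetch(epoch, &stale[..]));
    }
    first.len() + stale.len()
}
```

With 100 validators, the first poll of an epoch sends all 100 indices. Later polls that see an unchanged `dependent_root` send a single index. A new root costs 1 + 99 indices across two requests.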

Additional Details

There's some extra detail regarding the first request of `INITIAL_DUTIES_QUERY_SIZE`. I propose that we should actually make this query of size `max(INITIAL_DUTIES_QUERY_SIZE, num_uninitialized_validators)`, where `num_uninitialized_validators` is the count of validators whose duties we don't already know for that epoch. This will be all validators when booting for the first time or when querying for the "next epoch". It avoids a second request when we already know we need the duties for all validators.

Background Info

Whilst the term "dependent root" doesn't appear in the specification, it exists as a concept in `get_beacon_committee`. We use `dependant_root` to refer to the block root at the same slot that `get_seed` uses to load the `randao_mix` used as the input to `compute_committee`. The argument is that any chain which has `dependant_root` in its history will always return the same result for `get_beacon_committee` given the same `epoch`.

Here's a scrappy diagram that might help:

The term "dependent root" was introduced to the API some time after we'd implemented the concept in Lighthouse for keying our internal shuffling caches. Internally, we sometimes refer to it as the "shuffling decision" root (example).
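The Beacon API documents the attester-duties `dependent_root` as `get_block_root_at_slot(state, compute_start_slot_at_epoch(epoch - 1) - 1)`, falling back to the genesis block root on underflow. That is the last slot of epoch `epoch - 2`. With `MIN_SEED_LOOKAHEAD = 1`, this lines up with the RANDAO mix that `get_seed` reads, as described above. The small Rust sketch below only computes that slot. `attester_dependent_slot` is a hypothetical helper name, and `slots_per_epoch` is passed in rather than read from a real chain spec.

```rust
/// Slot whose block root serves as the attester-duty dependent root for `epoch`:
/// `compute_start_slot_at_epoch(epoch - 1) - 1`.
/// Returns `None` when that computation underflows, in which case the
/// genesis block root is used as the dependent root instead.
pub fn attester_dependent_slot(epoch: u64, slots_per_epoch: u64) -> Option<u64> {
    let previous_epoch = epoch.checked_sub(1)?;
    let start_slot = previous_epoch.checked_mul(slots_per_epoch)?;
    start_slot.checked_sub(1)
}
```

For example, with 32 slots per epoch, duties for epoch 2 depend on the block root at slot 31, while epochs 0 and 1 depend on the genesis block root.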
