
[Merged by Bors] - Reduce bandwidth over the VC<>BN API using dependant roots #4170


I've pushed a first implementation; it seems to work fine on a local testnet of 4 Lighthouse nodes. I'm going to do a bit more testing on this and verify the bandwidth improvements.

Some initial test numbers below from running a local testnet; it seems to be working fine and the chain is finalizing.

Test Setup

Short update: I've been running some tests on this branch, and the VC seems to be querying attester duties correctly (on a local testnet) when the

Test Setup

It is very difficult to verify this on the Goerli testnet because re-orgs that change the

However, it is possible to verify this on a local testnet using @michaelsproul's suggestion:

This test branch contains code that achieves the above. This would allow verifying the attestations work as expected when

Additional Info

Assume the current epoch is

In addition to the above re-org scenario, I've also observed two other scenarios that changes

More details on this HackMD page.


I'm impressed at how small this change ended up, nice work!

I have a few suggestions but nothing major!

    // request for extra data unless necessary in order to save on network bandwidth.
    let uninitialized_validators =
        get_uninitialized_validators(duties_service, &epoch, local_pubkeys);
    let indices_to_request = if uninitialized_validators.len() >= INITIAL_DUTIES_QUERY_SIZE {


In the (hypothetical) case where INITIAL_DUTIES_QUERY_SIZE == 2 and uninitialized_validators.len() == 1, I think we could fail to initialize that one uninitialized validator in the first call.

To avoid this, I think we'd want something like:

    if !uninitialized_validators.is_empty() {
        uninitialized_validators
    } else {
        &local_indices[0..min(INITIAL_DUTIES_QUERY_SIZE, local_indices.len())]
    }

My suggestion removes the guarantee that we'll always request INITIAL_DUTIES_QUERY_SIZE validators, but it does ensure that we always query for all uninitialized validators. I think it's probably OK to sometimes query for fewer than INITIAL_DUTIES_QUERY_SIZE, especially since we currently have it set to 1.
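The selection rule in the suggestion above can be sketched as a small standalone function. This is only an illustration, not the code that was merged: the function name `indices_to_request`, the use of plain `u64` validator indices, and the constant value are assumptions made to keep the example self-contained.

```rust
/// Hypothetical stand-in for the constant discussed in the review
/// (the thread mentions it is currently set to 1).
const INITIAL_DUTIES_QUERY_SIZE: usize = 1;

/// Picks which validator indices to send in the initial duties query.
///
/// If any validators are uninitialized, all of them are requested, even when
/// there are fewer than `INITIAL_DUTIES_QUERY_SIZE`. Otherwise a prefix of at
/// most `INITIAL_DUTIES_QUERY_SIZE` local indices is requested.
fn indices_to_request<'a>(uninitialized: &'a [u64], local_indices: &'a [u64]) -> &'a [u64] {
    if !uninitialized.is_empty() {
        uninitialized
    } else {
        // Clamp the end of the range so a short `local_indices` never panics.
        let end = INITIAL_DUTIES_QUERY_SIZE.min(local_indices.len());
        &local_indices[..end]
    }
}
```

With this shape, a single uninitialized validator is always included in the first call regardless of the constant, which is the case the comment is concerned about.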

    );

    if duties_service.per_validator_metrics() {
        update_per_validator_duty_metrics::<E>(current_slot, &new_duties);


Previously we were running this function for all duties and now we're only running it for new duties. I think that might pose a problem where we don't update the metrics to show the next epoch duties, once that next epoch arrives (assuming they haven't changed since the previous epoch).

I think we could solve this by iterating over duties_service.attesters instead.


Thanks, didn't think about this scenario! Will push a fix.

… suggestions Co-authored-by: Paul Hauner <paul@paulhauner.com>

…use into reduce-vc-bn-bandwith


Nice! We're almost there! Just a minor comment request and also something I missed earlier about updating the attestation slot metrics 🙏

    if validators_to_update.is_empty() {
        // No validators have conflicting (epoch, dependent_root) values or missing duties for the epoch.
        return Ok(());


I think we need to make sure we run update_per_validator_duty_metrics so that we start reporting the next-epoch duties as the current_slot progresses past the current-epoch slot.

Since we've changed update_per_validator_duty_metrics, perhaps it makes sense to hoist it up into poll_beacon_attesters (perhaps after each call to poll_beacon_attesters_for_epoch in here)? That way we can exit this whenever we like and still be confident that update_per_validator_duty_metrics is being called.
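A rough sketch of the shape being proposed here, under heavy simplification: the per-epoch poll may return early when no validator has a missing or conflicting `(epoch, dependent_root)` entry, while the metrics update is called from the outer poll so it runs either way. Everything in the block (`DutiesCache`, `poll`, `poll_for_epoch`, the `u64` public keys, passing the latest roots in as arguments) is a hypothetical stand-in and not Lighthouse's actual types or signatures.

```rust
use std::collections::HashMap;

// Hypothetical simplified types; the real client uses richer ones.
type Epoch = u64;
type Root = [u8; 32];
type Pubkey = u64;

#[derive(Default)]
struct DutiesCache {
    /// Dependent root last seen for each validator and epoch.
    attesters: HashMap<Pubkey, HashMap<Epoch, Root>>,
    /// Counts metrics updates, for demonstration only.
    metrics_updates: usize,
}

impl DutiesCache {
    /// Validators whose cached root for `epoch` is missing or differs from `latest_root`.
    fn validators_to_update(&self, epoch: Epoch, latest_root: Root, local: &[Pubkey]) -> Vec<Pubkey> {
        local
            .iter()
            .copied()
            .filter(|pk| self.attesters.get(pk).and_then(|m| m.get(&epoch)) != Some(&latest_root))
            .collect()
    }

    /// Per-epoch poll. Returns how many validators were refreshed; exits early with 0
    /// when nothing is stale, which is where a full duties request would be skipped.
    fn poll_for_epoch(&mut self, epoch: Epoch, latest_root: Root, local: &[Pubkey]) -> usize {
        let stale = self.validators_to_update(epoch, latest_root, local);
        if stale.is_empty() {
            return 0;
        }
        for pk in &stale {
            self.attesters.entry(*pk).or_default().insert(epoch, latest_root);
        }
        stale.len()
    }

    /// Outer poll for the current and next epoch. The metrics update is hoisted here,
    /// after each per-epoch call, so an early return above cannot skip it.
    fn poll(&mut self, current_epoch: Epoch, roots: (Root, Root), local: &[Pubkey]) -> usize {
        let mut refreshed = self.poll_for_epoch(current_epoch, roots.0, local);
        self.update_metrics();
        refreshed += self.poll_for_epoch(current_epoch + 1, roots.1, local);
        self.update_metrics();
        refreshed
    }

    fn update_metrics(&mut self) {
        self.metrics_updates += 1;
    }
}
```

In this sketch a second `poll` with unchanged roots refreshes nothing but still bumps the metrics counter, which mirrors the concern that next-epoch duties should keep being reported as `current_slot` advances.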


Nice catch 🙏, I've moved this to where you suggested!


Perfect, approved!

bors r+

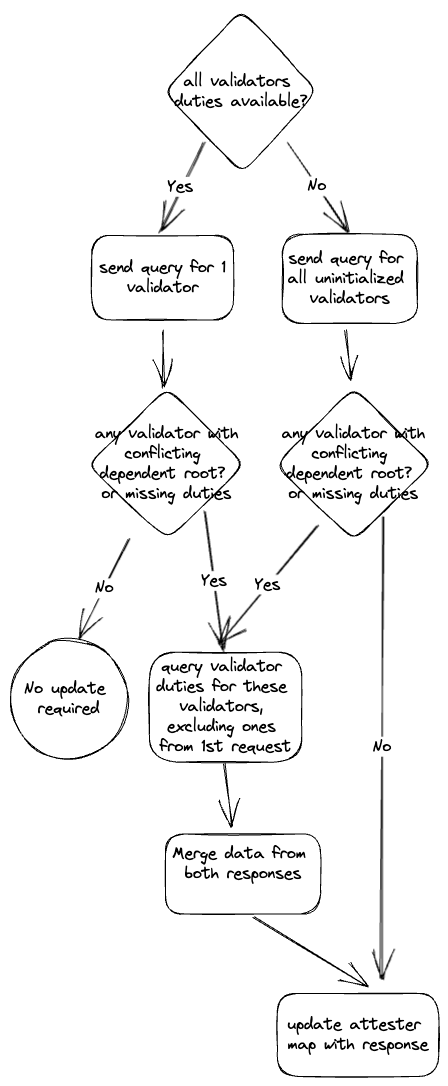

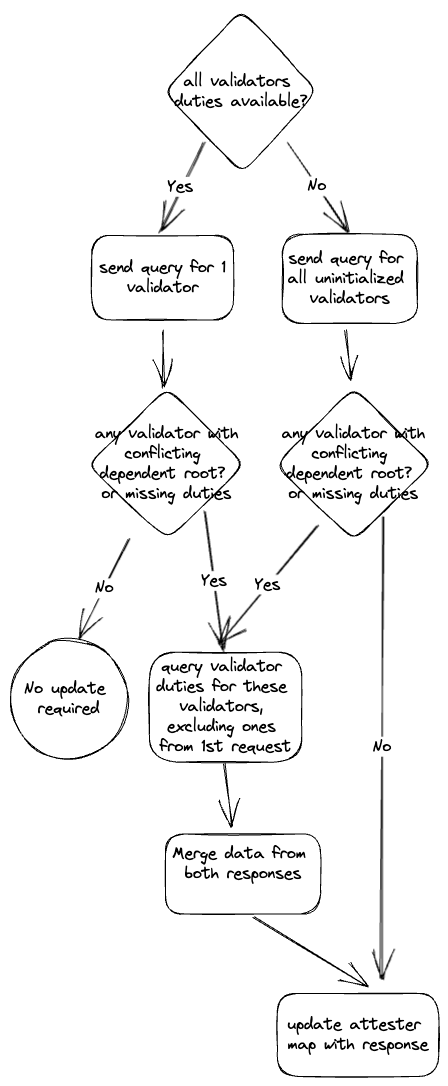

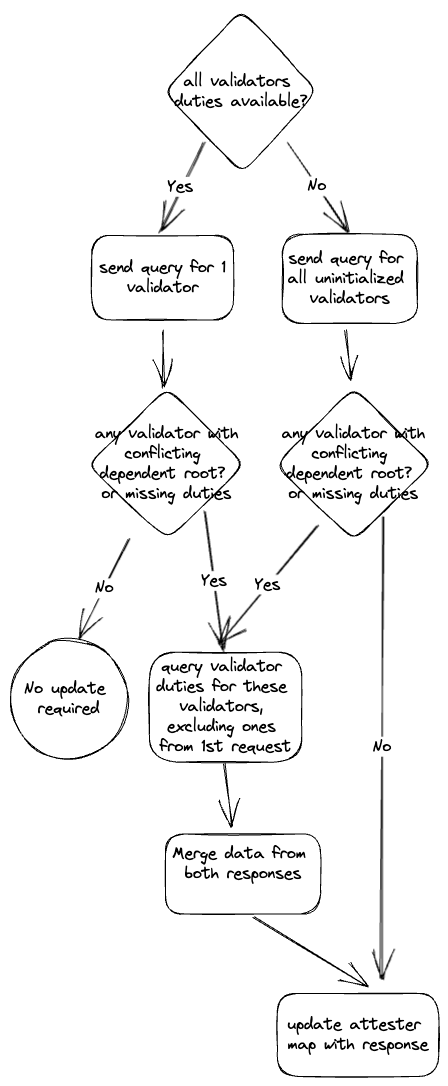

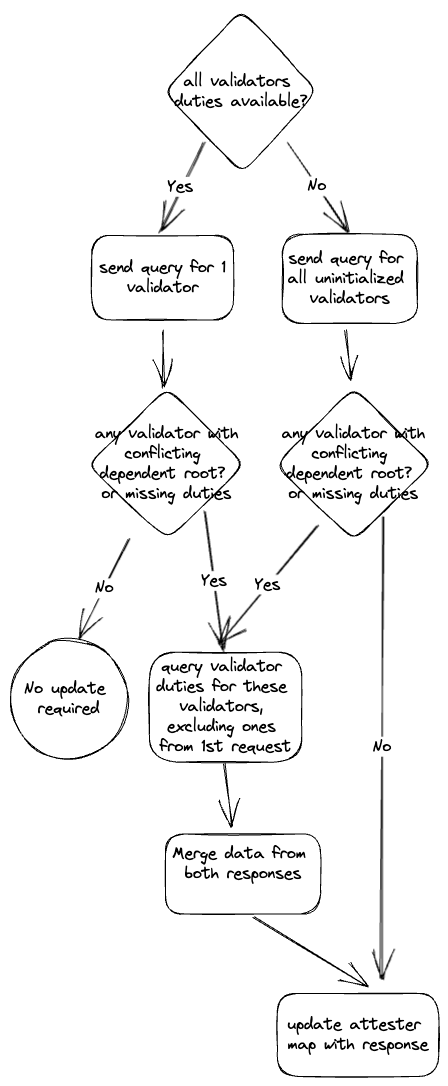

## Issue Addressed

#4157

## Proposed Changes

See description in #4157.

In diagram form:

Co-authored-by: Jimmy Chen <jimmy@sigmaprime.io>

Pull request successfully merged into unstable. Build succeeded!


Issue Addressed

#4157

Proposed Changes

See description in #4157.

In diagram form: