

Download network dapps and load them from filesystem #144


```js
node: {
  fs: 'empty'
},
target: 'electron-renderer',
```


Without this, I couldn't use modules in src/ that call require('fs') (require('fs') would return undefined), even though window.require('fs') worked fine in my src/ files.

This also removes the need to use window.require instead of require in src/.

I'm not sure whether this has other consequences: some warnings and errors occur during bundling, and CI doesn't pass because of them.


No problem: the React app runs precisely in the renderer process. I'll have a look at the CI error; building locally works fine.

```js
// We store the URL to allow GH Hint URL updates for a given hash
// to take effect immediately, and to disregard past failed attempts at
// getting this file from other URLs.
```


Attempts are keyed on hash+url right now.

Should we? It's a DDoS vector if the target host stays the same. If an attacker registers (some hash, "http://victim-website.com/") on GH Hint and then, every few minutes, changes the URL to http://victim-website.com/?x, http://victim-website.com/?xx, http://victim-website.com/?xxx and so on, then ExpoRetry never kicks in.

Or is this a non-issue because of costs etc.?

I'm thinking in particular of somebody registering a dapp whose icon or manifest URL in GitHub Hint points to some big file like http://victim-website.com/bigfile.zip.

Parity UI tries to download the icons and manifests of network dapps each time the user goes to the dapps list (homepage). If the download fails (file too big), ExpoRetry blacklists {url, hash} with an exponentially increasing delay; however, this backoff is reset each time the URL is updated on GH Hint. If the attacker regularly updates the URL to http://victim-website.com/bigfile.zip?x etc., the victim website has a bad time.

This could be fixed by keying the attempts on the hash only (although the attacker could regularly update the manifest/icon hash in the dapp registry...). What do you think?


cc @tomusdrw


Sorry, I only just noticed this comment. Can we maybe key ExpoRetry on the hash rather than on the destination?

That said, I don't think guarding against modification of the entry is something we should consider: it will always be possible, either by modifying the URL or the hash itself.
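A minimal sketch of keying the backoff on the hash alone, as suggested above. The class name, `BASE_DELAY_MS` and the in-memory `failHistory` shape are hypothetical; the PR's ExpoRetry keys on hash+URL and persists its history to disk.

```javascript
// Hypothetical: base delay after the first failure (not the PR's value).
const BASE_DELAY_MS = 60 * 1000;

// Exponential-backoff tracker keyed on the content hash only, so rotating
// the URL registered in GH Hint doesn't reset the backoff.
class HashKeyedExpoRetry {
  constructor (now = () => Date.now()) {
    this.now = now;
    this.failHistory = {}; // hash -> { attempts, lastFailure }
  }

  canAttempt (hash) {
    const entry = this.failHistory[hash];
    if (!entry) return true;
    // Wait BASE_DELAY_MS * 2^(attempts - 1) after the last failure.
    const delay = BASE_DELAY_MS * Math.pow(2, entry.attempts - 1);
    return this.now() - entry.lastFailure >= delay;
  }

  registerFailedAttempt (hash) {
    const entry = this.failHistory[hash] || { attempts: 0, lastFailure: 0 };
    entry.attempts += 1;
    entry.lastFailure = this.now();
    this.failHistory[hash] = entry;
  }
}
```

With this key, `?x`, `?xx`, `?xxx` variants of the same URL all share one backoff entry; as noted above, changing the hash itself still starts a fresh one.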

```diff
@@ -45,18 +49,10 @@ function createWindow () {
   mainWindow = new BrowserWindow({
     height: 800,
-    width: 1200
+    width: 1200,
+    webPreferences: { nodeIntegrationInWorker: true }
```


Should be fine, we are not requiring any external files as web workers.

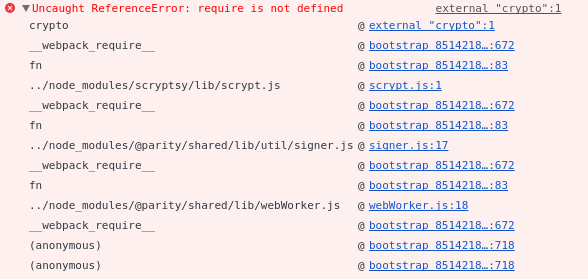

Is the error stack longer? Who is requiring @parity/shared/lib/webWorker.js? I'd be more inclined to remove web workers altogether.


That's the whole error stack.

We import some files from @parity/shared (for example, `import { initStore } from '@parity/shared/lib/redux'` in index.parity.js) that require the @parity/shared worker (in that case, initStore calls setupWorker). I imagine that's where it's coming from.


Does this need to be updated? Lines 92 to 104 in 5ad324f

Also, this becomes outdated: Lines 91 to 93 in 5ad324f


Looks good!

```js
if (app.type === 'network') {
  return HashFetch.get().fetch(this._api, app.contentHash, 'dapp')
    .then(appPath => {
      app.localUrl = `file://${appPath}/index.html`;
```


Does this work on Windows? I thought `file:///` (three slashes) was required.


Good question! I need to check.
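One way to sidestep the question (not what the PR currently does) is to let Node.js build the URL. With `file://${appPath}`, POSIX paths happen to yield three slashes because they start with `/`, but a Windows path such as `C:\...` yields `file://C:\...`, which doesn't follow the `file:///C:/...` form. A sketch, assuming the bundled Node.js is 10.12 or later (where `url.pathToFileURL` exists); `dappLocalUrl` is a hypothetical helper name.

```javascript
const path = require('path');
const { pathToFileURL } = require('url');

// Build the dapp's entry URL from its local directory. pathToFileURL adds
// the right number of slashes, converts Windows backslashes and drive
// letters, and percent-encodes characters such as spaces.
function dappLocalUrl (appPath) {
  return pathToFileURL(path.join(appPath, 'index.html')).href;
}

// On Linux/macOS this prints file:///home/user/hashfetch/files/abc/index.html
console.log(dappLocalUrl('/home/user/hashfetch/files/abc'));
```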

```js
const manifestHash = bytesToHex(manifestId).substr(2);

return Promise.all([
  HashFetch.get().fetch(api, imageHash, 'file').catch((e) => { console.warn(`Couldn't download icon for dapp ${appId}`, e); }),
```


This will resolve the promise to undefined in case of an error; is that OK?


Yes, this is expected. We still want to display the dapp if the icon fetch failed. The only consequence is that the icon URL will be file://undefined.
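If `file://undefined` ever becomes a problem, a small guard keeps the "still show the dapp" behaviour without producing a bogus URL. `iconUrl`, `loadIcon` and the `null` fallback are hypothetical; `fetchIcon` stands in for `HashFetch.get().fetch(api, imageHash, 'file')`.

```javascript
// Hypothetical helper: only build a file:// URL when the icon download
// actually produced a path; otherwise return a fallback (e.g. a default icon).
function iconUrl (iconPath, fallback = null) {
  return iconPath ? `file://${iconPath}` : fallback;
}

// Same pattern as the snippet above: .catch() turns a failed icon fetch into
// `undefined`, so the dapp is still listed, just without "file://undefined".
async function loadIcon (fetchIcon, appId) {
  const iconPath = await fetchIcon().catch((e) => {
    console.warn(`Couldn't download icon for dapp ${appId}`, e);
  });
  return iconUrl(iconPath);
}
```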

src/util/hashFetch/expoRetry.js


```js
load () {
  const filePath = this._getFilePath();

  return fsExists(filePath)
```


I'd skip fsExists: it's the same as just reading the file and handling the error if it doesn't exist.
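A sketch of loading without the separate existence check, assuming the history is stored as JSON and that a missing file just means no failures have been recorded yet. `loadFailHistory` is a hypothetical standalone version of `load()`; it uses Node's built-in `fs.promises` (Node.js 10+) rather than the PR's promisified helpers. Besides saving a syscall, it avoids the race between checking for the file and reading it.

```javascript
const fs = require('fs');

async function loadFailHistory (filePath) {
  try {
    const content = await fs.promises.readFile(filePath, 'utf8');
    return JSON.parse(content);
  } catch (e) {
    if (e.code === 'ENOENT') {
      return {}; // first run: nothing stored yet
    }
    throw e; // permission errors, corrupt JSON, etc. reach the caller
  }
}
```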

src/util/hashFetch/expoRetry.js


```js
    return fsWriteJson(this._getFilePath(), this.failHistory);
  }
});
```


Since we are not handling errors here it seems that if it fails once it will never be attempted again, right? Should we at least print a warning if that happens?


Nice catch! (Or rather, missing catch :P) I don't think we should give up on writing for good just because it failed once. I'll add a catch.
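The snippet above is cut off, so this assumes the writes are chained on a promise. If so, one rejected write leaves the chain rejected and every later `.then()` is skipped, which matches the "never attempted again" concern. A sketch with a hypothetical `FailHistoryStore` that logs the failure and keeps the chain alive (built-in `fs.promises` used instead of `fsWriteJson`):

```javascript
const fs = require('fs');

class FailHistoryStore {
  constructor (filePath) {
    this.filePath = filePath;
    this.failHistory = {};
    this._writeQueue = Promise.resolve();
  }

  // Serialize writes. Without the .catch(), a single failed write would
  // leave _writeQueue rejected and no later save() would ever run.
  save () {
    this._writeQueue = this._writeQueue
      .then(() => fs.promises.writeFile(this.filePath, JSON.stringify(this.failHistory)))
      .catch((e) => {
        console.warn(`Couldn't write ${this.filePath}`, e);
      });
    return this._writeQueue;
  }
}
```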

src/util/hashFetch/index.js


```js
}

function checkHashMatch (hash, path) {
  return fsReadFile(path).then(content => {
```


This will read the whole file into memory; it might be better to hash it incrementally without buffering it. In general we should avoid reading files fetched over the network into memory; it's better to stream them if possible.
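A sketch of hashing as a stream. Assumption: `sha256` is only a stand-in. Parity's content hashes are Keccak-256, which Node's built-in `crypto` doesn't provide (its `sha3-256` is a different function), so a real version would plug in a Keccak-256 hasher with the same update/digest shape. `hashFileStream` is a hypothetical name.

```javascript
const fs = require('fs');
const crypto = require('crypto');

// Feed the file to the hasher chunk by chunk; memory use stays constant
// regardless of file size.
function hashFileStream (filePath, algorithm = 'sha256') {
  return new Promise((resolve, reject) => {
    const hasher = crypto.createHash(algorithm);
    fs.createReadStream(filePath)
      .on('error', reject)
      .on('data', (chunk) => hasher.update(chunk))
      .on('end', () => resolve(hasher.digest('hex')));
  });
}

function checkHashMatch (expectedHash, filePath) {
  const expected = expectedHash.replace(/^0x/, '').toLowerCase();
  return hashFileStream(filePath).then((actual) => {
    if (actual !== expected) {
      throw new Error(`Hash mismatch: expected ${expected}, got ${actual}`);
    }
  });
}
```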

src/util/hashFetch/index.js


```js
const finalPath = path.join(getHashFetchPath(), 'files', hash);

return download(url, tempPath)
  // Don't register a failed attempt if the download failed; the user might be offline.
```


We should actually distinguish between:

- User offline

- Size limit reached

- Server returned non-200 response

We can skip registering the failed attempt only in the first case; skipping it in the other two opens up a DDoS vector.
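A sketch of that distinction. The error classes and the set of "offline" codes are assumptions (a heuristic, not an exhaustive classification); only the Node.js error codes themselves are real.

```javascript
// Hypothetical error types a download() helper could throw for the two
// server-side cases listed above.
class SizeLimitError extends Error {}

class HttpStatusError extends Error {
  constructor (statusCode) {
    super(`Server responded with HTTP ${statusCode}`);
    this.statusCode = statusCode;
  }
}

// Heuristic: Node.js DNS/socket error codes that usually mean the user has
// no connectivity, rather than the remote server misbehaving.
const OFFLINE_ERROR_CODES = new Set(['ENOTFOUND', 'EAI_AGAIN', 'ENETUNREACH']);

function shouldRegisterFailedAttempt (err) {
  if (err instanceof SizeLimitError || err instanceof HttpStatusError) {
    return true; // server-side problem: back off to close the DDoS vector
  }
  if (err && OFFLINE_ERROR_CODES.has(err.code)) {
    return false; // probably offline: don't penalise the URL
  }
  return true; // unknown error: register it, to be on the safe side
}
```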


You're right. I think it's easier for now to register the failed attempt in any case, and assume the user is online.

```js
  });
}

function download (url, destinationPath) {
```


What will happen if the url is file:///etc/passwd?


`download` is only called by `hashDownload`, which is always called with a URL guaranteed to start with `http`:

shell/src/util/hashFetch/index.js

Lines 161 to 172 in f76f89c

```js
if (commit === '0x0000000000000000000000000000000000000000') { // The slug is the URL to a file
  if (!slug.toLowerCase().startsWith('http')) { throw new Error(`GitHub Hint URL ${slug} isn't HTTP/HTTPS.`); }
  url = slug;
  zip = false;
} else if (commit === '0x0000000000000000000000000000000000000001') { // The slug is the URL to a dapp zip file
  if (!slug.toLowerCase().startsWith('http')) { throw new Error(`GitHub Hint URL ${slug} isn't HTTP/HTTPS.`); }
  url = slug;
  zip = true;
} else { // The slug is the `owner/repo` of a dapp stored in GitHub
  url = `https://codeload.github.com/${slug}/zip/${commit.substr(2)}`;
  zip = true;
}
```

I'll add a check in the download function for future-proofing.
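A sketch of such a check, parsing the URL instead of matching a prefix. Note that `startsWith('http')` alone would also accept schemes like `httpx:` or `http-evil:`; comparing `URL.protocol` does not. `assertHttpUrl` is a hypothetical name.

```javascript
const { URL } = require('url');

// Defensive check for the top of download(): accept only http: and https:.
function assertHttpUrl (url) {
  let parsed;
  try {
    parsed = new URL(url);
  } catch (e) {
    throw new Error(`Invalid URL: ${url}`);
  }
  if (parsed.protocol !== 'http:' && parsed.protocol !== 'https:') {
    throw new Error(`Refusing to download non-HTTP(S) URL: ${url}`);
  }
  return parsed;
}
```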

```js
httpx.get(url, response => {
  var size = 0;

  response.on('data', (data) => {
```


Should we reject the promise on 'error' events (either a read error or an fs write error)?
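A sketch of `download()` that rejects on every failure path: connection errors on the request, read errors on the response, write errors on the file stream, a non-200 status, and the 20 MB limit from the PR description. Picking `http`/`https` from the URL and the error messages are assumptions; a partially written file is left for the caller to clean up, in line with the `.part` temp-file scheme.

```javascript
const fs = require('fs');
const http = require('http');
const https = require('https');

const MAX_SIZE = 20 * 1024 * 1024; // 20 MB, as in the PR description

function download (url, destinationPath, maxSize = MAX_SIZE) {
  const httpx = url.toLowerCase().startsWith('https:') ? https : http;

  return new Promise((resolve, reject) => {
    const request = httpx.get(url, (response) => {
      if (response.statusCode !== 200) {
        response.resume(); // drain the body so the socket can be released
        reject(new Error(`Server responded with HTTP ${response.statusCode}`));
        return;
      }

      const file = fs.createWriteStream(destinationPath);
      let size = 0;

      // Stop both streams and reject; extra reject() calls are no-ops.
      const fail = (err) => {
        response.destroy();
        file.destroy();
        reject(err);
      };

      response.on('data', (data) => {
        size += data.length;
        if (size > maxSize) {
          fail(new Error(`Download exceeds ${maxSize} bytes`));
        }
      });
      response.on('error', fail); // read error
      file.on('error', fail); // fs write error
      file.on('finish', () => resolve(destinationPath));
      response.pipe(file);
    });

    request.on('error', reject); // DNS / connection errors
  });
}
```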


Works like a charm on Kovan! I'm syncing mainnet now


src/util/hashFetch/index.js


```js
const fsReadFile = util.promisify(fs.readFile);
const fsUnlink = util.promisify(fs.unlink);
const fsReaddir = util.promisify(fs.readdir);
const fsRmdir = util.promisify(fs.rmdir);
```


I saw you included fs-extra in package.json, great idea. In that case you can use fs-extra directly: it includes everything in fs and is promise-based if no callback is passed.


It's fs-extra, which requires universalify (see RyanZim/universalify#6). A quicker hack might be to exclude universalify in the Uglify plugin.

If we merge this PR, all dapps will be able to use

We can probably remove this piece of code, as it's less of a security issue. The only permission we're using that I can think of is the signer requiring camera access for Parity Signer. Again, same point as above: if the manifest.json is easily fetchable here, it'd be easier to allow all permissions for built-in dapps and disallow them for network ones.

How realistic is it that we get this through this week?

re 1. Excluding universalify from the Uglify plugin doesn't seem to make a difference (or maybe I didn't exclude it correctly). I tried:

```diff
 new webpack.optimize.UglifyJsPlugin({
   sourceMap: true,
   screwIe8: true,
   compress: {
     warnings: false
   },
   output: {
     comments: false
   },
+  exclude: /universalify/
 })
```

re 2. Off the top of my head: we don't really have easy access to the manifest fields here.

re 3. Same here. According to https://electronjs.org/docs/tutorial/security#4-handle-session-permission-requests-from-remote-content,

@5chdn I addressed the grumbles; the last few commits need reviewing. The branch needs some testing to make sure it works fine (also on Windows, and on mainnet). CI still doesn't pass. Apart from that, I think it's good to go.

Concerning 3: yes, disabling all permissions for webviews seems reasonable.
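A sketch of what disabling all permissions could look like, following the Electron security guide linked above. `setPermissionRequestHandler` is Electron's `Session` API, and its handler receives `(webContents, permission, callback)`; which session the network-dapp webviews use (e.g. `session.fromPartition(...)` for their partition) and the `allowed` escape hatch are assumptions. The session is passed in as a parameter so the function can be exercised without Electron.

```javascript
// Deny every permission request made by content in the given Electron session.
function denyAllPermissions (ses, allowed = []) {
  ses.setPermissionRequestHandler((webContents, permission, callback) => {
    // `allowed` is a hypothetical escape hatch, e.g. ['media'] for a
    // built-in dapp that needs the camera; leave it empty for network dapps.
    callback(allowed.includes(permission));
  });
}
```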

60d2e7f to 3db6aa7

CI passed :)

Even works well with --light.

Network dapps are now completely handled by Parity UI.

Uses parity-js/ui#10

- Download to `~/.config/parity-ui/hashfetch/partial/<hash>.part`
- Move to `~/.config/parity-ui/hashfetch/files/<hash>` if it's a regular file; if it's a zip archive, extract it temporarily to `~/.config/parity-ui/hashfetch/partial-extract/<hash>` and then move it to `~/.config/parity-ui/hashfetch/files/<hash>`

Manifests and icons are fetched on the dapps list page (Dapps); the dapps themselves (their contents) are fetched on demand, when the user wants to open the dapp. I added a loading screen shown when a dapp takes more than 1.5 seconds to load (we then assume it's a network dapp that is being downloaded).
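A sketch of that on-disk layout, assuming `root` is `~/.config/parity-ui/hashfetch`. `download` and `extractZip` are injected placeholders (not the PR's functions), hash verification is only marked where it would run, and built-in `fs.promises` (Node.js 10.12+ for recursive `mkdir`) stands in for the PR's fs helpers.

```javascript
const fs = require('fs');
const path = require('path');

// Paths for one hash under the hash-fetch root.
function hashFetchPaths (root, hash) {
  return {
    partial: path.join(root, 'partial', `${hash}.part`),
    partialExtract: path.join(root, 'partial-extract', hash),
    final: path.join(root, 'files', hash)
  };
}

// Hypothetical flow: download into partial/, then move (regular file) or
// extract-then-move (zip) into files/. `download(url, dest)` and
// `extractZip(zipPath, dir)` are placeholders returning promises.
async function fetchToCache (root, hash, url, { zip, download, extractZip }) {
  const p = hashFetchPaths(root, hash);
  for (const dir of ['partial', 'partial-extract', 'files']) {
    await fs.promises.mkdir(path.join(root, dir), { recursive: true });
  }

  await download(url, p.partial);
  // (Hash verification of p.partial would go here, before anything is moved.)

  if (zip) {
    await extractZip(p.partial, p.partialExtract);
    await fs.promises.unlink(p.partial);
    // Assumes files/<hash> doesn't exist yet; renaming onto a non-empty
    // directory fails.
    await fs.promises.rename(p.partialExtract, p.final);
  } else {
    // On POSIX, rename within one filesystem is atomic, so files/<hash>
    // never holds a half-written download.
    await fs.promises.rename(p.partial, p.final);
  }
  return p.final;
}
```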

Tomek advised me to set an upper limit on downloads:

I set 20 MB as the maximum download size, regardless of whether it's a regular file (manifest or icon) or a dapp zip archive.

Tomek's reply to my suggestion to blacklist the URL locally if the download fails (to prevent DDoSing a target victim server):

In case of a hash mismatch or an oversized file (but not in case of a connection error), we register the timestamp of the failed attempt; the next time we request this hash with this URL, we check whether enough time has elapsed. The failed-attempts history is stored in `hashfetch/failed_history.json`.

I could only test on Kovan. It would be nice to compare `master` and this branch on mainnet to see if there are differences (in the dapps displayed, rejected, working...).

Other notes:

- … (commit `0x0000000000000000000000000000000000000000`) rather than the URL to a zip archive (commit `0x0000000000000000000000000000000000000001`) are rejected.

Let me know what you think!

(cc @tomusdrw)