
AE_1 #3

base: AE


Commits on Dec 4, 2018

[MINOR][SQL] Combine the same codes in test cases

## What changes were proposed in this pull request?

In `DDLSuite`, four test cases contain the same code. Extracting that code into a function removes the duplication.

## How was this patch tested?

Existing tests.

Closes apache#23194 from CarolinePeng/Update_temp.

Authored-by: 彭灿00244106 <00244106@zte.intra>
Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

Commit 93f5592

[SPARK-24423][FOLLOW-UP][SQL] Fix error example

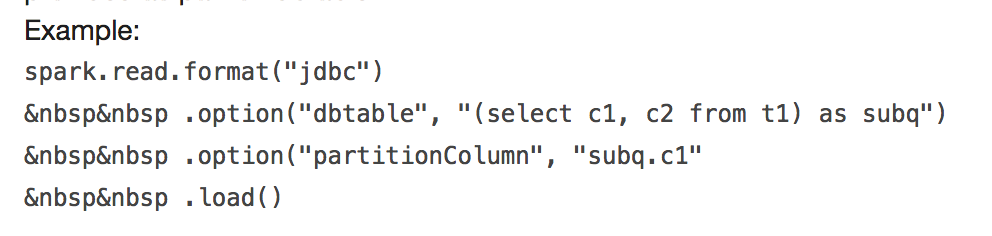

## What changes were proposed in this pull request?

The existing example throws:

```
requirement failed: When reading JDBC data sources, users need to specify all or none for the following options: 'partitionColumn', 'lowerBound', 'upperBound', and 'numPartitions'
```

and

```
User-defined partition column subq.c1 not found in the JDBC relation ...
```

This PR fixes the erroneous example.

## How was this patch tested?

Manual tests.

Closes apache#23170 from wangyum/SPARK-24499.

Authored-by: Yuming Wang <yumwang@ebay.com>
Signed-off-by: Sean Owen <sean.owen@databricks.com>

Commit 06a3b6a

[SPARK-26178][SQL] Use java.time API for parsing timestamps and dates from CSV

## What changes were proposed in this pull request?

In the PR, I propose to use the **java.time API** for parsing timestamps and dates from CSV content with microsecond precision. The SQL config `spark.sql.legacy.timeParser.enabled` allows switching back to the previous behaviour, which uses `java.text.SimpleDateFormat`/`FastDateFormat` for parsing/generating timestamps/dates.

## How was this patch tested?

It was tested by `UnivocityParserSuite`, `CsvExpressionsSuite`, `CsvFunctionsSuite` and `CsvSuite`.

Closes apache#23150 from MaxGekk/time-parser.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>
Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>
Signed-off-by: Sean Owen <sean.owen@databricks.com>

Commit f982ca0

[SPARK-26233][SQL] CheckOverflow when encoding a decimal value

## What changes were proposed in this pull request?

When we encode a Decimal from an external source, we don't check for overflow. That check is useful not only to enforce that the value can be represented in the specified range, but also because it changes the underlying data to the right precision/scale. Since our code generation assumes that a decimal has exactly the same precision and scale as its data type, failing to enforce this can lead to corrupted output/results when there are subsequent transformations.

## How was this patch tested?

Added UT.

Closes apache#23210 from mgaido91/SPARK-26233.

Authored-by: Marco Gaido <marcogaido91@gmail.com>
Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>
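To illustrate why the overflow check also matters for precision/scale, here is a minimal Python sketch using the standard `decimal` module. It is not Spark's implementation: the function name `check_overflow` and the choice to return `None` on overflow are assumptions made for illustration only.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Optional


def check_overflow(value: Decimal, precision: int, scale: int) -> Optional[Decimal]:
    """Hypothetical helper: rescale `value` to `scale` fractional digits and
    return None when the result needs more than `precision` total digits."""
    # Decimal(1).scaleb(-2) is 0.01, i.e. the quantum for scale=2.
    quantum = Decimal(1).scaleb(-scale)
    # Rescaling changes the underlying representation to the target scale.
    rescaled = value.quantize(quantum, rounding=ROUND_HALF_UP)
    # Count the digits of the rescaled coefficient against the precision.
    if len(rescaled.as_tuple().digits) > precision:
        return None
    return rescaled


# 123.456 fits decimal(5, 2) after rescaling; 1234.5 does not.
print(check_overflow(Decimal("123.456"), 5, 2))
print(check_overflow(Decimal("1234.5"), 5, 2))
```

The point of the sketch is that the returned value always carries exactly `scale` fractional digits, which is the invariant the commit message says later code relies on.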

Commit 556d83e

[SPARK-26119][CORE][WEBUI] Task summary table should contain only successful tasks' metrics

## What changes were proposed in this pull request?

The task summary table in the stage page currently displays the summary of all tasks. However, it should display the summary of successful tasks only, to follow the behavior of previous versions of Spark.

## How was this patch tested?

Added UT and attached a screenshot taken before the patch.

Closes apache#23088 from shahidki31/summaryMetrics.

Authored-by: Shahid <shahidki31@gmail.com>
Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Commit 35f9163

[SPARK-26094][CORE][STREAMING] createNonEcFile creates parent dirs.

## What changes were proposed in this pull request?

We explicitly avoid files with HDFS erasure coding for the streaming WAL and for event logs, as HDFS EC does not support all relevant APIs. However, the new builder API used has different semantics: it does not create parent dirs, and it does not resolve relative paths. This updates createNonEcFile to have similar semantics to the old API.

## How was this patch tested?

Ran tests with the WAL pointed at a non-existent dir, which failed before this change. Manually tested the new function with a relative path as well. Unit tests via Jenkins.

Closes apache#23092 from squito/SPARK-26094.

Authored-by: Imran Rashid <irashid@cloudera.com>
Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Commit 180f969

Commits on Dec 5, 2018

[SPARK-25829][SQL][FOLLOWUP] Refactor MapConcat in order to check properly the limit size

## What changes were proposed in this pull request?

The PR starts from the [comment](apache#23124 (comment)) in the main one and it aims at:

- simplifying the code for `MapConcat`;
- being more precise in checking the limit size.

## How was this patch tested?

Existing tests.

Closes apache#23217 from mgaido91/SPARK-25829_followup.

Authored-by: Marco Gaido <marcogaido91@gmail.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Commit 7143e9d

[SPARK-26252][PYTHON] Add support to run specific unittests and/or doctests in python/run-tests script

## What changes were proposed in this pull request?

This PR proposes adding a developer option, `--testnames`, to our testing script to allow running a specific set of unittests and doctests.

**1. Run unittests in the class**

```bash
./run-tests --testnames 'pyspark.sql.tests.test_arrow ArrowTests'
```

```
Running PySpark tests. Output is in /.../spark/python/unit-tests.log
Will test against the following Python executables: ['python2.7', 'pypy']
Will test the following Python tests: ['pyspark.sql.tests.test_arrow ArrowTests']
Starting test(python2.7): pyspark.sql.tests.test_arrow ArrowTests
Starting test(pypy): pyspark.sql.tests.test_arrow ArrowTests
Finished test(python2.7): pyspark.sql.tests.test_arrow ArrowTests (14s)
Finished test(pypy): pyspark.sql.tests.test_arrow ArrowTests (14s) ... 22 tests were skipped
Tests passed in 14 seconds

Skipped tests in pyspark.sql.tests.test_arrow ArrowTests with pypy:
    test_createDataFrame_column_name_encoding (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'
    test_createDataFrame_does_not_modify_input (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'
    test_createDataFrame_fallback_disabled (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'
    test_createDataFrame_fallback_enabled (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped ...
```

**2. Run single unittest in the class.**

```bash
./run-tests --testnames 'pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion'
```

```
Running PySpark tests. Output is in /.../spark/python/unit-tests.log
Will test against the following Python executables: ['python2.7', 'pypy']
Will test the following Python tests: ['pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion']
Starting test(pypy): pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion
Starting test(python2.7): pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion
Finished test(pypy): pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion (0s) ... 1 tests were skipped
Finished test(python2.7): pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion (8s)
Tests passed in 8 seconds

Skipped tests in pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion with pypy:
    test_null_conversion (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'
```

**3. Run doctests in single PySpark module.**

```bash
./run-tests --testnames pyspark.sql.dataframe
```

```
Running PySpark tests. Output is in /.../spark/python/unit-tests.log
Will test against the following Python executables: ['python2.7', 'pypy']
Will test the following Python tests: ['pyspark.sql.dataframe']
Starting test(pypy): pyspark.sql.dataframe
Starting test(python2.7): pyspark.sql.dataframe
Finished test(python2.7): pyspark.sql.dataframe (47s)
Finished test(pypy): pyspark.sql.dataframe (48s)
Tests passed in 48 seconds
```

Of course, you can mix them:

```bash
./run-tests --testnames 'pyspark.sql.tests.test_arrow ArrowTests,pyspark.sql.dataframe'
```

```
Running PySpark tests. Output is in /.../spark/python/unit-tests.log
Will test against the following Python executables: ['python2.7', 'pypy']
Will test the following Python tests: ['pyspark.sql.tests.test_arrow ArrowTests', 'pyspark.sql.dataframe']
Starting test(pypy): pyspark.sql.dataframe
Starting test(pypy): pyspark.sql.tests.test_arrow ArrowTests
Starting test(python2.7): pyspark.sql.dataframe
Starting test(python2.7): pyspark.sql.tests.test_arrow ArrowTests
Finished test(pypy): pyspark.sql.tests.test_arrow ArrowTests (0s) ... 22 tests were skipped
Finished test(python2.7): pyspark.sql.tests.test_arrow ArrowTests (18s)
Finished test(python2.7): pyspark.sql.dataframe (50s)
Finished test(pypy): pyspark.sql.dataframe (52s)
Tests passed in 52 seconds

Skipped tests in pyspark.sql.tests.test_arrow ArrowTests with pypy:
    test_createDataFrame_column_name_encoding (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'
    test_createDataFrame_does_not_modify_input (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'
    test_createDataFrame_fallback_disabled (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'
```

You can also use all other options (except `--modules`, which will be ignored):

```bash
./run-tests --testnames 'pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion' --python-executables=python
```

```
Running PySpark tests. Output is in /.../spark/python/unit-tests.log
Will test against the following Python executables: ['python']
Will test the following Python tests: ['pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion']
Starting test(python): pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion
Finished test(python): pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion (12s)
Tests passed in 12 seconds
```

See help below:

```bash
./run-tests --help
```

```
Usage: run-tests [options]

Options:
...

  Developer Options:
    --testnames=TESTNAMES
                        A comma-separated list of specific modules, classes
                        and functions of doctest or unittest to test. For
                        example, 'pyspark.sql.foo' to run the module as
                        unittests or doctests, 'pyspark.sql.tests FooTests'
                        to run the specific class of unittests,
                        'pyspark.sql.tests FooTests.test_foo' to run the
                        specific unittest in the class. '--modules' option is
                        ignored if they are given.
```

I intentionally grouped it as a developer option to be more conservative.

## How was this patch tested?

Manually tested. Negative tests were also done.

```bash
./run-tests --testnames 'pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion1' --python-executables=python
```

```
...
AttributeError: type object 'ArrowTests' has no attribute 'test_null_conversion1'
...
```

```bash
./run-tests --testnames 'pyspark.sql.tests.test_arrow ArrowT' --python-executables=python
```

```
...
AttributeError: 'module' object has no attribute 'ArrowT'
...
```

```bash
./run-tests --testnames 'pyspark.sql.tests.test_ar' --python-executables=python
```

```
...
/.../python2.7: No module named pyspark.sql.tests.test_ar
```

Closes apache#23203 from HyukjinKwon/SPARK-26252.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>
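The `--testnames` format described in the help text above (comma-separated items, each a module optionally followed by a class and a method) can be sketched in plain Python. This is only an illustration of the format, not the code in `run-tests`; `TestTarget` and `parse_testnames` are hypothetical names.

```python
from typing import List, NamedTuple, Optional


class TestTarget(NamedTuple):
    """Hypothetical record for one --testnames entry."""
    module: str
    test_class: Optional[str]
    method: Optional[str]


def parse_testnames(testnames: str) -> List[TestTarget]:
    """Split a --testnames value into (module, class, method) targets."""
    targets = []
    for item in testnames.split(","):
        parts = item.split()
        if not parts:
            continue  # ignore empty entries such as a trailing comma
        module = parts[0]
        test_class = method = None
        if len(parts) > 1:
            # 'FooTests.test_foo' -> class 'FooTests', method 'test_foo'
            test_class, _, rest = parts[1].partition(".")
            method = rest or None
        targets.append(TestTarget(module, test_class, method))
    return targets


for target in parse_testnames(
    "pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion,pyspark.sql.dataframe"
):
    print(target)
```

An entry with only a module name leaves `test_class` and `method` unset, which corresponds to running the whole module as unittests or doctests.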

Commit 7e3eb3c

[SPARK-26133][ML][FOLLOWUP] Fix doc for OneHotEncoder

## What changes were proposed in this pull request?

This fixes the doc of the renamed OneHotEncoder in PySpark.

## How was this patch tested?

N/A

Closes apache#23230 from viirya/remove_one_hot_encoder_followup.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Commit 169d9ad

[SPARK-26271][FOLLOW-UP][SQL] remove unuse object SparkPlan

## What changes were proposed in this pull request?

This code was introduced in PR apache#11190 but has not been used since PR apache#14548, so this PR removes it. Thanks.

## How was this patch tested?

N/A

Closes apache#23227 from heary-cao/unuseSparkPlan.

Authored-by: caoxuewen <cao.xuewen@zte.com.cn>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Commit 7bb1dab

[SPARK-26151][SQL][FOLLOWUP] Return partial results for bad CSV records

## What changes were proposed in this pull request?

Updated the SQL migration guide according to the changes in apache#23120.

Closes apache#23235 from MaxGekk/failuresafe-partial-result-followup.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>
Co-authored-by: Maxim Gekk <max.gekk@gmail.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Commit dd518a1

Commits on Dec 6, 2018

[SPARK-26275][PYTHON][ML] Increases timeout for StreamingLogisticRegressionWithSGDTests.test_training_and_prediction test

## What changes were proposed in this pull request?

This test looks flaky:

- https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/99704/console
- https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/99569/console
- https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/99644/console
- https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/99548/console
- https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/99454/console
- https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/99609/console

```
======================================================================
FAIL: test_training_and_prediction (pyspark.mllib.tests.test_streaming_algorithms.StreamingLogisticRegressionWithSGDTests)
Test that the model improves on toy data with no. of batches
----------------------------------------------------------------------
Traceback (most recent call last):
  File "/home/jenkins/workspace/SparkPullRequestBuilder/python/pyspark/mllib/tests/test_streaming_algorithms.py", line 367, in test_training_and_prediction
    self._eventually(condition)
  File "/home/jenkins/workspace/SparkPullRequestBuilder/python/pyspark/mllib/tests/test_streaming_algorithms.py", line 78, in _eventually
    % (timeout, lastValue))
AssertionError: Test failed due to timeout after 30 sec, with last condition returning: Latest errors: 0.67, 0.71, 0.78, 0.7, 0.75, 0.74, 0.73, 0.69, 0.62, 0.71, 0.69, 0.75, 0.72, 0.77, 0.71, 0.74

----------------------------------------------------------------------
Ran 13 tests in 185.051s

FAILED (failures=1, skipped=1)
```

This seems to have started after the parallelism in Jenkins was increased to speed up the build in apache#23111. I am able to reproduce this manually when the resource usage is heavy (with a manual decrease of the timeout).

## How was this patch tested?

Manually tested by

```
cd python
./run-tests --testnames 'pyspark.mllib.tests.test_streaming_algorithms StreamingLogisticRegressionWithSGDTests.test_training_and_prediction' --python-executables=python
```

Closes apache#23236 from HyukjinKwon/SPARK-26275.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Commit ab76900

[SPARK-25274][PYTHON][SQL] In toPandas with Arrow send un-ordered record batches to improve performance

## What changes were proposed in this pull request?

When executing `toPandas` with Arrow enabled, partitions that arrive in the JVM out-of-order must be buffered before they can be sent to Python. This causes an excess of memory to be used in the driver JVM and increases the time it takes to complete, because data must sit in the JVM waiting for preceding partitions to come in.

This change sends un-ordered partitions to Python as soon as they arrive in the JVM, followed by a list of partition indices so that Python can assemble the data in the correct order. This way, data is not buffered at the JVM and there is no waiting on particular partitions, so performance will be increased.

Followup to apache#21546

## How was this patch tested?

Added a new test with a large number of batches per partition, and a test that forces a small delay in the first partition. These test that partitions are collected out-of-order and then are put in the correct order in Python.

## Performance Tests - toPandas

Tests were run on a 4-node standalone cluster with 32 cores total, 14.04.1-Ubuntu and OpenJDK 8. They measured wall clock time to execute `toPandas()` and took the average best time of 5 runs/5 loops each.

Test code

```python
import time

from pyspark.sql.functions import rand

# `spark` is the active SparkSession.
df = spark.range(1 << 25, numPartitions=32).toDF("id").withColumn("x1", rand()).withColumn("x2", rand()).withColumn("x3", rand()).withColumn("x4", rand())
for i in range(5):
    start = time.time()
    _ = df.toPandas()
    elapsed = time.time() - start
```

Spark config

```
spark.driver.memory 5g
spark.executor.memory 5g
spark.driver.maxResultSize 2g
spark.sql.execution.arrow.enabled true
```

Current Master w/ Arrow stream | This PR
---------------------|------------
5.16207 | 4.342533
5.133671 | 4.399408
5.147513 | 4.468471
5.105243 | 4.36524
5.018685 | 4.373791

Avg Master | Avg This PR
------------------|--------------
5.1134364 | 4.3898886

Speedup of **1.164821449**

Closes apache#22275 from BryanCutler/arrow-toPandas-oo-batches-SPARK-25274.

Authored-by: Bryan Cutler <cutlerb@gmail.com>
Signed-off-by: Bryan Cutler <cutlerb@gmail.com>
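The reordering step described above (batches sent in arrival order, followed by a list of partition indices) can be sketched in plain Python. This is a simplified illustration that assumes exactly one batch per partition; `reorder_batches` is a hypothetical name and not the PySpark implementation.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def reorder_batches(batches: Sequence[T], partition_indices: Sequence[int]) -> List[T]:
    """Place each batch at its partition position.

    `batches` is in arrival order; `partition_indices[i]` is the partition
    that the i-th arrived batch belongs to (assumed: one batch per partition).
    """
    if len(batches) != len(partition_indices):
        raise ValueError("every batch needs exactly one partition index")
    ordered: List[T] = [None] * len(batches)  # type: ignore[list-item]
    for batch, index in zip(batches, partition_indices):
        ordered[index] = batch
    return ordered


# Partition 2 arrived first, then partition 0, then partition 1.
print(reorder_batches(["p2", "p0", "p1"], [2, 0, 1]))
```

Because the indices are sent after the data, the receiver can start consuming batches immediately and only needs the index list at the end to restore the order.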

Commit ecaa495

[SPARK-26236][SS] Add kafka delegation token support documentation.

## What changes were proposed in this pull request?

Kafka delegation token support was implemented in [PR#22598](apache#22598), but that PR didn't contain documentation because of rapid changes. Now that it has been merged, this PR documents it.

## How was this patch tested?

jekyll build + manual html check

Closes apache#23195 from gaborgsomogyi/SPARK-26236.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>
Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Commit b14a26e

[SPARK-26194][K8S] Auto generate auth secret for k8s apps.

This change modifies the logic in the SecurityManager to do two things:

- generate unique app secrets also when k8s is being used
- only store the secret in the user's UGI on YARN

The latter is needed so that k8s won't unnecessarily create k8s secrets for the UGI credentials when only the auth token is stored there.

On the k8s side, the secret is propagated to executors using an environment variable instead. This ensures it works in both client and cluster mode.

Security doc was updated to mention the feature and clarify that proper access control in k8s should be enabled for it to be secure.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes apache#23174 from vanzin/SPARK-26194.

Commit dbd90e5

Commits on Dec 7, 2018

[SPARK-26289][CORE] cleanup enablePerfMetrics parameter from BytesToBytesMap

## What changes were proposed in this pull request?

`enablePerfMetrics` was originally introduced in `BytesToBytesMap` to control `getNumHashCollisions`, `getTimeSpentResizingNs` and `getAverageProbesPerLookup`. However, as Spark has evolved, this parameter is now used only for `getAverageProbesPerLookup` and is always set to true when using `BytesToBytesMap`. It is also dangerous to let the flag decide whether `getAverageProbesPerLookup` is available, since the method throws an `IllegalStateException` otherwise. So this PR removes the `enablePerfMetrics` parameter from `BytesToBytesMap`. Thanks.

## How was this patch tested?

The existing test cases.

Closes apache#23244 from heary-cao/enablePerfMetrics.

Authored-by: caoxuewen <cao.xuewen@zte.com.cn>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Commit bfc5569

[SPARK-26263][SQL] Validate partition values with user provided schema

## What changes were proposed in this pull request?

Currently, if the user provides a data schema, partition column values are converted according to it. But if the conversion fails, e.g. converting a string to an int, the column value is null.

This PR proposes to throw an exception in such a case, instead of silently converting to a null value:

1. These null partition column values don't make sense to users in most cases. It is better to show the conversion failure, so that users can adjust the schema or ETL jobs to fix it.
2. There are always exceptions on such conversion failures for non-partition data columns. Partition columns should have the same behavior.

We can reproduce the case above as follows:

```
/tmp/testDir
├── p=bar
└── p=foo
```

If we run:

```
val schema = StructType(Seq(StructField("p", IntegerType, false)))
spark.read.schema(schema).csv("/tmp/testDir/").show()
```

We will get:

```
+----+
|   p|
+----+
|null|
|null|
+----+
```

## How was this patch tested?

Unit test

Closes apache#23215 from gengliangwang/SPARK-26263.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>
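The proposed behavior (fail loudly instead of producing null) can be sketched outside Spark in a few lines of Python. This is a hedged illustration only: `cast_partition_values`, the schema-as-dict representation and the error message are all assumptions, not Spark's partition discovery code.

```python
from typing import Any, Callable, Dict


def cast_partition_values(path: str, schema: Dict[str, Callable[[str], Any]]) -> Dict[str, Any]:
    """Extract `name=value` path segments and cast them with the user schema.

    Raises instead of silently returning None when a cast fails.
    """
    values: Dict[str, Any] = {}
    for segment in path.strip("/").split("/"):
        if "=" not in segment:
            continue  # not a partition directory
        name, raw = segment.split("=", 1)
        caster = schema.get(name)
        if caster is None:
            continue  # column not covered by the user-provided schema
        try:
            values[name] = caster(raw)
        except ValueError as exc:
            raise RuntimeError(
                f"Failed to cast value `{raw}` of partition column `{name}`"
            ) from exc
    return values


print(cast_partition_values("/tmp/testDir/p=1", {"p": int}))
try:
    cast_partition_values("/tmp/testDir/p=bar", {"p": int})
except RuntimeError as err:
    print(err)
```

With the directory layout from the reproduction above, both `p=bar` and `p=foo` would raise here rather than yield a null partition value.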

Commit 5a140b7

[SPARK-26298][BUILD] Upgrade Janino to 3.0.11

## What changes were proposed in this pull request?

This PR aims to upgrade the Janino compiler to the latest version, 3.0.11. The following changes are listed in the [release note](http://janino-compiler.github.io/janino/changelog.html).

- Script with many "helper" variables.
- Java 9+ compatibility
- Compilation Error Messages Generated by JDK.
- Added experimental support for the "StackMapFrame" attribute; not active yet.
- Make Unparser more flexible.
- Fixed NPEs in various "toString()" methods.
- Optimize static method invocation with rvalue target expression.
- Added all missing "ClassFile.getConstant*Info()" methods, removing the necessity for many type casts.

## How was this patch tested?

Pass the Jenkins with the existing tests.

Closes apache#23250 from dongjoon-hyun/SPARK-26298.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>
Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

Commit 4772265

[SPARK-26060][SQL][FOLLOW-UP] Rename the config name.

## What changes were proposed in this pull request?

This is a follow-up of apache#23031 to rename the config name to `spark.sql.legacy.setCommandRejectsSparkCoreConfs`.

## How was this patch tested?

Existing tests.

Closes apache#23245 from ueshin/issues/SPARK-26060/rename_config.

Authored-by: Takuya UESHIN <ueshin@databricks.com>
Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

Commit 1ab3d3e

[SPARK-24243][CORE] Expose exceptions from InProcessAppHandle

Adds a new method to SparkAppHandle called getError which returns the exception (if present) that caused the underlying Spark app to fail.

New tests added to SparkLauncherSuite for the new method.

Closes apache#21849

Closes apache#23221 from vanzin/SPARK-24243.

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Sahil Takiar authored and Marcelo Vanzin committed Dec 7, 2018

Commit 543577a

[SPARK-26294][CORE] Delete Unnecessary If statement

## What changes were proposed in this pull request?

Delete an unnecessary `if` statement. An earlier check already returns when the number of records is less than or equal to zero, so the later check only runs when the number of records is greater than zero and is always true.

    ...................
    if (inMemSorter == null || inMemSorter.numRecords() <= 0) {
      return 0L;
    }
    ....................
    if (inMemSorter.numRecords() > 0) {
      .....................
    }

## How was this patch tested?

Existing tests

(Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests)
(If this patch involves UI changes, please attach a screenshot; otherwise, remove this)

Please review http://spark.apache.org/contributing.html before opening a pull request.

Closes apache#23247 from wangjiaochun/inMemSorter.

Authored-by: 10087686 <wang.jiaochun@zte.com.cn>
Signed-off-by: Sean Owen <sean.owen@databricks.com>

Commit 9b7679a

[MINOR][SQL][DOC] Correct parquet nullability documentation

## What changes were proposed in this pull request?

Parquet files appear to have nullability info when being written, not being read.

## How was this patch tested?

Some test code (running Spark 2.3, but the relevant code in DataSource looks identical on master):

    case class NullTest(bo: Boolean, opbol: Option[Boolean])
    val testDf = spark.createDataFrame(Seq(NullTest(true, Some(false))))

    defined class NullTest
    testDf: org.apache.spark.sql.DataFrame = [bo: boolean, opbol: boolean]

    testDf.write.parquet("s3://asana-stats/tmp_dima/parquet_check_schema")

    spark.read.parquet("s3://asana-stats/tmp_dima/parquet_check_schema/part-00000-b1bf4a19-d9fe-4ece-a2b4-9bbceb490857-c000.snappy.parquet4").printSchema()

    root
     |-- bo: boolean (nullable = true)
     |-- opbol: boolean (nullable = true)

Meanwhile, the parquet file formed does have nullable info:

    []batchprod-report000:/tmp/dimakamalov-batch$ aws s3 ls s3://asana-stats/tmp_dima/parquet_check_schema/
    2018-10-17 21:03:52 0 _SUCCESS
    2018-10-17 21:03:50 504 part-00000-b1bf4a19-d9fe-4ece-a2b4-9bbceb490857-c000.snappy.parquet
    []batchprod-report000:/tmp/dimakamalov-batch$ aws s3 cp s3://asana-stats/tmp_dima/parquet_check_schema/part-00000-b1bf4a19-d9fe-4ece-a2b4-9bbceb490857-c000.snappy.parquet .
    download: s3://asana-stats/tmp_dima/parquet_check_schema/part-00000-b1bf4a19-d9fe-4ece-a2b4-9bbceb490857-c000.snappy.parquet to ./part-00000-b1bf4a19-d9fe-4ece-a2b4-9bbceb490857-c000.snappy.parquet
    []batchprod-report000:/tmp/dimakamalov-batch$ java -jar parquet-tools-1.8.2.jar schema part-00000-b1bf4a19-d9fe-4ece-a2b4-9bbceb490857-c000.snappy.parquet
    message spark_schema {
      required boolean bo;
      optional boolean opbol;
    }

Closes apache#22759 from dima-asana/dima-asana-nullable-parquet-doc.

Authored-by: dima-asana <42555784+dima-asana@users.noreply.github.com>
Signed-off-by: Sean Owen <sean.owen@databricks.com>

Commit bd00f10

[SPARK-26196][SPARK-26281][WEBUI] Total tasks title in the stage page is incorrect when there are failed or killed tasks and update duration metrics

## What changes were proposed in this pull request?

This PR fixes 3 issues:

1) The total tasks message in the tasks table is incorrect when there are failed or killed tasks
2) Sorting of the "Duration" column is not correct
3) Durations in the aggregated tasks summary table and the tasks table do not match

Total tasks = numCompleteTasks + numActiveTasks + numKilledTasks + numFailedTasks;

Corrected the duration metrics in the tasks table to use executorRunTime, based on the PR apache#23081

## How was this patch tested?

Test steps:

1)

```
bin/spark-shell
scala > sc.parallelize(1 to 100, 10).map{ x => throw new RuntimeException("Bad executor")}.collect()
```

A screenshot taken after the patch was attached.

2) Duration metrics: screenshots taken before and after the patch were attached.

Closes apache#23160 from shahidki31/totalTasks.

Authored-by: Shahid <shahidki31@gmail.com>
Signed-off-by: Sean Owen <sean.owen@databricks.com>

Commit 3b8ae23

[SPARK-24333][ML][PYTHON] Add fit with validation set to spark.ml GBT: Python API

## What changes were proposed in this pull request?

Add validationIndicatorCol and validationTol to GBT Python.

## How was this patch tested?

Add test in doctest to test the new API.

Closes apache#21465 from huaxingao/spark-24333.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>
Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

Commit 20278e7

[SPARK-26304][SS] Add default value to spark.kafka.sasl.kerberos.service.name parameter

## What changes were proposed in this pull request?

spark.kafka.sasl.kerberos.service.name is an optional parameter, but most of the time the value `kafka` has to be set. As I've written in the JIRA, the reasoning is as follows:

* Kafka's configuration guide suggests the same value: https://kafka.apache.org/documentation/#security_sasl_kerberos_brokerconfig
* It would be easier for Spark users, who would need less configuration
* Other streaming engines are doing the same

In this PR I've changed the parameter from optional to `WithDefault` and set `kafka` as the default value.

## How was this patch tested?

Available unit tests + on cluster.

Closes apache#23254 from gaborgsomogyi/SPARK-26304.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>
Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Commit 9b1f6c8

Commits on Dec 8, 2018

[SPARK-26266][BUILD] Update to Scala 2.12.8

## What changes were proposed in this pull request?

Update to Scala 2.12.8

## How was this patch tested?

Existing tests.

Closes apache#23218 from srowen/SPARK-26266.

Authored-by: Sean Owen <sean.owen@databricks.com>
Signed-off-by: Sean Owen <sean.owen@databricks.com>

Commit 2ea9792

[SPARK-24207][R] follow-up PR for SPARK-24207 to fix code style problems

## What changes were proposed in this pull request? follow-up PR for SPARK-24207 to fix code style problems Closes apache#23256 from huaxingao/spark-24207-cnt. Authored-by: Huaxin Gao <huaxing@us.ibm.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Commit 678e1ac

[SPARK-26021][SQL][FOLLOWUP] only deal with NaN and -0.0 in UnsafeWriter

## What changes were proposed in this pull request? A followup of apache#23043 There are 4 places we need to deal with NaN and -0.0: 1. comparison expressions. `-0.0` and `0.0` should be treated as same. Different NaNs should be treated as same. 2. Join keys. `-0.0` and `0.0` should be treated as same. Different NaNs should be treated as same. 3. grouping keys. `-0.0` and `0.0` should be assigned to the same group. Different NaNs should be assigned to the same group. 4. window partition keys. `-0.0` and `0.0` should be treated as same. Different NaNs should be treated as same. The case 1 is OK. Our comparison already handles NaN and -0.0, and for struct/array/map, we will recursively compare the fields/elements. Case 2, 3 and 4 are problematic, as they compare `UnsafeRow` binary directly, and different NaNs have different binary representation, and the same thing happens for -0.0 and 0.0. To fix it, a simple solution is: normalize float/double when building unsafe data (`UnsafeRow`, `UnsafeArrayData`, `UnsafeMapData`). Then we don't need to worry about it anymore. Following this direction, this PR moves the handling of NaN and -0.0 from `Platform` to `UnsafeWriter`, so that places like `UnsafeRow.setFloat` will not handle them, which reduces the perf overhead. It's also easier to add comments explaining why we do it in `UnsafeWriter`. ## How was this patch tested? existing tests Closes apache#23239 from cloud-fan/minor. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>
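To illustrate why comparing raw bytes goes wrong for `-0.0` and NaN, here is a minimal Python sketch. It is not Spark's `UnsafeWriter` code; the helper names `double_bits` and `normalize_double` are hypothetical and only mirror the idea of normalizing a value before it is written.

```python
import math
import struct


def double_bits(x: float) -> bytes:
    """Return the raw IEEE-754 bytes of a double (big-endian)."""
    return struct.pack(">d", x)


def normalize_double(x: float) -> float:
    """Hypothetical normalizer: one canonical NaN, and -0.0 becomes 0.0."""
    if math.isnan(x):
        return float("nan")
    if x == 0.0:  # true for both 0.0 and -0.0
        return 0.0
    return x


# 0.0 and -0.0 compare equal as numbers, but their bytes differ,
# so a byte-wise comparison of join or grouping keys would separate them.
print(0.0 == -0.0)                            # numeric comparison
print(double_bits(0.0) == double_bits(-0.0))  # binary comparison

# After normalization the binary forms agree.
print(double_bits(normalize_double(0.0)) == double_bits(normalize_double(-0.0)))
```

Normalizing once at write time means every later binary comparison can stay a plain byte comparison, which is the trade-off the commit message describes.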

Commit bdf3284

Commits on Dec 9, 2018

[SPARK-25132][SQL][FOLLOWUP][DOC] Add migration doc for case-insensit…

…ive field resolution when reading from Parquet ## What changes were proposed in this pull request? apache#22148 introduces a behavior change. According to discussion at apache#22184, this PR updates migration guide when upgrade from Spark 2.3 to 2.4. ## How was this patch tested? N/A Closes apache#23238 from seancxmao/SPARK-25132-doc-2.4. Authored-by: seancxmao <seancxmao@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

Commit 55276d3

[SPARK-26193][SQL] Implement shuffle write metrics in SQL

## What changes were proposed in this pull request? 1. Implement `SQLShuffleWriteMetricsReporter` on the SQL side as the customized `ShuffleWriteMetricsReporter`. 2. Add shuffle write metrics to `ShuffleExchangeExec`, and use these metrics to create corresponding `SQLShuffleWriteMetricsReporter` in shuffle dependency. 3. Rework on `ShuffleMapTask` to add new class named `ShuffleWriteProcessor` which control shuffle write process, we use sql shuffle write metrics by customizing a ShuffleWriteProcessor on SQL side. ## How was this patch tested? Add UT in SQLMetricsSuite. Manually test locally, update screen shot to document attached in JIRA. Closes apache#23207 from xuanyuanking/SPARK-26193. Authored-by: Yuanjian Li <xyliyuanjian@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Commit 877f82c

[SPARK-26283][CORE] Enable reading from open frames of zstd, when rea…

…ding zstd compressed eventLog ## What changes were proposed in this pull request? Root cause: Prior to Spark 2.4, when zstd is enabled for eventLog compression, opening an in-progress application from the history server always throws an exception in the Application UI. From 2.4 onward, the UI displays information based on the completed frames in the zstd-compressed eventLog, but incomplete frames of an in-progress application are still not read. This PR adds `setContinuous(true)` when reading the input stream from the eventLog, so that open frames can be read as well. (By default `isContinuous=false` for the zstd input stream, and trying to read an open frame throws a truncated error.) ## How was this patch tested? Test steps: 1) Add the configurations in the spark-defaults.conf (i) spark.eventLog.compress true (ii) spark.io.compression.codec zstd 2) Restart history server 3) bin/spark-shell 4) sc.parallelize(1 to 1000, 1000).count 5) Open app UI from the history server UI **Before fix**  **After fix:**  Closes apache#23241 from shahidki31/zstdEventLog. Authored-by: Shahid <shahidki31@gmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com>

Commit ec506bd

Commits on Dec 10, 2018

[SPARK-26287][CORE] Don't need to create an empty spill file when mem…

…ory has no records ## What changes were proposed in this pull request? If there are no records in memory, then we don't need to create an empty temp spill file. ## How was this patch tested? Existing tests (Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests) (If this patch involves UI changes, please attach a screenshot; otherwise, remove this) Please review http://spark.apache.org/contributing.html before opening a pull request. Closes apache#23225 from wangjiaochun/ShufflSorter. Authored-by: 10087686 <wang.jiaochun@zte.com.cn> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

Commit 403c8d5

[SPARK-26307][SQL] Fix CTAS when INSERT a partitioned table using Hiv…

…e serde ## What changes were proposed in this pull request? This is a Spark 2.3 regression introduced in apache#20521. We should add the partition info for InsertIntoHiveTable in CreateHiveTableAsSelectCommand. Otherwise, we will hit the following error by running the newly added test case: ``` [info] - CTAS: INSERT a partitioned table using Hive serde *** FAILED *** (829 milliseconds) [info] org.apache.spark.SparkException: Requested partitioning does not match the tab1 table: [info] Requested partitions: [info] Table partitions: part [info] at org.apache.spark.sql.hive.execution.InsertIntoHiveTable.processInsert(InsertIntoHiveTable.scala:179) [info] at org.apache.spark.sql.hive.execution.InsertIntoHiveTable.run(InsertIntoHiveTable.scala:107) ``` ## How was this patch tested? Added a test case. Closes apache#23255 from gatorsmile/fixCTAS. Authored-by: gatorsmile <gatorsmile@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Commit 3bc83de

[SPARK-26319][SQL][TEST] Add appendReadColumns Unit Test for HiveShim…

…Suite ## What changes were proposed in this pull request? Add appendReadColumns Unit Test for HiveShimSuite. ## How was this patch tested? ``` $ build/sbt > project hive > testOnly *HiveShimSuite ``` Closes apache#23268 from sadhen/refactor/hiveshim. Authored-by: Darcy Shen <sadhen@zoho.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Commit c8ac6ae

[SPARK-26286][TEST] Add MAXIMUM_PAGE_SIZE_BYTES exception bound unit …

…test ## What changes were proposed in this pull request? Add MAXIMUM_PAGE_SIZE_BYTES Exception test ## How was this patch tested? Existing tests (Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests) (If this patch involves UI changes, please attach a screenshot; otherwise, remove this) Please review http://spark.apache.org/contributing.html before opening a pull request. Closes apache#23226 from wangjiaochun/BytesToBytesMapSuite. Authored-by: 10087686 <wang.jiaochun@zte.com.cn> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Commit 42e8c38

[MINOR][DOC] Update the condition description of serialized shuffle

## What changes were proposed in this pull request? `1. The shuffle dependency specifies no aggregation or output ordering.` If the shuffle dependency specifies aggregation but aggregates only on the reduce side, serialized shuffle can still be used. `3. The shuffle produces fewer than 16777216 output partitions.` If the number of output partitions is exactly 16777216, serialized shuffle can still be used. See the method `canUseSerializedShuffle`. ## How was this patch tested? N/A Closes apache#23228 from 10110346/SerializedShuffle_doc. Authored-by: liuxian <liu.xian3@zte.com.cn> Signed-off-by: Wenchen Fan <wenchen@databricks.com>
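The corrected conditions can be sketched as a small predicate. This is an illustrative Python sketch, not the Scala `canUseSerializedShuffle` method itself: the `ShuffleDep` class and its field names are hypothetical, and the second condition (the serializer supports relocation of serialized values) is assumed here because the commit message quotes only conditions 1 and 3.

```python
from dataclasses import dataclass

# Upper bound quoted in the commit message (2**24); the bound is inclusive.
MAX_SERIALIZED_SHUFFLE_PARTITIONS = 16777216


@dataclass
class ShuffleDep:
    """Hypothetical stand-in for a shuffle dependency."""
    serializer_supports_relocation: bool  # assumed condition 2
    map_side_combine: bool                # aggregation performed on the map side
    num_partitions: int


def can_use_serialized_shuffle(dep: ShuffleDep) -> bool:
    if not dep.serializer_supports_relocation:
        return False
    # Reduce-side-only aggregation is fine; only map-side combine rules it out.
    if dep.map_side_combine:
        return False
    # Exactly 16777216 partitions is still allowed.
    if dep.num_partitions > MAX_SERIALIZED_SHUFFLE_PARTITIONS:
        return False
    return True


print(can_use_serialized_shuffle(ShuffleDep(True, False, 16777216)))
print(can_use_serialized_shuffle(ShuffleDep(True, False, 16777217)))
```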

Commit 9794923

Commit 0bf6c77

Commit b1a724b

[SPARK-24958][CORE] Add memory from procfs to executor metrics.

This adds the entire memory used by spark’s executor (as measured by procfs) to the executor metrics. The memory usage is collected from the entire process tree under the executor. The metrics are subdivided into memory used by java, by python, and by other processes, to aid users in diagnosing the source of high memory usage. The additional metrics are sent to the driver in heartbeats, using the mechanism introduced by SPARK-23429. This also slightly extends that approach to allow one ExecutorMetricType to collect multiple metrics. Added unit tests and also tested on a live cluster. Closes apache#22612 from rezasafi/ptreememory2. Authored-by: Reza Safi <rezasafi@cloudera.com> Signed-off-by: Imran Rashid <irashid@cloudera.com>

Commit 90c77ea

[SPARK-26317][BUILD] Upgrade SBT to 0.13.18

## What changes were proposed in this pull request? SBT 0.13.14 ~ 1.1.1 has a bug on accessing `java.util.Base64.getDecoder` with JDK9+. It's fixed at 1.1.2 and backported to [0.13.18 (released on Nov 28th)](https://github.com/sbt/sbt/releases/tag/v0.13.18). This PR aims to update SBT. ## How was this patch tested? Pass the Jenkins with the building and existing tests. Closes apache#23270 from dongjoon-hyun/SPARK-26317. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

Commit 0a37da6

Commits on Dec 11, 2018

[SPARK-25696] The storage memory displayed on spark Application UI is…

… incorrect. ## What changes were proposed in this pull request? In the reported heartbeat information, memory values are in bytes; the formatBytes() function in the utils.js file converts them before they are displayed in the UI. formatBytes uses 1000 as the base of the unit conversion, but it should use 1024. This PR changes the base of the unit conversion in formatBytes to 1024. ## How was this patch tested? manual tests Please review http://spark.apache.org/contributing.html before opening a pull request. Closes apache#22683 from httfighter/SPARK-25696. Lead-authored-by: 韩田田00222924 <han.tiantian@zte.com.cn> Co-authored-by: han.tiantian@zte.com.cn <han.tiantian@zte.com.cn> Signed-off-by: Sean Owen <sean.owen@databricks.com>
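The effect of the conversion base is easy to see in a small sketch. The function below is a hypothetical Python re-creation of the idea, not the JavaScript `formatBytes` from `utils.js`; the unit labels and the one-decimal rounding are assumptions made for illustration.

```python
def format_bytes(num_bytes: int, base: int = 1024) -> str:
    """Format a byte count using the given conversion base (illustrative only)."""
    units = ["B", "KB", "MB", "GB", "TB", "PB"]
    value = float(num_bytes)
    idx = 0
    # Divide by the base until the value fits the current unit.
    while value >= base and idx < len(units) - 1:
        value /= base
        idx += 1
    return f"{value:.1f} {units[idx]}"


one_gib = 1024 ** 3  # 1073741824 bytes
print(format_bytes(one_gib))             # base 1024
print(format_bytes(one_gib, base=1000))  # base 1000 overstates the same amount
```

With base 1000, a 1 GiB storage pool is shown as roughly 1.1 GB, which is the kind of mismatch the commit fixes.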

Commit 82c1ac4

[SPARK-19827][R] spark.ml R API for PIC

## What changes were proposed in this pull request? Add PowerIterationCluster (PIC) in R ## How was this patch tested? Add test case Closes apache#23072 from huaxingao/spark-19827. Authored-by: Huaxin Gao <huaxing@us.ibm.com> Signed-off-by: Sean Owen <sean.owen@databricks.com>

Commit 05cf81e

[SPARK-26312][SQL] Replace RDDConversions.rowToRowRdd with RowEncoder…

… to improve its conversion performance ## What changes were proposed in this pull request? `RDDConversions` got disproportionately slower as the number of columns in the query increased, because the type of `converters` was `scala.collection.immutable.::`, which is a subtype of list. This PR removes `RDDConversions` and uses `RowEncoder` to convert the Row to InternalRow. The test of `PrunedScanSuite` for 2000 columns and 20k rows takes 409 seconds before this PR, and 361 seconds after. ## How was this patch tested? Test case of `PrunedScanSuite` Closes apache#23262 from eatoncys/toarray. Authored-by: 10129659 <chen.yanshan@zte.com.cn> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Commit cbe9230

[SPARK-26293][SQL] Cast exception when having python udf in subquery

## What changes were proposed in this pull request? This is a regression introduced by apache#22104 at Spark 2.4.0. When we have Python UDF in subquery, we will hit an exception ``` Caused by: java.lang.ClassCastException: org.apache.spark.sql.catalyst.expressions.AttributeReference cannot be cast to org.apache.spark.sql.catalyst.expressions.PythonUDF at scala.collection.immutable.Stream.map(Stream.scala:414) at org.apache.spark.sql.execution.python.EvalPythonExec.$anonfun$doExecute$2(EvalPythonExec.scala:98) at org.apache.spark.rdd.RDD.$anonfun$mapPartitions$2(RDD.scala:815) ... ``` apache#22104 turned `ExtractPythonUDFs` from a physical rule to optimizer rule. However, there is a difference between a physical rule and optimizer rule. A physical rule always runs once, an optimizer rule may be applied twice on a query tree even the rule is located in a batch that only runs once. For a subquery, the `OptimizeSubqueries` rule will execute the entire optimizer on the query plan inside subquery. Later on subquery will be turned to joins, and the optimizer rules will be applied to it again. Unfortunately, the `ExtractPythonUDFs` rule is not idempotent. When it's applied twice on a query plan inside subquery, it will produce a malformed plan. It extracts Python UDF from Python exec plans. This PR proposes 2 changes to be double safe: 1. `ExtractPythonUDFs` should skip python exec plans, to make the rule idempotent 2. `ExtractPythonUDFs` should skip subquery ## How was this patch tested? a new test. Closes apache#23248 from cloud-fan/python. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>
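The idempotency problem can be reproduced with a toy plan tree. The sketch below is plain Python and does not use Catalyst; the `Node` class, the `udf:`/`attr:` string encoding, and the `skip_eval_nodes` flag are all hypothetical, chosen only to show what happens when an extraction rule runs twice.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    kind: str                      # "project", "eval_python", or "scan"
    exprs: List[str]               # "udf:<name>" marks a Python UDF call
    child: Optional["Node"] = None


def extract_udfs(plan: Optional[Node], skip_eval_nodes: bool) -> Optional[Node]:
    """Pull UDF calls out of a node into a new eval_python node beneath it."""
    if plan is None:
        return None
    child = extract_udfs(plan.child, skip_eval_nodes)
    if skip_eval_nodes and plan.kind == "eval_python":
        return Node(plan.kind, plan.exprs, child)  # already extracted: leave alone
    udfs = [e for e in plan.exprs if e.startswith("udf:")]
    if not udfs:
        return Node(plan.kind, plan.exprs, child)
    # Replace each UDF call with a reference to the eval node's output.
    rewritten = ["attr:" + e[4:] if e.startswith("udf:") else e for e in plan.exprs]
    return Node(plan.kind, rewritten, Node("eval_python", udfs, child))


plan = Node("project", ["udf:f", "a"], Node("scan", ["a"]))

# Without the guard, a second pass rewrites the eval node itself, leaving an
# attribute reference where a UDF is expected.
broken = extract_udfs(extract_udfs(plan, False), False)
print(broken.child.exprs)

# With the guard, applying the rule twice gives the same plan as applying it once.
once = extract_udfs(plan, True)
print(extract_udfs(once, True) == once)
```

The malformed eval node in the unguarded run is analogous to the `AttributeReference cannot be cast to PythonUDF` failure quoted above.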

Commit 7d5f6e8

[SPARK-26303][SQL] Return partial results for bad JSON records

## What changes were proposed in this pull request? In the PR, I propose to return partial results from JSON datasource and JSON functions in the PERMISSIVE mode if some of JSON fields are parsed and converted to desired types successfully. The changes are made only for `StructType`. Whole bad JSON records are placed into the corrupt column specified by the `columnNameOfCorruptRecord` option or SQL config. Partial results are not returned for malformed JSON input. ## How was this patch tested? Added new UT which checks converting JSON strings with one invalid and one valid field at the end of the string. Closes apache#23253 from MaxGekk/json-bad-record. Lead-authored-by: Maxim Gekk <max.gekk@gmail.com> Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>
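As a rough illustration of the PERMISSIVE behavior described here, the following Python sketch parses one record against a flat schema. It is a simplification under stated assumptions: Spark's JSON datasource parses the input as a stream, whereas this sketch uses `json.loads`; the function `parse_record`, the schema-as-dict representation, and the use of `_corrupt_record` as the column name are choices made for the example.

```python
import json


def parse_record(line, schema, corrupt_col="_corrupt_record"):
    """Return a row dict; fields that fail conversion are None (illustrative only)."""
    row = {name: None for name in schema}
    row[corrupt_col] = None
    try:
        obj = json.loads(line)
    except json.JSONDecodeError:
        # Malformed JSON: no partial result, only the corrupt column is set.
        row[corrupt_col] = line
        return row
    if not isinstance(obj, dict):
        row[corrupt_col] = line
        return row
    bad = False
    for name, convert in schema.items():
        if obj.get(name) is None:
            continue
        try:
            row[name] = convert(obj[name])
        except (TypeError, ValueError):
            bad = True  # keep the other fields, remember the record was bad
    if bad:
        row[corrupt_col] = line  # the whole bad record goes to the corrupt column
    return row


schema = {"a": int, "b": float}
print(parse_record('{"a": "x", "b": 2.5}', schema))  # partial result: b survives
print(parse_record('{"a": 1, "b": ', schema))        # malformed: nothing survives
```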

Commit 4e1d859

[SPARK-26327][SQL] Bug fix for `FileSourceScanExec` metrics update an…

…d name changing ## What changes were proposed in this pull request? As described in [SPARK-26327](https://issues.apache.org/jira/browse/SPARK-26327), `postDriverMetricUpdates` was called in the wrong place, which caused this bug. The fix splits the initialization of `selectedPartitions` from the metrics-updating logic and adds the updating logic to the `inputRDD` initialization, so that it takes effect in both the code generation node and the normal node. Also rename `metadataTime` to `fileListingTime` for clearer meaning. ## How was this patch tested? New test case in `SQLMetricsSuite`. Manual test: | | Before | After | |---------|:--------:|:-------:| | CodeGen ||| | Normal ||| Closes apache#23277 from xuanyuanking/SPARK-26327. Authored-by: Yuanjian Li <xyliyuanjian@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Commit bd7df6b

[SPARK-26265][CORE] Fix deadlock in BytesToBytesMap.MapIterator when …

…locking both BytesToBytesMap.MapIterator and TaskMemoryManager ## What changes were proposed in this pull request? In `BytesToBytesMap.MapIterator.advanceToNextPage`, we first lock this `MapIterator` and then `TaskMemoryManager` when freeing a memory page by calling `freePage`. At the same time, another memory consumer may first lock `TaskMemoryManager` and then this `MapIterator` when it acquires memory and causes spilling on this `MapIterator`. The result is that the thread in `advanceToNextPage` holds the lock on the `MapIterator` object and waits for the lock on `TaskMemoryManager`, while the other consumer holds the lock on `TaskMemoryManager` and waits for the lock on the `MapIterator` object. To avoid this deadlock, this patch keeps a reference to the page to free and frees it after releasing the lock on `MapIterator`. ## How was this patch tested? Added a test and manually ran it 100 times to make sure there is no deadlock. Closes apache#23272 from viirya/SPARK-26265. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>
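The lock-ordering fix follows a common pattern: decide what to free while holding the first lock, then call into the second lock's owner only after the first lock is released. Below is a minimal Python sketch of that pattern. The class names echo the ones in the commit message, but the code is a hypothetical simplification and not Spark's implementation; passing `iterator_lock` into `free_page` exists purely to record whether the lock was held.

```python
import threading


class TaskMemoryManager:
    def __init__(self):
        self.lock = threading.Lock()
        self.freed = []

    def free_page(self, page, iterator_lock):
        with self.lock:
            # Instrumentation only: note whether the iterator lock is held now.
            self.freed.append((page, iterator_lock.locked()))


class MapIterator:
    def __init__(self, manager, pages):
        self.lock = threading.Lock()
        self.manager = manager
        self.pages = list(pages)
        self.current = None

    def advance_to_next_page(self):
        with self.lock:
            # Under the iterator lock: only remember which page to free.
            page_to_free = self.current
            self.current = self.pages.pop(0) if self.pages else None
        # Iterator lock released: taking the manager lock here cannot form
        # the iterator -> manager half of a lock-order cycle.
        if page_to_free is not None:
            self.manager.free_page(page_to_free, self.lock)


manager = TaskMemoryManager()
it = MapIterator(manager, ["page0", "page1"])
it.advance_to_next_page()  # moves to page0, nothing to free yet
it.advance_to_next_page()  # moves to page1, frees page0 outside the lock
print(manager.freed)
```

Because the two locks are never held together on this path, a consumer that takes them in the opposite order can no longer deadlock with it.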

Commit a3bbca9

[SPARK-26316][SPARK-21052] Revert hash join metrics in that causes pe…

…rformance degradation ## What changes were proposed in this pull request? The wrong implementation in the hash join metrics in [spark 21052](https://issues.apache.org/jira/browse/SPARK-21052) caused significant performance degradation in TPC-DS. And the result is [here](https://docs.google.com/spreadsheets/d/18a5BdOlmm8euTaRodyeWum9yu92mbWWu6JbhGXtr7yE/edit#gid=0) in TPC-DS 1TB scale. So we currently partial revert 21052.

**Cluster info:**

| | Master Node | Worker Nodes |
| -- | -- | -- |
| Node | 1x | 4x |
| Processor | Intel(R) Xeon(R) Platinum 8170 CPU 2.10GHz | Intel(R) Xeon(R) Platinum 8180 CPU 2.50GHz |
| Memory | 192 GB | 384 GB |
| Storage Main | 8 x 960G SSD | 8 x 960G SSD |
| Network | 10Gbe | |
| Role | CM Management, NameNode, Secondary NameNode, Resource Manager, Hive Metastore Server | DataNode, NodeManager |
| OS Version | CentOS 7.2 | CentOS 7.2 |
| Hadoop | Apache Hadoop 2.7.5 | Apache Hadoop 2.7.5 |
| Hive | Apache Hive 2.2.0 | |
| Spark | Apache Spark 2.1.0 & Apache Spark2.3.0 | |
| JDK version | 1.8.0_112 | 1.8.0_112 |

**Related parameters setting:**

| Component | Parameter | Value |
| -- | -- | -- |
| Yarn Resource Manager | yarn.scheduler.maximum-allocation-mb | 120GB |
| | yarn.scheduler.minimum-allocation-mb | 1GB |
| | yarn.scheduler.maximum-allocation-vcores | 121 |
| | Yarn.resourcemanager.scheduler.class | Fair Scheduler |
| Yarn Node Manager | yarn.nodemanager.resource.memory-mb | 120GB |
| | yarn.nodemanager.resource.cpu-vcores | 121 |
| Spark | spark.executor.memory | 110GB |
| | spark.executor.cores | 50 |

## How was this patch tested? N/A Closes apache#23269 from JkSelf/partial-revert-21052. Authored-by: jiake <ke.a.jia@intel.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Commit 5c67a9a

[SPARK-26300][SS] Remove a redundant `checkForStreaming` call

## What changes were proposed in this pull request? If `checkForContinuous` is called (`checkForStreaming` is called inside `checkForContinuous`), the `checkForStreaming` method is called twice in `createQuery`. This is unnecessary, and because `checkForStreaming` contains many statements, it is better to remove one of the calls. ## How was this patch tested? Existing unit tests in `StreamingQueryManagerSuite` and `ContinuousAggregationSuite` Closes apache#23251 from 10110346/isUnsupportedOperationCheckEnabled. Authored-by: liuxian <liu.xian3@zte.com.cn> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

Commit d811369

[SPARK-26239] File-based secret key loading for SASL.

This proposes an alternative way to load secret keys into a Spark application that is running on Kubernetes. Instead of automatically generating the secret, the secret key can reside in a file that is shared between both the driver and executor containers. Unit tests. Closes apache#23252 from mccheah/auth-secret-with-file. Authored-by: mcheah <mcheah@palantir.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Commit 57d6fbf

Commits on Dec 12, 2018

[SPARK-26193][SQL][FOLLOW UP] Read metrics rename and display text ch…

…anges ## What changes were proposed in this pull request? Follow up pr for apache#23207, include following changes: - Rename `SQLShuffleMetricsReporter` to `SQLShuffleReadMetricsReporter` to make it match with write side naming. - Display text changes for read side for naming consistent. - Rename function in `ShuffleWriteProcessor`. - Delete `private[spark]` in execution package. ## How was this patch tested? Existing tests. Closes apache#23286 from xuanyuanking/SPARK-26193-follow. Authored-by: Yuanjian Li <xyliyuanjian@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Commit bd8da37

[SPARK-19827][R][FOLLOWUP] spark.ml R API for PIC

## What changes were proposed in this pull request? Follow up style fixes to PIC in R; see apache#23072 ## How was this patch tested? Existing tests. Closes apache#23292 from srowen/SPARK-19827.2. Authored-by: Sean Owen <sean.owen@databricks.com> Signed-off-by: Sean Owen <sean.owen@databricks.com>

Commit 79e36e2

[SPARK-24102][ML][MLLIB] ML Evaluators should use weight column - add…

…ed weight column for regression evaluator ## What changes were proposed in this pull request? The evaluators BinaryClassificationEvaluator, RegressionEvaluator, and MulticlassClassificationEvaluator and the corresponding metrics classes BinaryClassificationMetrics, RegressionMetrics and MulticlassMetrics should use sample weight data. I've closed the PR: apache#16557 as recommended in favor of creating three pull requests, one for each of the evaluators (binary/regression/multiclass) to make it easier to review/update. The updates to the regression metrics were based on (and updated with new changes based on comments): https://issues.apache.org/jira/browse/SPARK-11520 ("RegressionMetrics should support instance weights") but the pull request was closed as the changes were never checked in. ## How was this patch tested? I added tests to the metrics class. Closes apache#17085 from imatiach-msft/ilmat/regression-evaluate. Authored-by: Ilya Matiach <ilmat@microsoft.com> Signed-off-by: Sean Owen <sean.owen@databricks.com>

Commit 570b8f3

[SPARK-25877][K8S] Move all feature logic to feature classes.

This change makes the driver and executor builders a lot simpler by encapsulating almost all feature logic into the respective feature classes. The only logic that remains is the creation of the initial pod, which needs to happen before anything else so is better to be left in the builder class. Most feature classes already behave fine when the config has nothing they should handle, but a few minor tweaks had to be added. Unit tests were also updated or added to account for these. The builder suites were simplified a lot and just test the remaining pod-related code in the builders themselves. Author: Marcelo Vanzin <vanzin@cloudera.com> Closes apache#23220 from vanzin/SPARK-25877.

Commit a63e7b2

Commits on Dec 13, 2018

[SPARK-25277][YARN] YARN applicationMaster metrics should not registe…

…r static metrics ## What changes were proposed in this pull request? YARN applicationMaster metrics registration introduced in SPARK-24594 causes further registration of static metrics (Codegenerator and HiveExternalCatalog) and of JVM metrics, which I believe do not belong in this context. This looks like an unintended side effect of using the start method of [[MetricsSystem]]. A possible solution proposed here, is to introduce startNoRegisterSources to avoid these additional registrations of static sources and of JVM sources in the case of YARN applicationMaster metrics (this could be useful for other metrics that may be added in the future). ## How was this patch tested? Manually tested on a YARN cluster, Closes apache#22279 from LucaCanali/YarnMetricsRemoveExtraSourceRegistration. Lead-authored-by: Luca Canali <luca.canali@cern.ch> Co-authored-by: LucaCanali <luca.canali@cern.ch> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Commit 2920438

[SPARK-26322][SS] Add spark.kafka.sasl.token.mechanism to ease delega…

…tion token configuration. ## What changes were proposed in this pull request? When Kafka delegation token obtained, SCRAM `sasl.mechanism` has to be configured for authentication. This can be configured on the related source/sink which is inconvenient from user perspective. Such granularity is not required and this configuration can be implemented with one central parameter. In this PR `spark.kafka.sasl.token.mechanism` added to configure this centrally (default: `SCRAM-SHA-512`). ## How was this patch tested? Existing unit tests + on cluster. Closes apache#23274 from gaborgsomogyi/SPARK-26322. Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Commit 6daa783

[SPARK-26297][SQL] improve the doc of Distribution/Partitioning

## What changes were proposed in this pull request? Some documents of `Distribution/Partitioning` are stale and misleading, this PR fixes them: 1. `Distribution` never have intra-partition requirement 2. `OrderedDistribution` does not require tuples that share the same value being colocated in the same partition. 3. `RangePartitioning` can provide a weaker guarantee for a prefix of its `ordering` expressions. ## How was this patch tested? comment-only PR. Closes apache#23249 from cloud-fan/doc. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Commit 05b68d5

[SPARK-26348][SQL][TEST] make sure expression is resolved during test

## What changes were proposed in this pull request? cleanup some tests to make sure expression is resolved during test. ## How was this patch tested? test-only PR Closes apache#23297 from cloud-fan/test. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Commit 3238e3d

[SPARK-26355][PYSPARK] Add a workaround for PyArrow 0.11.

## What changes were proposed in this pull request? In PyArrow 0.11, there is a API breaking change. - [ARROW-1949](https://issues.apache.org/jira/browse/ARROW-1949) - [Python/C++] Add option to Array.from_pandas and pyarrow.array to perform unsafe casts. This causes test failures in `ScalarPandasUDFTests.test_vectorized_udf_null_(byte|short|int|long)`: ``` File "/Users/ueshin/workspace/apache-spark/spark/python/pyspark/worker.py", line 377, in main process() File "/Users/ueshin/workspace/apache-spark/spark/python/pyspark/worker.py", line 372, in process serializer.dump_stream(func(split_index, iterator), outfile) File "/Users/ueshin/workspace/apache-spark/spark/python/pyspark/serializers.py", line 317, in dump_stream batch = _create_batch(series, self._timezone) File "/Users/ueshin/workspace/apache-spark/spark/python/pyspark/serializers.py", line 286, in _create_batch arrs = [create_array(s, t) for s, t in series] File "/Users/ueshin/workspace/apache-spark/spark/python/pyspark/serializers.py", line 284, in create_array return pa.Array.from_pandas(s, mask=mask, type=t) File "pyarrow/array.pxi", line 474, in pyarrow.lib.Array.from_pandas return array(obj, mask=mask, type=type, safe=safe, from_pandas=True, File "pyarrow/array.pxi", line 169, in pyarrow.lib.array return _ndarray_to_array(values, mask, type, from_pandas, safe, File "pyarrow/array.pxi", line 69, in pyarrow.lib._ndarray_to_array check_status(NdarrayToArrow(pool, values, mask, from_pandas, File "pyarrow/error.pxi", line 81, in pyarrow.lib.check_status raise ArrowInvalid(message) ArrowInvalid: Floating point value truncated ``` We should add a workaround to support PyArrow 0.11. ## How was this patch tested? In my local environment. Closes apache#23305 from ueshin/issues/SPARK-26355/pyarrow_0.11. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Commit 8edae94

[MINOR][R] Fix indents of sparkR welcome message to be consistent with pyspark and spark-shell

## What changes were proposed in this pull request? 1. Removed the empty space at the beginning of the welcome message lines of sparkR to be consistent with the welcome message of `pyspark` and `spark-shell`. 2. Set the indent of the logo message lines to 3 to be consistent with the welcome message of `pyspark` and `spark-shell`. Output of `pyspark`: ``` Welcome to ____ __ / __/__ ___ _____/ /__ _\ \/ _ \/ _ `/ __/ '_/ /__ / .__/\_,_/_/ /_/\_\ version 2.4.0 /_/ Using Python version 3.6.6 (default, Jun 28 2018 11:07:29) SparkSession available as 'spark'. ``` Output of `spark-shell`: ``` Spark session available as 'spark'. Welcome to ____ __ / __/__ ___ _____/ /__ _\ \/ _ \/ _ `/ __/ '_/ /___/ .__/\_,_/_/ /_/\_\ version 2.4.0 /_/ Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_161) Type in expressions to have them evaluated. Type :help for more information. ``` ## How was this patch tested? Before: Output of `sparkR`: ``` Welcome to ____ __ / __/__ ___ _____/ /__ _\ \/ _ \/ _ `/ __/ '_/ /___/ .__/\_,_/_/ /_/\_\ version 2.4.0 /_/ SparkSession available as 'spark'. ``` After: ``` Welcome to ____ __ / __/__ ___ _____/ /__ _\ \/ _ \/ _ `/ __/ '_/ /___/ .__/\_,_/_/ /_/\_\ version 2.4.0 /_/ SparkSession available as 'spark'. ``` Closes apache#23293 from AzureQ/master. Authored-by: Qi Shao <qi.shao.nyu@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Commit 19b63c5

[MINOR][DOC] Fix comments of ConvertToLocalRelation rule

## What changes were proposed in this pull request? Some comment issues were left over when the `ConvertToLocalRelation` rule was added (see apache#22205/[SPARK-25212](https://issues.apache.org/jira/browse/SPARK-25212)). This PR fixes those comment issues. ## How was this patch tested? N/A Closes apache#23273 from seancxmao/ConvertToLocalRelation-doc. Authored-by: seancxmao <seancxmao@gmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com>

Commit f372609

[MINOR][DOC] update the condition description of BypassMergeSortShuffle

## What changes were proposed in this pull request? These three condition descriptions should be updated, following apache#23228: <li>no Ordering is specified,</li> <li>no Aggregator is specified, and</li> <li>the number of partitions is less than <code>spark.shuffle.sort.bypassMergeThreshold</code>. </li> 1. If the shuffle dependency specifies aggregation but only aggregates on the reduce side, BypassMergeSortShuffle can still be used. 2. If the number of output partitions is equal to `spark.shuffle.sort.bypassMergeThreshold` (e.g. 200), BypassMergeSortShuffle can still be used. ## How was this patch tested? N/A Closes apache#23281 from lcqzte10192193/wid-lcq-1211. Authored-by: lichaoqun <li.chaoqun@zte.com.cn> Signed-off-by: Sean Owen <sean.owen@databricks.com>
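The two corrected conditions for BypassMergeSortShuffle can be read as a small predicate. This is a hedged sketch in Python rather than Spark's Scala code: the function and parameter names are hypothetical, and the default of 200 is simply the example value quoted in the commit message.

```python
def should_bypass_merge_sort(map_side_combine, num_partitions,
                             bypass_merge_threshold=200):
    """Decide whether the bypass-merge-sort shuffle path can be used.

    Illustrative only. The two points from the commit message are:
      1. aggregation that happens only on the reduce side does not rule
         the bypass path out, so only map-side combining disqualifies it;
      2. a partition count exactly equal to the threshold still
         qualifies, so the comparison is <= rather than <.
    """
    if map_side_combine:
        return False
    return num_partitions <= bypass_merge_threshold


# Reduce-side-only aggregation with exactly 200 partitions still qualifies.
print(should_bypass_merge_sort(map_side_combine=False, num_partitions=200))
# One partition more than the threshold does not.
print(should_bypass_merge_sort(map_side_combine=False, num_partitions=201))
```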

Commit f69998a

[SPARK-26340][CORE] Ensure cores per executor is greater than cpu per task

Currently this check is only performed for the dynamic allocation use case in ExecutorAllocationManager. ## What changes were proposed in this pull request? Checks that the number of CPUs per task is lower than the number of cores per executor; otherwise an exception is thrown. ## How was this patch tested? Manual tests. Please review http://spark.apache.org/contributing.html before opening a pull request. Closes apache#23290 from ashangit/master. Authored-by: n.fraison <n.fraison@criteo.com> Signed-off-by: Sean Owen <sean.owen@databricks.com>
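The cores-per-executor check can be sketched as a simple validation function. This is an illustration under stated assumptions, not the Spark code: `validate_executor_cores` is a hypothetical name, the exception type is chosen for the example, and the sketch assumes that an executor with exactly as many cores as one task needs is still valid (the commit message does not spell out the equality case).

```python
def validate_executor_cores(executor_cores, cpus_per_task):
    """Reject configurations in which no task could ever be scheduled.

    Assumption: equality is allowed, because an executor with exactly
    cpus_per_task cores can still run one task at a time.
    """
    if executor_cores < cpus_per_task:
        raise ValueError(
            "cores per executor (%d) must not be lower than CPUs per task (%d)"
            % (executor_cores, cpus_per_task)
        )


validate_executor_cores(executor_cores=4, cpus_per_task=1)  # accepted
validate_executor_cores(executor_cores=2, cpus_per_task=2)  # accepted (assumed)

try:
    validate_executor_cores(executor_cores=1, cpus_per_task=2)
except ValueError as error:
    print("rejected:", error)
```

Failing fast at configuration time is the point of such a check: without it, a job whose tasks need more CPUs than any executor offers would wait indefinitely instead of reporting the misconfiguration.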

Commit 29b3eb6

[SPARK-26313][SQL] move `newScanBuilder` from Table to read related mix-in traits

## What changes were proposed in this pull request? As discussed in https://github.com/apache/spark/pull/23208/files#r239684490 , we should put `newScanBuilder` in read-related mix-in traits like `SupportsBatchRead`, to support write-only tables. In the `Append` operator, we should skip schema validation if it is not necessary. In the future we would introduce a capability API, so that a data source can tell Spark that it doesn't want to do validation. ## How was this patch tested? Existing tests. Closes apache#23266 from cloud-fan/ds-read. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Commit 6c1f7ba

[SPARK-26098][WEBUI] Show associated SQL query in Job page

## What changes were proposed in this pull request? For jobs associated with SQL queries, it would be easier to understand the context if the SQL query were shown on the Job detail page. Before the code change, it is hard to tell what the job is about from the job page: After the code change: After navigating to the associated SQL detail page, we can see the whole context: **For jobs that don't have an associated SQL query, the text won't be shown.** ## How was this patch tested? Manual test Closes apache#23068 from gengliangwang/addSQLID. Authored-by: Gengliang Wang <gengliang.wang@databricks.com> Signed-off-by: gatorsmile <gatorsmile@gmail.com>

Commit 524d1be

Commits on Dec 14, 2018

-

[SPARK-23886][SS] Update query status for ContinuousExecution

## What changes were proposed in this pull request? Added query status updates to ContinuousExecution. ## How was this patch tested? Existing unit tests + added ContinuousQueryStatusAndProgressSuite. Closes apache#23095 from gaborgsomogyi/SPARK-23886. Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Commit 362e472

[SPARK-26364][PYTHON][TESTING] Clean up imports in test_pandas_udf*

## What changes were proposed in this pull request? Clean up unconditional import statements and move them to the top. Conditional imports (pandas, numpy, pyarrow) are left as-is. ## How was this patch tested? Existing tests. Closes apache#23314 from icexelloss/clean-up-test-imports. Authored-by: Li Jin <ice.xelloss@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Commit 160e583

[SPARK-26360] remove redundant validateQuery call

## What changes were proposed in this pull request? Remove a redundant `KafkaWriter.validateQuery` call in `KafkaSourceProvider`. ## How was this patch tested? This only removes duplicate code, so I just built and ran the unit tests. Closes apache#23309 from JasonWayne/SPARK-26360. Authored-by: jasonwayne <wuwenjie0102@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Commit 9c481c7

[SPARK-26337][SQL][TEST] Add benchmark for LongToUnsafeRowMap

## What changes were proposed in this pull request? The performance issue in SPARK-26155 was reported on TPC-DS. I think it is better to add a benchmark for `LongToUnsafeRowMap`, which is the root cause of the performance regression. This makes it easier to show the performance difference between different metric implementations in `LongToUnsafeRowMap`. ## How was this patch tested? Manually ran the added benchmark. Closes apache#23284 from viirya/SPARK-26337. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Commit 93139af

[SPARK-26368][SQL] Make it clear that getOrInferFileFormatSchema doesn't create InMemoryFileIndex

## What changes were proposed in this pull request? I was looking at the code and it was a bit difficult to see the life cycle of the InMemoryFileIndex passed into getOrInferFileFormatSchema, because in one case it is passed in, and in another it is created inside getOrInferFileFormatSchema. It'd be easier to understand the life cycle if we move the creation of it out. ## How was this patch tested? This is a simple code move and should be covered by existing tests. Closes apache#23317 from rxin/SPARK-26368. Authored-by: Reynold Xin <rxin@databricks.com> Signed-off-by: gatorsmile <gatorsmile@gmail.com>

Commit 2d8838d

[SPARK-26370][SQL] Fix resolution of higher-order function for the same identifier.

## What changes were proposed in this pull request?

When a higher-order function uses a lambda variable with the same name as an existing column in `Filter`, or in anything else that uses `Analyzer.resolveExpressionBottomUp` during resolution, e.g.:

```scala
val df = Seq(
  (Seq(1, 9, 8, 7), 1, 2),
  (Seq(5, 9, 7), 2, 2),
  (Seq.empty, 3, 2),
  (null, 4, 2)
).toDF("i", "x", "d")

checkAnswer(df.filter("exists(i, x -> x % d == 0)"),
  Seq(Row(Seq(1, 9, 8, 7), 1, 2)))
checkAnswer(df.select("x").filter("exists(i, x -> x % d == 0)"),
  Seq(Row(1)))
```

the following exception happens:

```
java.lang.ClassCastException: org.apache.spark.sql.catalyst.expressions.BoundReference cannot be cast to org.apache.spark.sql.catalyst.expressions.NamedExpression
  at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:237)
  at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)
  at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)
  at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)
  at scala.collection.TraversableLike.map(TraversableLike.scala:237)
  at scala.collection.TraversableLike.map$(TraversableLike.scala:230)
  at scala.collection.AbstractTraversable.map(Traversable.scala:108)
  at org.apache.spark.sql.catalyst.expressions.HigherOrderFunction.$anonfun$functionsForEval$1(higherOrderFunctions.scala:147)
  at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:237)
  at scala.collection.immutable.List.foreach(List.scala:392)
  at scala.collection.TraversableLike.map(TraversableLike.scala:237)
  at scala.collection.TraversableLike.map$(TraversableLike.scala:230)
  at scala.collection.immutable.List.map(List.scala:298)
  at org.apache.spark.sql.catalyst.expressions.HigherOrderFunction.functionsForEval(higherOrderFunctions.scala:145)
  at org.apache.spark.sql.catalyst.expressions.HigherOrderFunction.functionsForEval$(higherOrderFunctions.scala:145)
  at org.apache.spark.sql.catalyst.expressions.ArrayExists.functionsForEval$lzycompute(higherOrderFunctions.scala:369)
  at org.apache.spark.sql.catalyst.expressions.ArrayExists.functionsForEval(higherOrderFunctions.scala:369)
  at org.apache.spark.sql.catalyst.expressions.SimpleHigherOrderFunction.functionForEval(higherOrderFunctions.scala:176)
  at org.apache.spark.sql.catalyst.expressions.SimpleHigherOrderFunction.functionForEval$(higherOrderFunctions.scala:176)
  at org.apache.spark.sql.catalyst.expressions.ArrayExists.functionForEval(higherOrderFunctions.scala:369)
  at org.apache.spark.sql.catalyst.expressions.ArrayExists.nullSafeEval(higherOrderFunctions.scala:387)
  at org.apache.spark.sql.catalyst.expressions.SimpleHigherOrderFunction.eval(higherOrderFunctions.scala:190)
  at org.apache.spark.sql.catalyst.expressions.SimpleHigherOrderFunction.eval$(higherOrderFunctions.scala:185)
  at org.apache.spark.sql.catalyst.expressions.ArrayExists.eval(higherOrderFunctions.scala:369)
  at org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificPredicate.eval(Unknown Source)
  at org.apache.spark.sql.execution.FilterExec.$anonfun$doExecute$3(basicPhysicalOperators.scala:216)
  at org.apache.spark.sql.execution.FilterExec.$anonfun$doExecute$3$adapted(basicPhysicalOperators.scala:215)
  ...
```

because the `UnresolvedAttribute`s in `LambdaFunction` are unexpectedly resolved by the rule.

This PR uses a placeholder `UnresolvedNamedLambdaVariable` to prevent the unexpected resolution.

## How was this patch tested?

Added a test and modified some tests.

Closes apache#23320 from ueshin/issues/SPARK-26370/hof_resolution.

Authored-by: Takuya UESHIN <ueshin@databricks.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>
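The scoping that the SPARK-26370 fix is meant to preserve can be modelled in a few lines of plain Python. This is only a sketch of the intended semantics of `exists(i, x -> x % d == 0)`, not of the analyzer: the `exists` and `filter_rows` helpers are hypothetical, and null handling is simplified to "a null array never passes the filter".

```python
def exists(array, predicate):
    """SQL-like exists over an array; a null (None) array yields None."""
    if array is None:
        return None
    return any(predicate(element) for element in array)


# Same data as the Scala test in the commit message: columns i, x, d.
rows = [
    ([1, 9, 8, 7], 1, 2),
    ([5, 9, 7], 2, 2),
    ([], 3, 2),
    (None, 4, 2),
]


def filter_rows(rows):
    kept = []
    for i, x, d in rows:
        # Inside the lambda, the parameter x shadows the column x and is
        # bound to each array element; d still refers to the outer column.
        if exists(i, lambda x: x % d == 0):
            kept.append((i, x, d))
    return kept


print(filter_rows(rows))
```

Only the first row survives, because 8 is the only array element divisible by its row's `d`. The bug described above corresponds to the lambda parameter `x` being bound to the column `x` instead of the array element.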

Commit 3dda58a

[MINOR][SQL] Some errors in the notes.

## What changes were proposed in this pull request? When using ordinals to access a linked list, the time cost is O(n). ## How was this patch tested? Existing tests. Closes apache#23280 from CarolinePeng/update_Two. Authored-by: CarolinPeng <00244106@zte.intra> Signed-off-by: Sean Owen <sean.owen@databricks.com>

Commit d25e443

Commits on Dec 15, 2018

-

[SPARK-26265][CORE][FOLLOWUP] Put freePage into a finally block